Microsoft advances natural UI with Kinect SDK — from cnet.com by Jay Greene

Oregon State University student Alex Wiggins gestures to Kinect, which in turn makes a remote-control toy helicopter take off while teammates Ruma Paul (left) and Fabio Matsui (right) look on. (Credit: Microsoft)

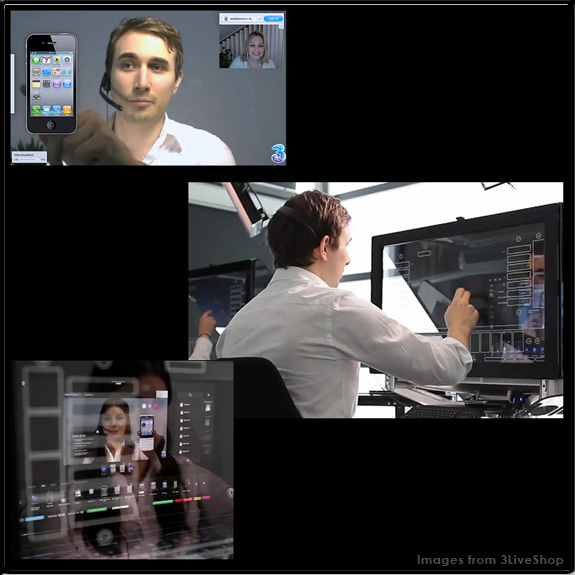

Multiscreen Patterns — from Precious-Forever.com by Christophe Stolle

Patterns to help understand and define strategies for the multiscreen world.

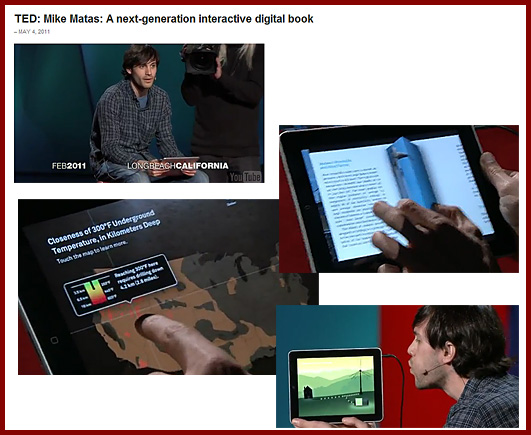

Mike Matas: A next-generation digital book (filmed March 2011)

About this talk

Software developer Mike Matas demos the first full-length interactive book for the iPad — with clever, swipeable video and graphics and some very cool data visualizations to play with. The book is “Our Choice,” Al Gore’s sequel to “An Inconvenient Truth.”

About Mike Matas

While at Apple, Mike Matas helped write the user interface for the iPhone and iPad. Now with Push Pop Press, he’s helping to rewrite the electronic book.

Why Angry Birds is so successful and popular: A cognitive teardown of the user experience — from Pulse > UX by Charles L. Mauro

Excerpt:

Simple yet engaging interaction concept: This seems an obvious point, but few realize that a simple interaction model need not be, and rarely is, procedurally simple. Simplification means once users have a relatively brief period of experience with the software, their mental model of how the interface behaves is well formed and fully embedded. This is known technically as schema formation. In truly great user interfaces, this critical bit of skill acquisition takes place during a specific use cycle known as the First User Experience or FUE. When users are able to construct a robust schema quickly, they routinely rate the user interface as “simple”. However, simple does not equal engaging. It is possible to create a user interface solution that is initially perceived by users as simple. However, the challenge is to create a desire by users to continue interaction with a system over time, what we call user “engagement”.

What makes a user interface engaging is adding more detail to the user’s mental model at just the right time. Angry Birds’ simple interaction model is easy to learn because it allows the user to quickly develop a mental model of the game’s interaction methodology, core strategy and scoring processes. It is engaging, in fact addictive, due to the carefully scripted expansion of the user’s mental model of the strategy component and incremental increases in problem/solution methodology. These little birds are packed with clever behaviors that expand the user’s mental model at just the point when game-level complexity is increased. The process of creating simple, engaging interaction models turns out to be exceedingly complex. Most groups developing software today think expansion of the user’s mental model is for the birds. Not necessarily so.

Other key items discussed:

- Simple yet engaging interaction concept

- Cleverly managed response time

- Short-term memory management

- Mystery

- How things sound

- How things look

- Measuring that which some say cannot be measured

From DSC:

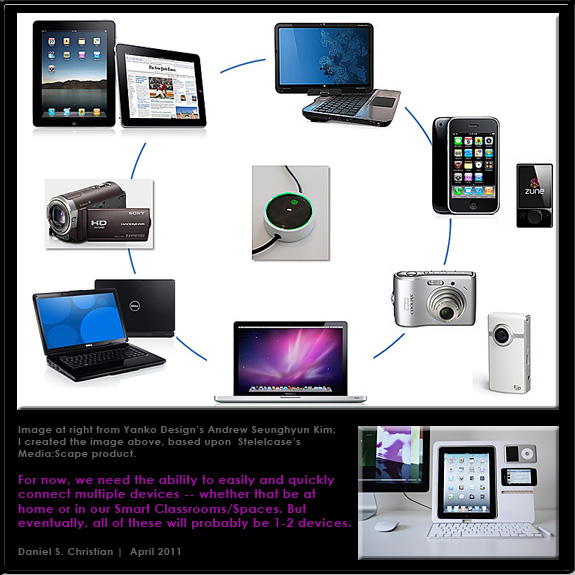

What Apple is able to do with many of its hardware and software products, what Charles describes here with Angry Birds, and what Steelcase did with the puck for its Media:Scape product, along with other examples, all point to the same thing: creating something that is “easy” is actually quite hard.

Mobile content is twice as difficult [usability] — from Jakob Nielsen

Summary:

When reading from an iPhone-sized screen, comprehension scores for complex Web content were 48% of desktop monitor scores.

It’s more painful to use the Web on mobile phones than on desktop computers for many reasons:

- Slower downloads

- No physical keyboard for data entry

- No mouse for selection; no mouse buttons to issue commands and access contextual menus (indeed fewer signaling states, as discussed further in our seminar on Applying HCI Principles to Real World Problems: a touchscreen only signals “finger-down/up,” whereas a mouse has hover state in addition to button press/release)

- Small screen (often with tiny text)

- Websites designed for desktop access instead of following the usability guidelines for mobile

- App UIs that lack consistency

New research by R.I. Singh and colleagues from the University of Alberta provides one more reason: it’s much harder to understand complicated information when you’re reading through a peephole.

From DSC:

With the above said, the mobile learning wave cannot, and most likely should not, be stopped. The types of devices we end up using may change, but mobile learning will move forward.

For one example of this, see: