From DSC:

Given:

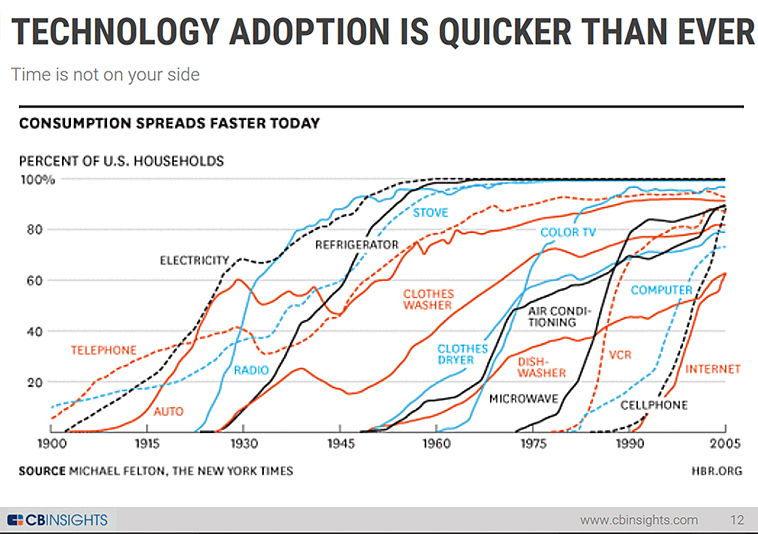

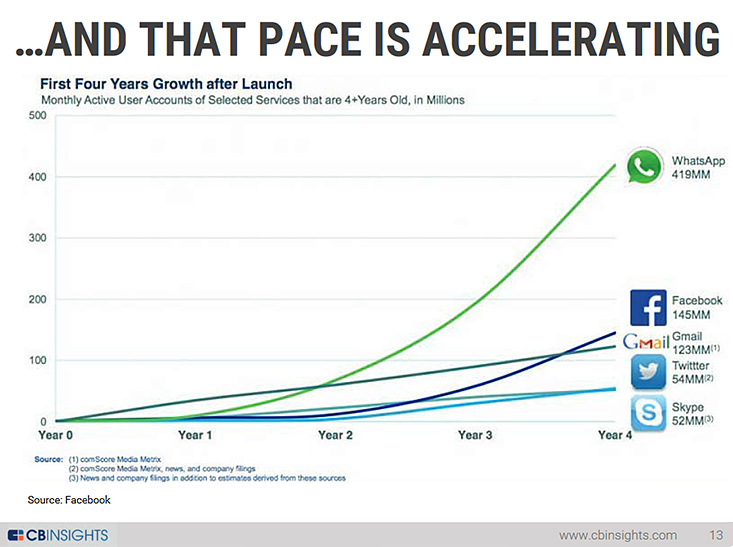

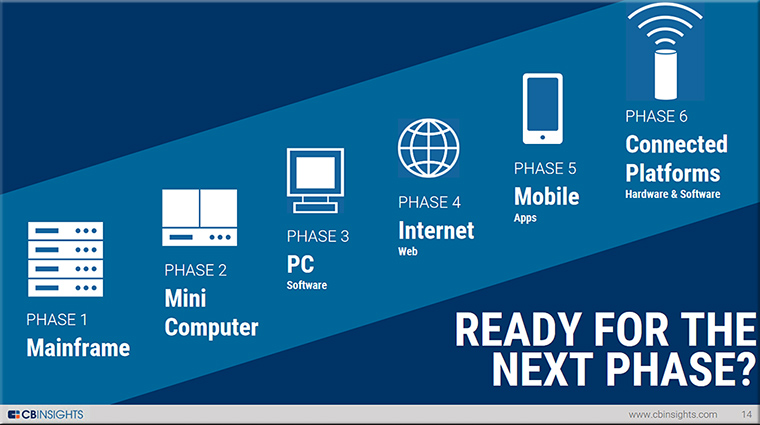

- the accelerating pace of change that’s been occurring over the last decade or more

- the current setup of the legal field within the U.S. — and who can practice law

- the number of emerging technologies now on the landscape

…I think we’ve run out of time to effectively practice law in the U.S. — at least in terms of dealing with emerging technologies. Consider the following items/reflections.

Inside one of the nation’s few hybrid J.D. programs — from highereddive.com by Natalie Schwartz

Shannon Gardner, Syracuse law school’s associate dean for online education, talks about the program’s inaugural graduates and how it has evolved.

Excerpt (emphasis DSC):

In May, Syracuse University’s law school graduated its first class of students earning a Juris Doctor degree through a hybrid program, called JDinteractive, or JDi. The 45 class members were part of almost 200 Syracuse students who received a J.D. this year, according to a university announcement.

The private nonprofit, located in upstate New York, won approval from the American Bar Association in 2018 to offer the three-year hybrid program.

The ABA strictly limits distance education, requiring a waiver for colleges that wish to offer more than one-third of their credits online. To date, the ABA has only approved distance education J.D. programs at about a dozen schools, including Syracuse.

Many folks realize this is the future of legal education — not that it will replace traditional programs. It is one route to pursue a legal education that is here to stay. I did not see it as pressure, and I think, by all accounts, we have definitely proven that it is and can be a success.

Shannon Gardner, associate dean for online education

From DSC:

It was March 2018. I had just started working as a Director of Instructional Services at a law school. I had been involved with online-based learning since 2001.

I was absolutely shocked at how far behind law schools were in terms of offering 100% online-based programs. I was dismayed to find out that, 20+ years after such undergraduate programs had been made available (and their effectiveness had been proven time and again), there were no 100%-online Juris Doctor (JD) programs in the U.S. (The JD is the degree you need in order to practice law in the U.S. Some folks go on to take further courses after obtaining that degree; that's when Master of Laws (LLM) programs kick in.)

Why was this, I asked? Much of the answer lies with the extremely tight control exercised by the American Bar Association (ABA). The ABA essentially lays down the rules for how much of a law student's training can be online (normally no more than a third of one's credit hours, by the way).

Did I mention that it's 2022? And let me say the name of that organization again: the American Bar Association (ABA).

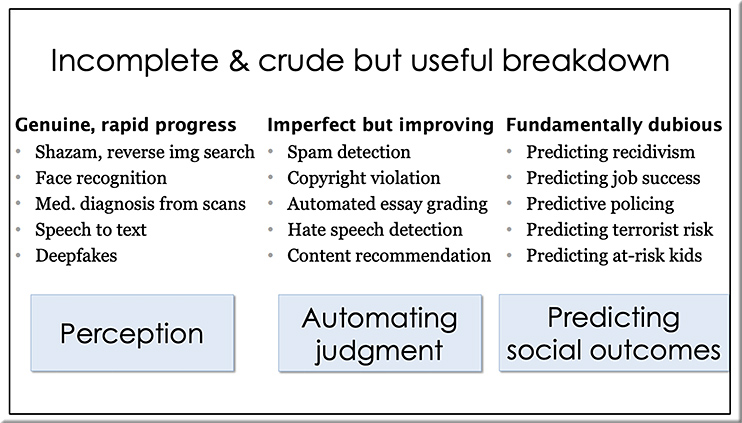

Graphic by Daniel S. Christian

Not to scare you (too much), but this is the organization that is supposed to be in charge of developing lawyers who are already having to deal with issues and legal concerns arising from the following technologies:

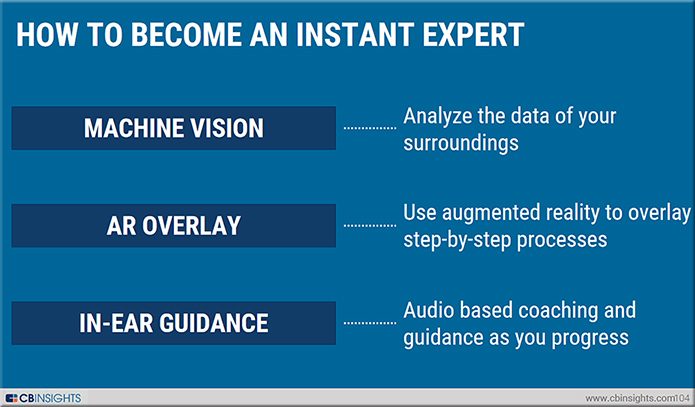

- Artificial Intelligence (AI) — Machine Learning (ML), Natural Language Processing (NLP), algorithms, bots, and the like

- The Internet of Things (IoT) and/or the Internet of Everything (IoE)

- Extended Reality (XR) — Augmented Reality (AR), Mixed Reality (MR), Virtual Reality (VR)

- Holographic communications

- Big data

- High-end robotics

- The Metaverse

- Cryptocurrencies

- NFTs

- Web3

- Blockchain

- …and the like

I don't think there's enough time for the ABA, and then law schools, to reinvent themselves. We no longer have that luxury. (And most practicing lawyers don't have the time to climb the steep learning curves involved here on top of their current responsibilities.)

The other option is to use teams of specialists. That's our best hope. If the use of so-called nonlawyers* doesn't increase greatly, the U.S. has little hope of dealing with the legal matters that are already arising from such emerging technologies.

So let’s hope the legal field catches up with the pace of change that’s been accelerating for years now. If not, we’re in trouble.

* Nonlawyers — not a very complimentary term…

I hope the legal field comes up with something else.

Some use the term paralegals.

I’m sure there are other terms as well.

From DSC:

There is hope, though: Gabe Teninbaum just posted the resource below (out on Twitter). I just think the ABA's lack of responsiveness has caught up with us. We've run out of time for doing "business as usual."

Law students want more distance education classes, according to ABA findings — from abajournal.com by Stephanie Francis Ward

Excerpt:

A recent survey of 1,394 students in their third year of law school found that 68.65% wanted the ability to earn more distance education credits than what their schools offered.

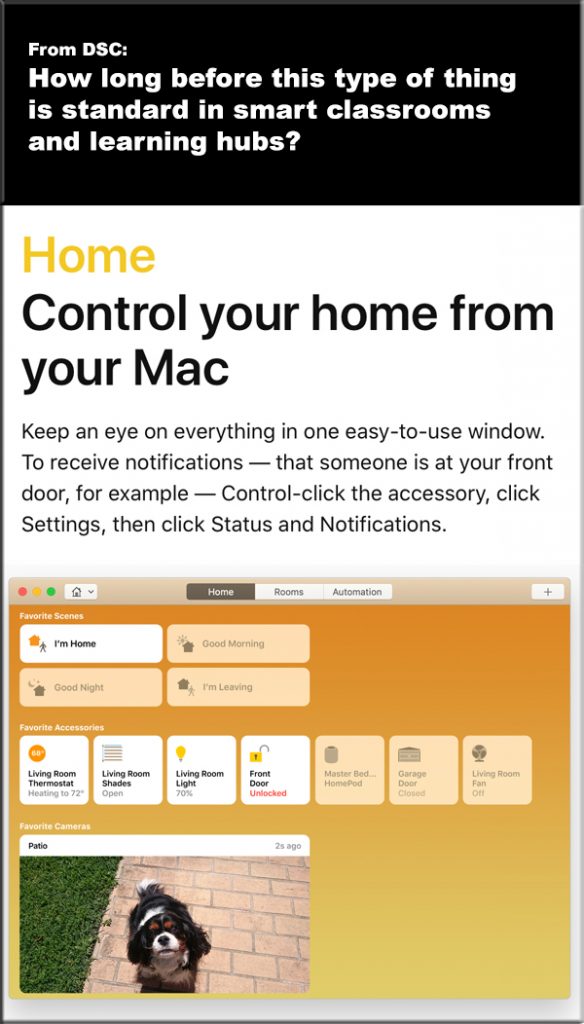

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)