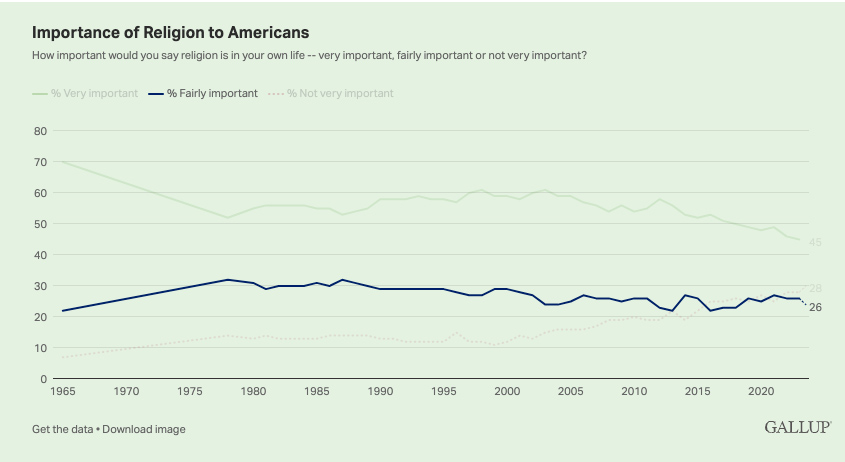

DC: I’m really hoping that a variety of AI-based tools, technologies, and services will significantly help with our Access to Justice (#A2J) issues here in America. So this article by Kristen Sonday at Thomson Reuters caught my eye.

***

AI for Legal Aid: How to empower clients in need — from thomsonreuters.com by Kristen Sonday

In this second part of the series, we look at how AI-driven technologies can empower the legal aid clients who may be most in need.

It’s hard to overstate the impact that artificial intelligence (AI) is expected to have on helping low-income individuals achieve better access to justice. For the legal services organizations (LSOs) that serve on the front lines, too often without sufficient funding, staff, or technology, AI presents perhaps their best opportunity to close the justice gap. Because AI-driven tools can streamline agency operations, minimize administrative work, reallocate talent more effectively, and help LSOs serve clients better, implementing these tools is essential.

Innovative LSOs leading the way

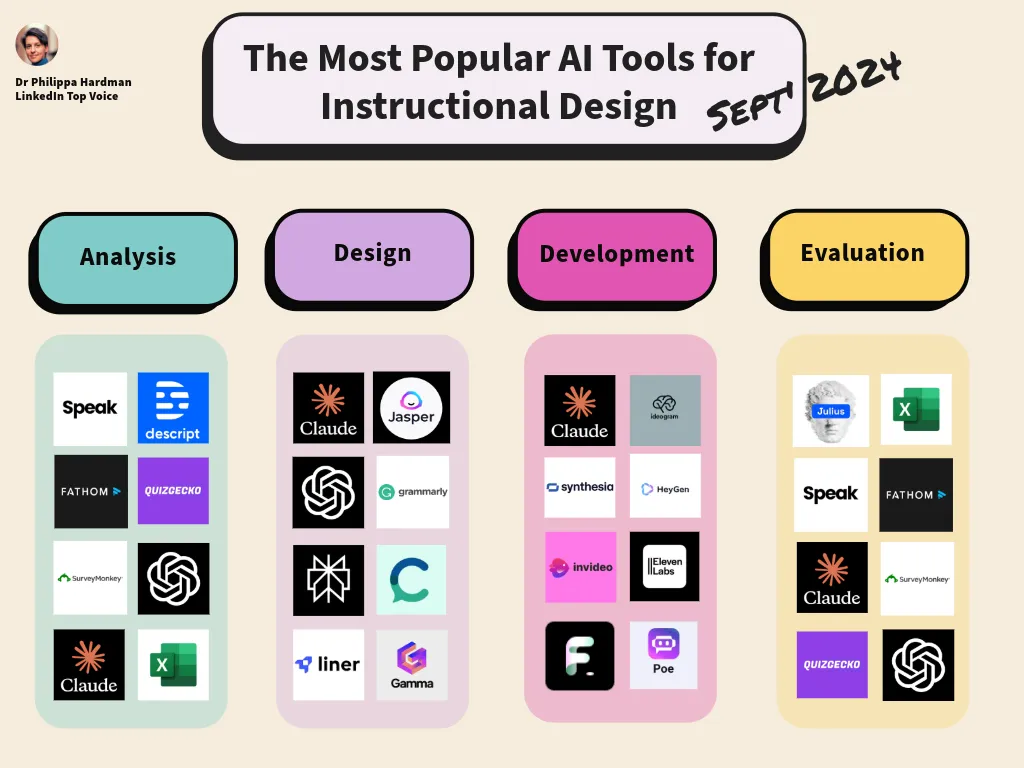

Many innovative LSOs are already taking the lead, using new technology for tasks ranging from complex analysis to AI-driven legal research. Here are two compelling examples of how AI is already helping LSOs empower low-income clients in need.

#A2J #justice #tools #vendors #society #legal #lawfirms #AI #legaltech #legalresearch

Criminal charges, even those that are eligible for simple, free expungement, can prevent someone from obtaining housing or employment. This barrier is simple to overcome, but only if help is available.

…

AI can provide quick, accurate information to a vast audience, particularly to those in urgent need. AI can also help reduce the burden on our legal staff…

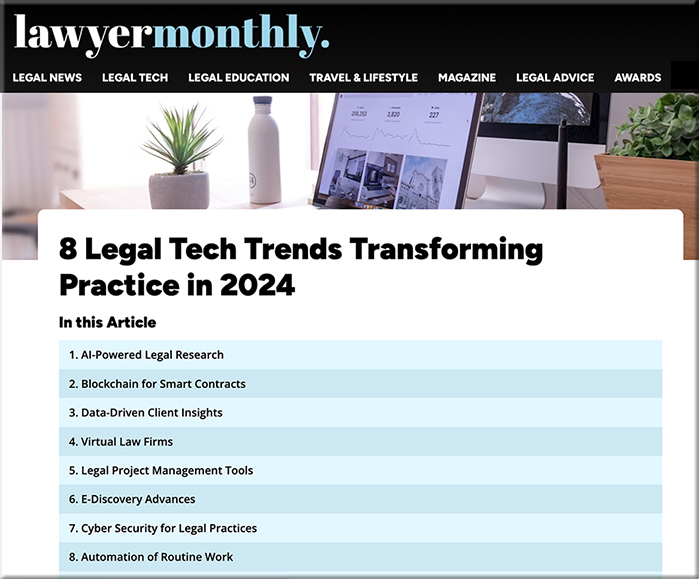

A legal tech executive explains how AI will fully change the way lawyers work — from legaldive.com by Justin Bachman

A senior executive with ContractPodAi discusses how legal AI offers economic benefits for in-house departments and poses disruption risks to law firm billing models.

Everything you thought you knew about being a lawyer is about to change.

Legal Dive spoke with Podinic, the ContractPodAi executive, about the transformative nature of AI, including the financial risks to lawyers’ billing models. They also discussed how AI will force general counsel and chief legal officers to decide how the lawyers on their teams should use the time AI is expected to free up once they no longer have to handle administrative tasks and low-level work.

Legaltech will augment lawyers’ capabilities but not replace them, says GlobalData — from globaldata.com

- Traditionally, law firms have been wary of adopting technologies that could compromise data privacy and legal accuracy; however, attitudes are changing

- Despite concerns about technology replacing humans in the legal sector, legaltech is more likely to augment the legal profession than replace it entirely

- Generative AI will accelerate digital transformation in the legal sector