Speaking of AI-related items, also see:

OpenAI debuts Whisper API for speech-to-text transcription and translation — from techcrunch.com by Kyle Wiggers

Excerpt:

To coincide with the rollout of the ChatGPT API, OpenAI today launched the Whisper API, a hosted version of the open source Whisper speech-to-text model that the company released in September.

Priced at $0.006 per minute, Whisper is an automatic speech recognition system that OpenAI claims enables “robust” transcription in multiple languages as well as translation from those languages into English. It takes files in a variety of formats, including M4A, MP3, MP4, MPEG, MPGA, WAV and WEBM.
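For readers budgeting a transcription project, the quoted rate and format list can be turned into a quick pre-flight check. This is a minimal sketch under stated assumptions. The price and extensions are copied from the excerpt above (March 2023) and may have changed since. Cost is assumed to scale proportionally with duration, since the excerpt does not say how partial minutes are billed. `is_supported` and `estimate_cost` are hypothetical helpers and are not part of OpenAI's API.

```python
from pathlib import Path

# Assumption: price and formats as quoted in the TechCrunch excerpt (March 2023);
# current pricing and accepted formats may differ.
PRICE_PER_MINUTE_USD = 0.006
SUPPORTED_EXTENSIONS = {".m4a", ".mp3", ".mp4", ".mpeg", ".mpga", ".wav", ".webm"}


def is_supported(filename: str) -> bool:
    """Hypothetical pre-flight check: compare the file extension to the quoted list."""
    return Path(filename).suffix.lower() in SUPPORTED_EXTENSIONS


def estimate_cost(duration_seconds: float) -> float:
    """Estimate cost in USD, assuming billing scales linearly with duration."""
    return round(duration_seconds / 60 * PRICE_PER_MINUTE_USD, 4)


if __name__ == "__main__":
    # A one-hour lecture recording: 60 minutes at $0.006 per minute
    print(is_supported("lecture.MP3"), estimate_cost(3600))
```

At the quoted rate, an hour of recorded lecture comes to about 36 cents.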

Introducing ChatGPT and Whisper APIs — from openai.com

Developers can now integrate ChatGPT and Whisper models into their apps and products through our API.

Excerpt:

ChatGPT and Whisper models are now available on our API, giving developers access to cutting-edge language (not just chat!) and speech-to-text capabilities.

Everything you wanted to know about AI – but were afraid to ask — from theguardian.com by Dan Milmo and Alex Hern

From chatbots to deepfakes, here is the lowdown on the current state of artificial intelligence

Excerpt:

Barely a day goes by without some new story about AI, or artificial intelligence. The excitement about it is palpable – the possibilities, some say, are endless. Fears about it are spreading fast, too.

There can be much assumed knowledge and understanding about AI, which can be bewildering for people who have not followed every twist and turn of the debate.

So, the Guardian’s technology editors, Dan Milmo and Alex Hern, are going back to basics – answering the questions that millions of readers may have been too afraid to ask.

Nvidia CEO: “We’re going to accelerate AI by another million times” — from

In a recent earnings call, the boss of Nvidia Corporation, Jensen Huang, outlined his company’s achievements over the last 10 years and predicted what might be possible in the next decade.

Excerpt:

Fast forward to today, and CEO Jensen Huang is optimistic that the recent momentum in AI can be sustained into at least the next decade. During the company’s latest earnings call, he explained that Nvidia’s GPUs had boosted AI processing by a factor of one million in the last 10 years.

“Moore’s Law, in its best days, would have delivered 100x in a decade. By coming up with new processors, new systems, new interconnects, new frameworks and algorithms and working with data scientists, AI researchers on new models – across that entire span – we’ve made large language model processing a million times faster,” Huang said.
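To put Huang's two figures on the same footing, it helps to convert each ten-year gain into an annual rate and a doubling time. This is a back-of-the-envelope calculation that takes his numbers at face value (100x per decade for Moore's Law and 1,000,000x for Nvidia's AI processing) and assumes steady compound growth across the decade:

```latex
\text{Annual factor} = G^{1/10}, \qquad
T_{\text{double}} = 10 \cdot \frac{\log_{10} 2}{\log_{10} G}\ \text{years}

\text{Moore's Law } (G = 10^{2}):\quad 10^{0.2} \approx 1.58\times \text{ per year}, \quad
T_{\text{double}} \approx 1.5 \text{ years}

\text{Nvidia's claim } (G = 10^{6}):\quad 10^{0.6} \approx 3.98\times \text{ per year}, \quad
T_{\text{double}} \approx 0.5 \text{ years}

\text{Gap over the decade: } 10^{6} / 10^{2} = 10^{4} = 10{,}000\times
```

In other words, the claim amounts to performance roughly quadrupling every year, or doubling about every six months, compared with doubling about every 18 months under Moore's Law.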

From DSC:

NVIDIA invented the Graphics Processing Unit (GPU), which renders interactive graphics on laptops, workstations, mobile devices, PCs, and more. The company is a dominant supplier of artificial intelligence hardware and software.

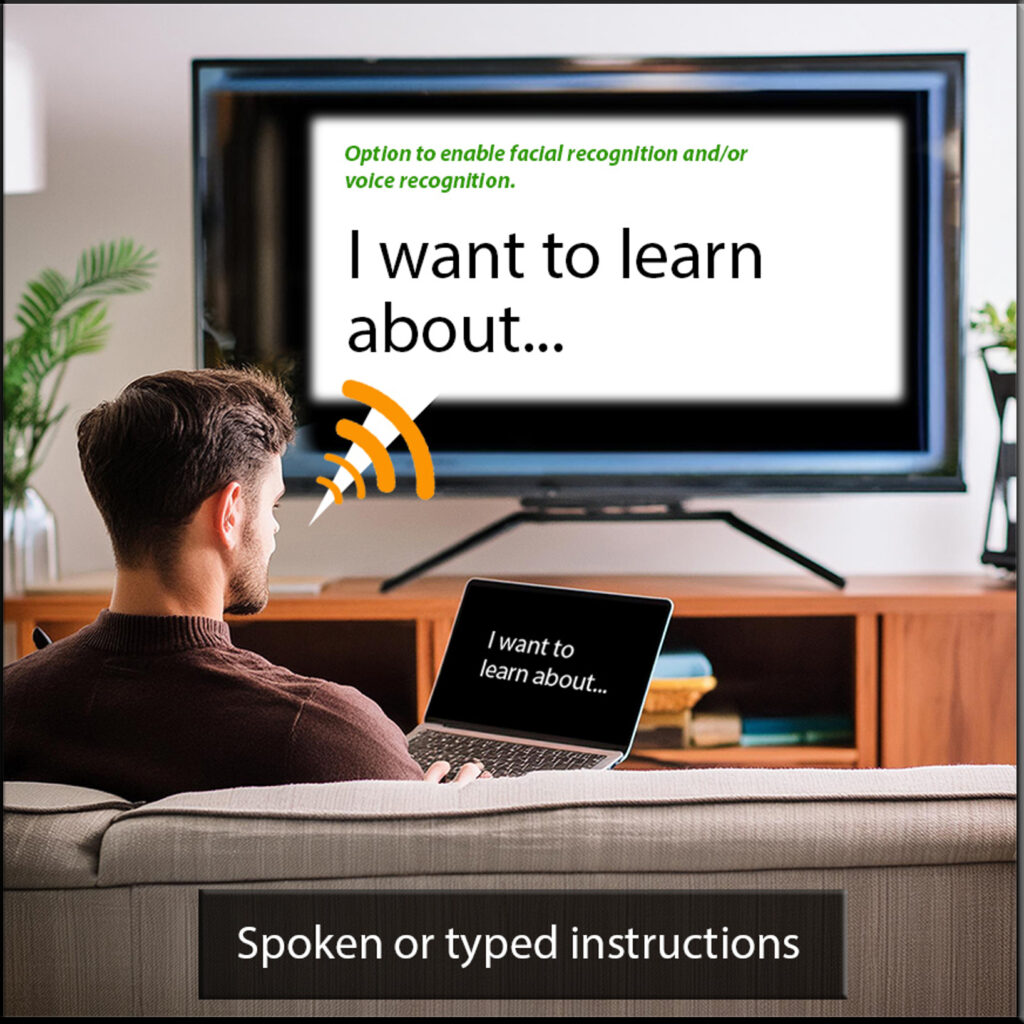

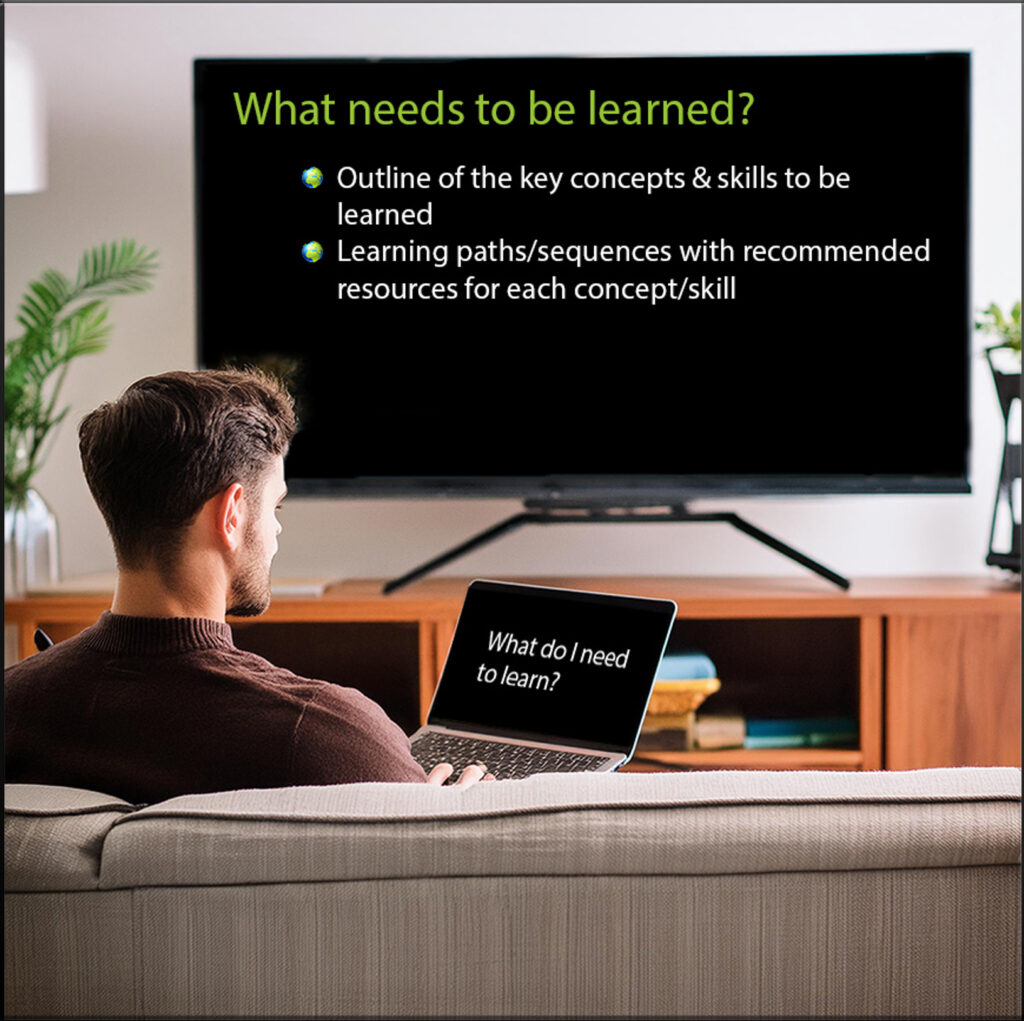

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)