How Learning Designers Are Using AI for Analysis — from drphilippahardman.substack.com by Dr. Philippa Hardman

A practical guide on how to 10X your analysis process using free AI tools, based on real use cases

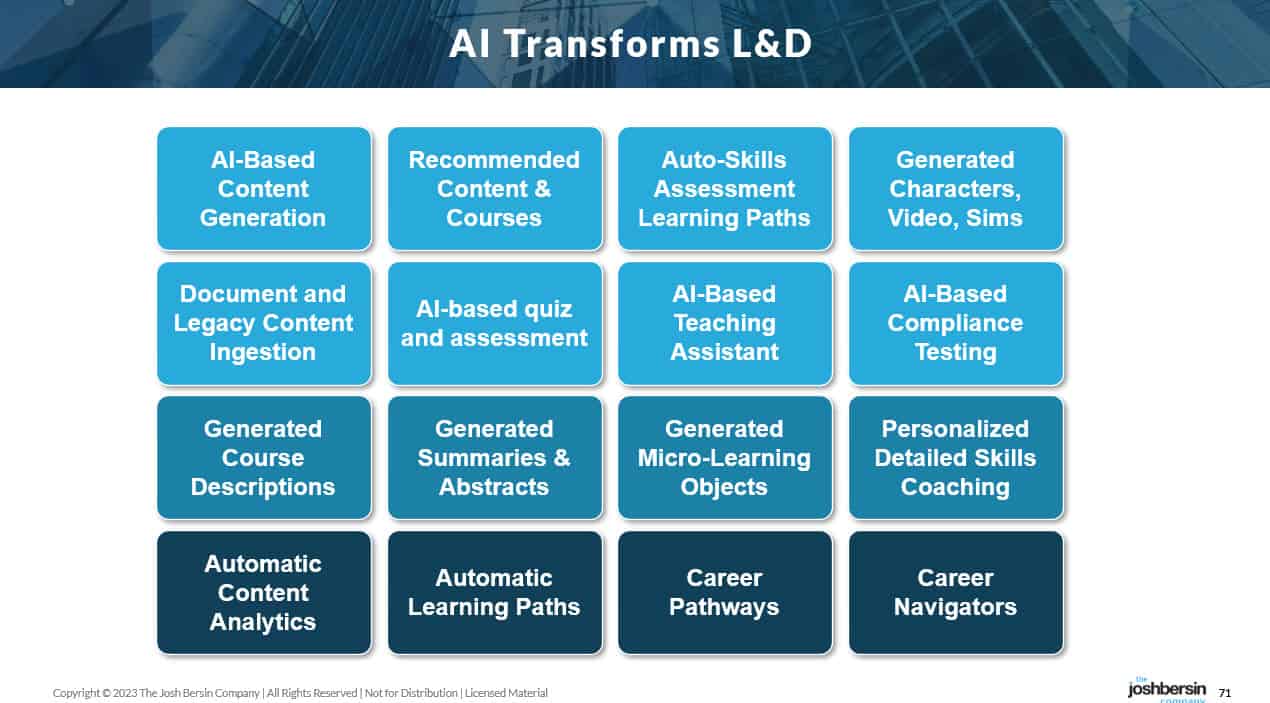

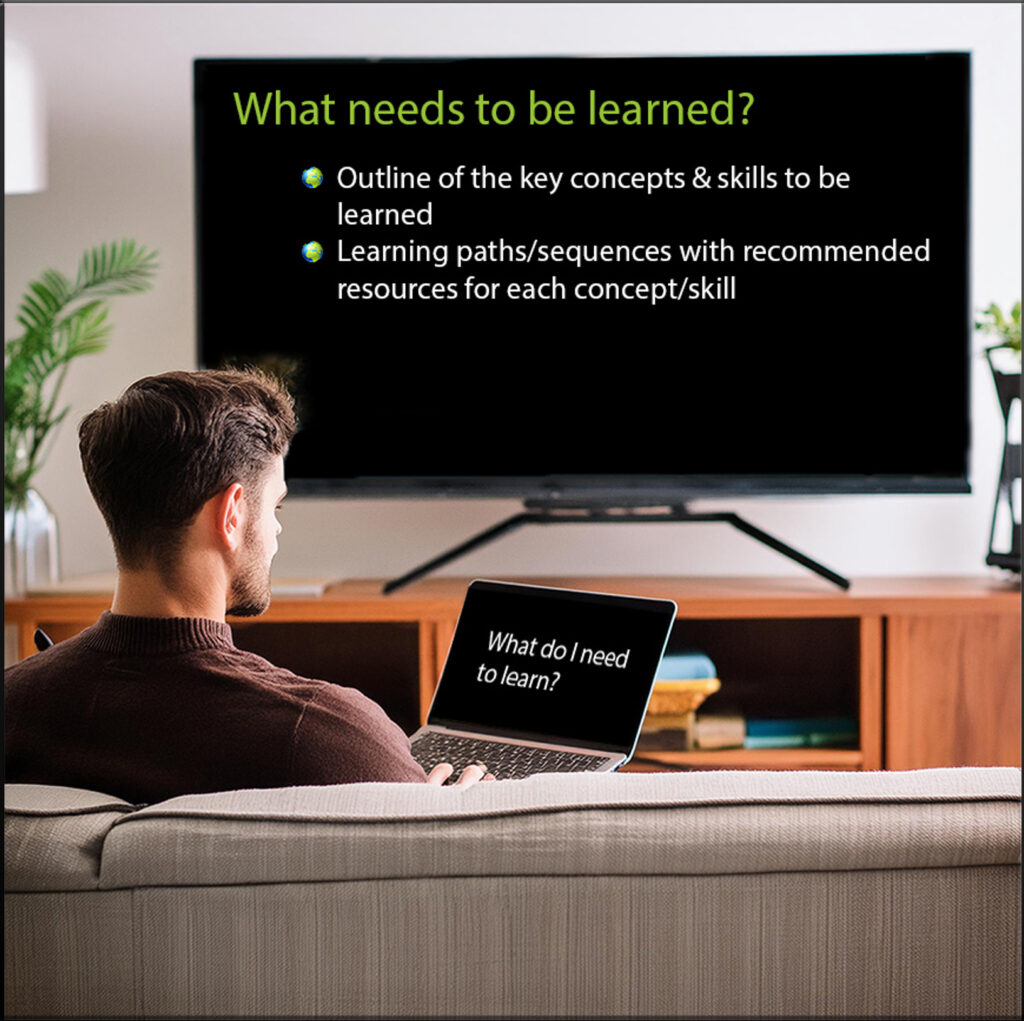

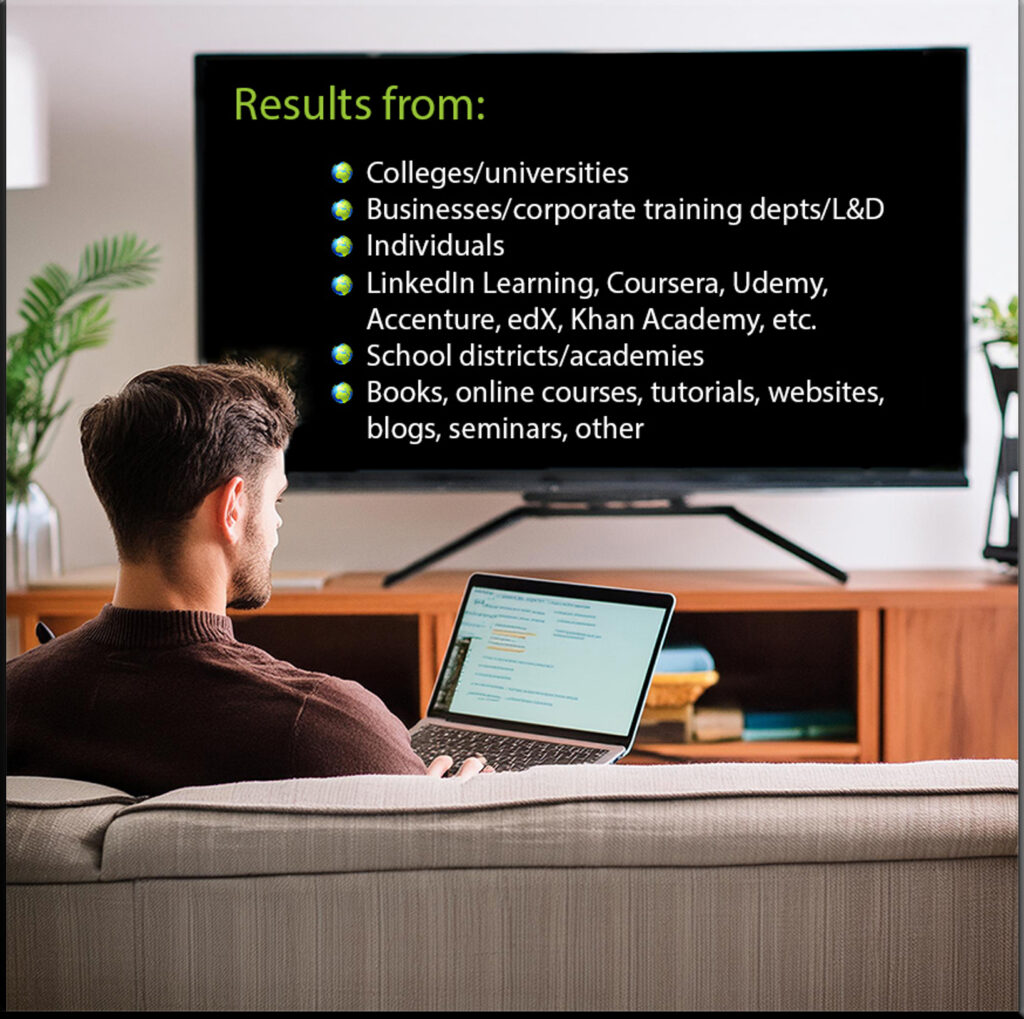

There are three key areas where AI tools make a significant impact on how we tackle the analysis part of the learning design process:

- Understanding the why: what is the problem this learning experience solves? What’s the change we want to see as a result?

- Defining the who: who do we need to target in order to solve the problem and achieve the intended goal?

- Clarifying the what: given who our learners are and the goal we want to achieve, what concepts and skills do we need to teach?

PROOF POINTS: Teens are looking to AI for information and answers, two surveys show — from hechingerreport.org by Jill Barshay

Rapidly evolving usage patterns show Black, Hispanic and Asian American youth are often quick to adopt the new technology

Two new surveys, both released this month, show how high school and college-age students are embracing artificial intelligence. There are some inconsistencies and many unanswered questions, but what stands out is how much teens are turning to AI for information and to ask questions, not just to do their homework for them. And they’re using it for personal reasons as well as for school. Another big takeaway is that there are different patterns by race and ethnicity with Black, Hispanic and Asian American students often adopting AI faster than white students.

AI Instructional Design Must Be More Than a Time Saver — from marcwatkins.substack.com by Marc Watkins

We’ve ceded so much trust to digital systems already that most simply assume a tool is safe to use with students because a company published it. We don’t check to see if it is compliant with any existing regulations. We don’t ask what powers it. We do not question what happens to our data or our student’s data once we upload it. We likewise don’t know where its information came from or how it came to generate human-like responses. The trust we put into these systems is entirely unearned and uncritical.

…

The allure of these AI tools for teachers is understandable—who doesn’t want to save time on the laborious process of designing lesson plans and materials? But we have to ask ourselves what is lost when we cede the instructional design process to an automated system without critical scrutiny.

From DSC:

I post this to be a balanced publisher of information. I don’t agree with everything Marc says here, but he brings up several solid points.

What does Disruptive Innovation Theory have to say about AI? — from christenseninstitute.org by Michael B. Horn

As news about generative artificial intelligence (GenAI) continually splashes across social media feeds, including how ChatGPT 4o can help you play Rock, Paper, Scissors with a friend, breathtaking pronouncements about GenAI’s “disruptive” impact aren’t hard to find.

It turns out that it doesn’t make much sense to talk about GenAI as being “disruptive” in and of itself.

Can it be part of a disruptive innovation? You bet.

But much more important than just the AI technology in determining whether something is disruptive is the business model in which the AI is used—and its competitive impact on existing products and services in different markets.

On a somewhat related note, also see:

National summit explores how digital education can promote deeper learning — from digitaleducation.stanford.edu by Jenny Robinson; via Eric Kunnen on Linkedin.com

The conference, held at Stanford, was organized to help universities imagine how digital innovation can expand their reach, improve learning, and better serve the public good.

The summit was organized around several key questions: “What might learning design, learning technologies, and educational media look like in three, five, or ten years at our institutions? How will blended and digital education be poised to advance equitable, just, and accessible education systems and contribute to the public good? What structures will we need in place for our teams and offices?”
