From DSC:

I was watching a sermon the other day, and I’m always amazed when the pastor doesn’t need to read their notes (or hardly ever refers to them). And they can still do this in a much longer sermon too. Not me, man.

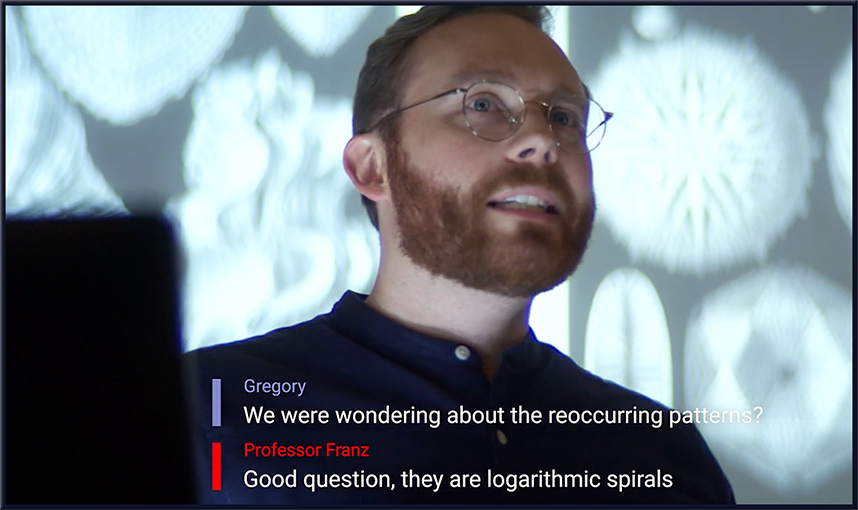

It got me wondering about the idea of having a teleprompter on our future Augmented Reality (AR) glasses and/or on our Virtual Reality (VR) headsets. Or perhaps such functionality will be provided on our mobile devices as well (e.g., our smartphones, tablets, and laptops) via cloud-based applications.

One could see one’s presentation, sermon, main points for the meeting, what charges are being brought against the defendant, etc., and the system would know to scroll down as you said the words (via Natural Language Processing (NLP)). If you went off script, the system would stop scrolling, and you might need to scroll down manually or just pick up where you left off.
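For anyone who wants to tinker, here is a rough sketch of how that scroll-as-you-speak behavior might work. It assumes a speech recognizer is already handing us the spoken words as plain text (that part is not shown), and every name in it (`ScriptFollower`, `hear`, `window`, `max_misses`) is made up for illustration. It is a simple word-matching approach, not how any real teleprompter product necessarily does it.

```python
import re


def normalize(text: str) -> list[str]:
    """Lowercase the text and split it into words, dropping punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())


class ScriptFollower:
    """Hypothetical helper that tracks how far the speaker is in a script."""

    def __init__(self, script: str, window: int = 4, max_misses: int = 3):
        self.words = normalize(script)
        self.window = window          # how many upcoming words to search
        self.max_misses = max_misses  # unmatched words before we call it off script
        self.position = 0             # index of the next expected script word
        self.misses = 0               # consecutive spoken words that did not match

    def hear(self, spoken: str) -> int:
        """Feed recognized speech; return the updated script position."""
        for word in normalize(spoken):
            ahead = self.words[self.position:self.position + self.window]
            if word in ahead:
                # Jump past the matched word, tolerating a few skipped words.
                self.position += ahead.index(word) + 1
                self.misses = 0
            else:
                # Position stays put, so the display would stop scrolling.
                self.misses += 1
        return self.position

    @property
    def off_script(self) -> bool:
        """True once enough consecutive spoken words failed to match."""
        return self.misses >= self.max_misses


follower = ScriptFollower(
    "Good morning everyone. Today we will talk about hope and patience."
)
follower.hear("Good morning everyone")
follower.hear("by the way, did anyone see the game")  # an ad-lib
print(follower.position, follower.off_script)
follower.hear("today we will talk")                    # back on script
print(follower.position, follower.off_script)
```

The small look-ahead window is what lets the speaker skip or slur a word without losing their place, and because the position never moves on unmatched words, "just begin where you left off" works without any manual scrolling.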

For that matter, I suppose a faculty member could toggle an AI-based feed that shows where the current topic appears in the textbook. Or a CEO or University President could get prompted to refer to a particular section of the Strategic Plan. Hmmm…I don’t know…it might be too much cognitive load/overload…I’d have to try it out.

And/or perhaps this is a feature in our future videoconferencing applications.

But I just wanted to throw these ideas out there in case someone wanted to run with one or more of them.
