Hello GPT-4o — from openai.com

We’re announcing GPT-4o, our new flagship model that can reason across audio, vision, and text in real time.

GPT-4o (“o” for “omni”) is a step towards much more natural human-computer interaction—it accepts as input any combination of text, audio, image, and video and generates any combination of text, audio, and image outputs. It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation. It matches GPT-4 Turbo performance on text in English and code, with significant improvement on text in non-English languages, while also being much faster and 50% cheaper in the API. GPT-4o is especially better at vision and audio understanding compared to existing models.

Example topics covered here:

- Two GPT-4os interacting and singing

- Languages/translation

- Personalized math tutor

- Meeting AI

- Harmonizing and creating music

- Providing inflection, emotions, and a human-like voice

- Understanding what the camera is looking at and integrating it into the AI’s responses

- Providing customer service

With GPT-4o, we trained a single new model end-to-end across text, vision, and audio, meaning that all inputs and outputs are processed by the same neural network. Because GPT-4o is our first model combining all of these modalities, we are still just scratching the surface of exploring what the model can do and its limitations.

This demo is insane.

A student shares their iPad screen with the new ChatGPT + GPT-4o, and the AI speaks with them and helps them learn in *realtime*.

Imagine giving this to every student in the world.

The future is so, so bright. pic.twitter.com/t14M4fDjwV

— Mckay Wrigley (@mckaywrigley) May 13, 2024

From DSC:

I like the assistive tech angle here:

GPT-4o as tested by @BeMyEyes: pic.twitter.com/WeAoVmxUFH

— Greg Brockman (@gdb) May 14, 2024

It’s been less than 24 hours since OpenAI changed the world with the GPT-4o announcement.

And the Internet is flooded with demo videos.

Here’re the 10 most jaw-dropping examples so far (Don’t miss the 6th one) pic.twitter.com/sLx1D1YSqb

— Poonam Soni (@CodeByPoonam) May 14, 2024

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)

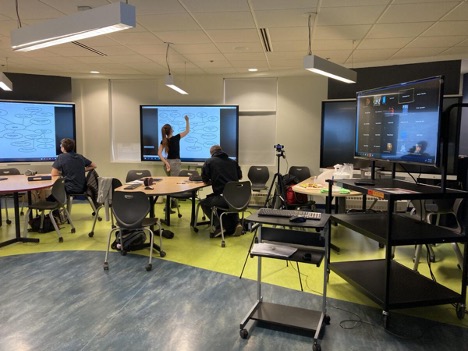

Podcasting studio at FUSE Workspace in Houston, TX.
