How Generative AI will change what lawyers do — from jordanfurlong.substack.com by Jordan Furlong

As we enter the Age of Accessible Law, a wave of new demand is coming our way — but AI will meet most of the surge. What will be left for lawyers? Just the most valuable and irreplaceable role in law.

AI can already provide actionable professional advice; within the next ten years, if it takes that long, I believe it will offer acceptable legal advice. No one really wants “AI courts,” but soon enough, we’ll have AI-enabled mediation and arbitration, which will have a much greater impact on everyday dispute resolution.

I think it’s dangerous to assume that AI will never be able to do something that lawyers now do. “Never” is a very long time. And AI doesn’t need to replicate the complete arsenal of the most gifted lawyer out there. If a Legal AI can replicate 80% of what a middling lawyer can do, for 10% of the cost, in 1% of the time, that’s all the revolution you’ll need.

From DSC:

It is my sincere hope that AI will open up the floodgates to FAR greater Access to Justice (A2J) in the future.

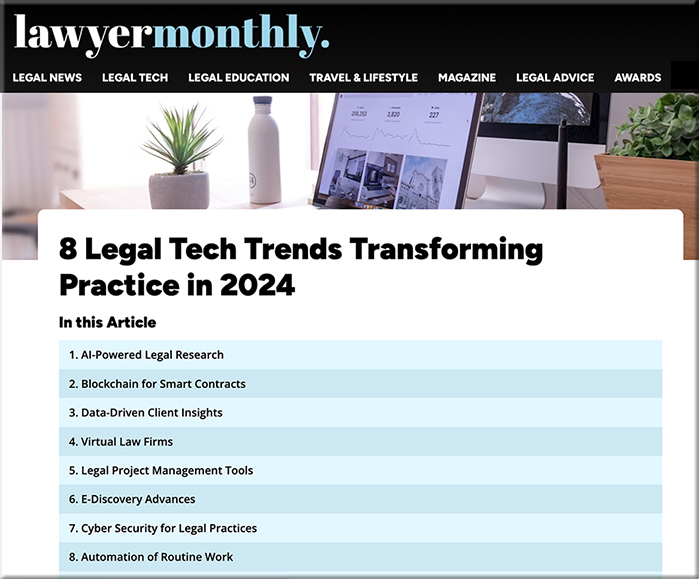

It’s the Battle of the AI Legal Assistants, As LexisNexis Unveils Its New Protégé and Thomson Reuters Rolls Out CoCounsel 2.0 — from lawnext.com by Bob Ambrogi

It’s not quite BattleBots, but competitors LexisNexis and Thomson Reuters both made significant announcements today involving the development of generative AI legal assistants within their products.

Thomson Reuters, which last year acquired the CoCounsel legal assistant originally developed by Casetext, and which later announced plans to deploy it throughout its product lines, today unveiled what it says is the “supercharged” CoCounsel 2.0.

Meanwhile, LexisNexis said today it is rolling out the commercial preview version of its Protégé Legal AI Assistant, which it describes as a “substantial leap forward in personalized generative AI that will transform legal work.” It is part of the launch of the third generation of Lexis+ AI, the AI-driven legal research platform the company launched last year.

Thomson Reuters Launches CoCounsel 2.0 — from abovethelaw.com by Joe Patrice

New release promises results three times faster than the last version.

It seems like just last year we were talking about CoCounsel 1.0, the generative AI product launched by Casetext and then swiftly acquired by Thomson Reuters. That’s because it was just last year. Since then, Thomson Reuters has worked to marry Casetext’s tool with TR’s treasure trove of data.

It’s not an easy task. A lot of the legal AI conversation glosses over how constructing these tools requires a radical confrontation with the lawyers’ mind. Why do attorneys do what they do every day? Are there seemingly “inefficient” steps that actually serve a purpose? Does an AI “answer” advance the workflow or hinder the research alchemy? As recently as April, Thomson Reuters was busy hyping the fruits of its efforts to get ahead of these challenges.

Though this next item is not necessarily related to legaltech, it’s still relevant to the legal realm:

A Law Degree Is No Sure Thing — from cew.georgetown.edu

Some Law School Graduates Earn Top Dollar, but Many Do Not

Summary

Is law school worth it? A Juris Doctor (JD) offers high median earnings and a substantial earnings boost relative to a bachelor’s degree in the humanities or social sciences—two of the more common fields of study that lawyers pursue as undergraduate students. However, graduates of most law schools carry substantial student loan debt, which dims the financial returns associated with a JD.

A Law Degree Is No Sure Thing: Some Law School Graduates Earn Top Dollar, but Many Do Not finds that the return on investment (ROI) in earnings and career outcomes varies widely across law schools. The median earnings net of debt payments are $72,000 four years after graduation for all law school graduates, but exceed $200,000 at seven law schools. By comparison, graduates of 33 law schools earn less than $55,000 net of debt payments four years after graduation.

From DSC:

A former boss’s husband was starting up a local public defender’s office in Michigan and needed to hire over two dozen people. The salaries were in the $40Ks, she said. This surprised me greatly, as I thought all lawyers were bringing in the big bucks. Clearly, that is not the case. Many lawyers do not make the big bucks, as this report shows:

…graduates of 33 law schools earn less than $55,000 net of debt payments four years after graduation.


Also relevant/see: