Excerpt from Beyond school choice — from Michael Horn

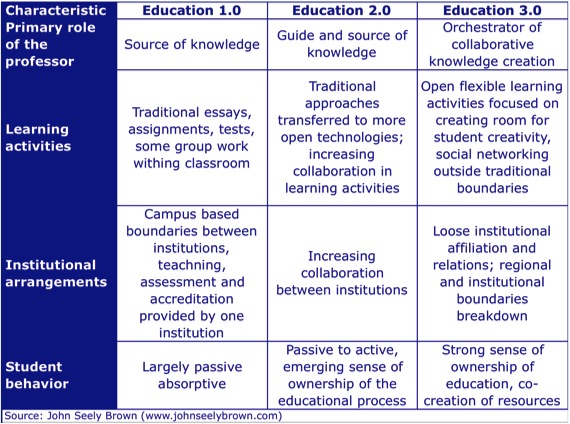

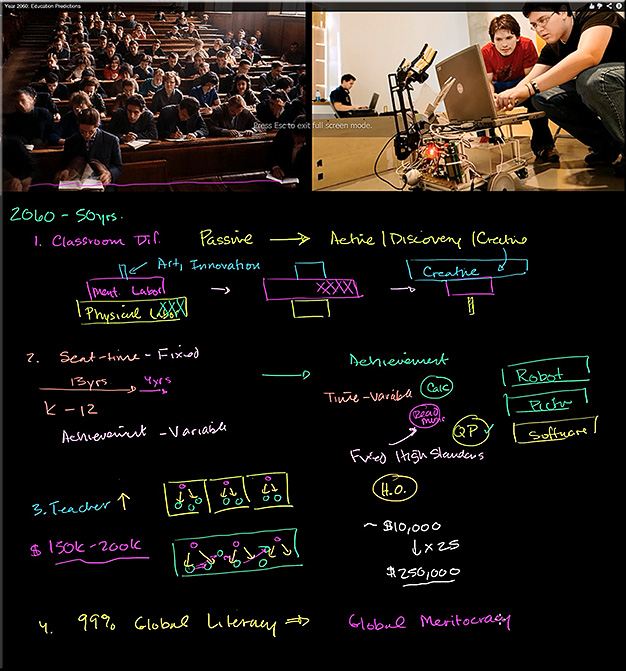

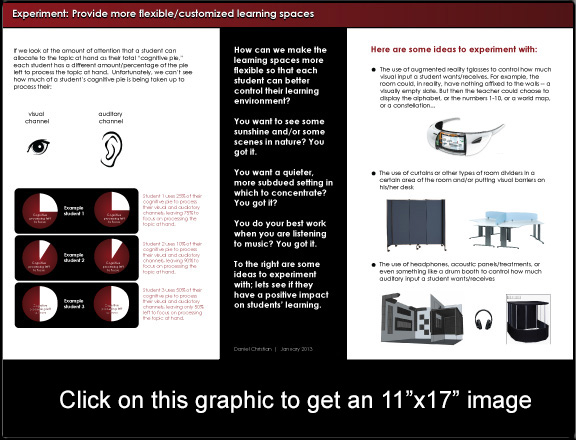

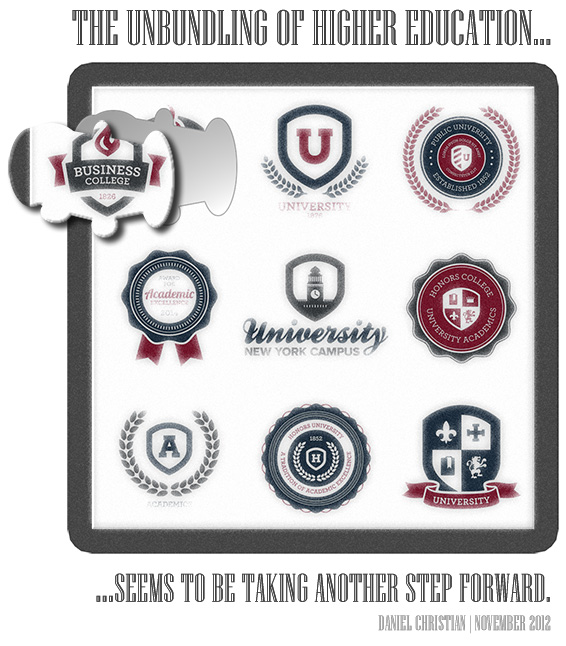

With the rapid growth in online and mobile learning, students everywhere at all levels are increasingly having educational choices—regardless of where they live and even regardless of the policies that regulate schools.

What’s so exciting about this movement beyond school choice is the customization that it allows students to have. Given that each student has different learning needs at different times and different passions and interests, there is likely no school, no matter how great, that can single-handedly cater to all of these needs just by using its own resources contained within the four walls of its classrooms.

…

With the choices available, students increasingly don’t need to make the tradeoff between attending a large school with lots of choices but perhaps lots of anonymity or a small school with limited choices but a deeply developed personal support structure.

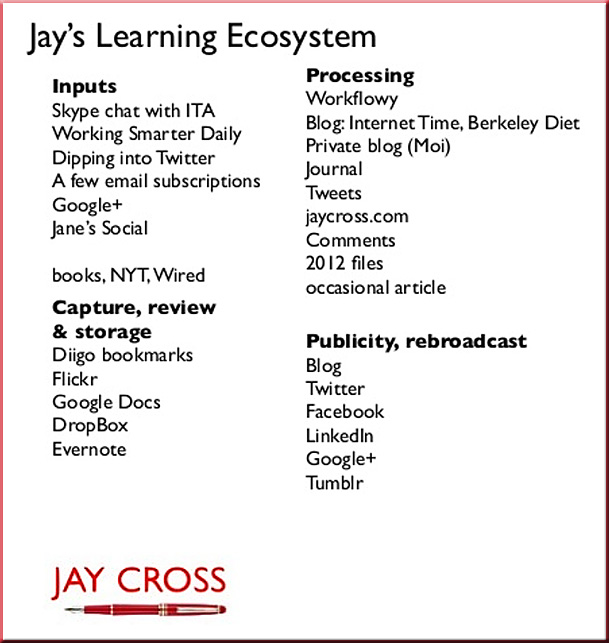

Excerpt from Cooperating in the open — from Harold Jarche

I think one of the problems today is that many online social networks are trying to be communities of practice. But to be a community of practice, there has to be something to practice. One social network, mine, is enough for me. How I manage the connections is also up to me. In some cases I will follow a blogger, in others I will connect via Google Plus or Twitter, but from my perspective it is one network, with varying types of connections. Jumping into someone else’s bounded social network/community only makes sense if I have an objective. If not, I’ll keep cooperating out in the open.

From DSC:

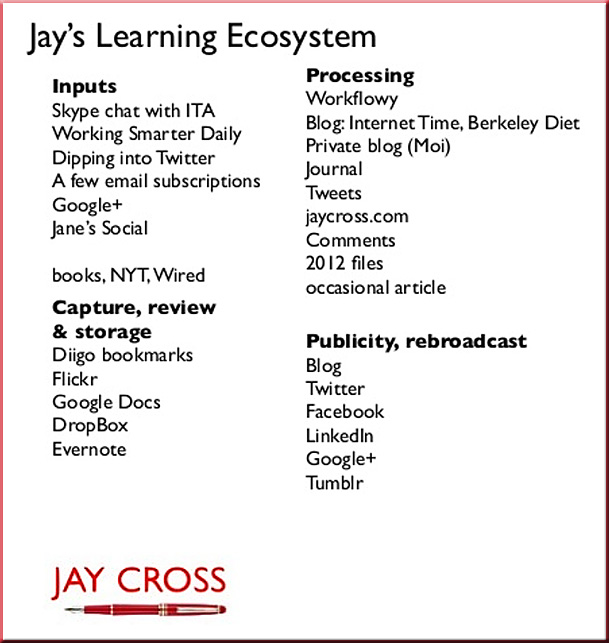

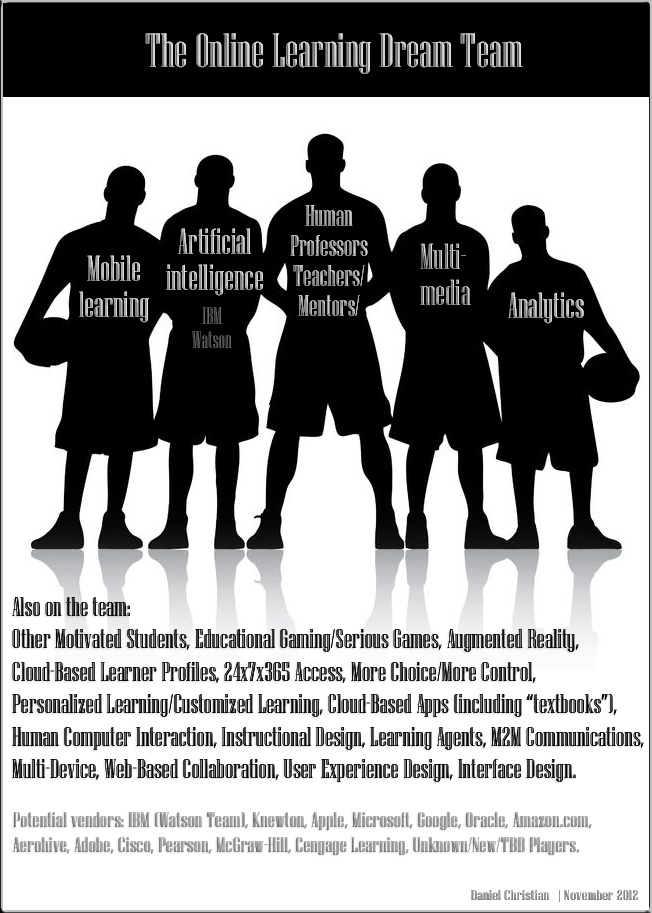

Perhaps helping folks build their own learning ecosystems, based upon their gifts/abilities/passions, should be an objective for teachers, professors, instructional designers, trainers, and consultants alike. Whether we’re talking K-12, higher ed, or corporate training, these ever-changing networks/tools/strategies will help keep us marketable and able to contribute to society in a variety of areas.

Addendum on 2/5/13:
