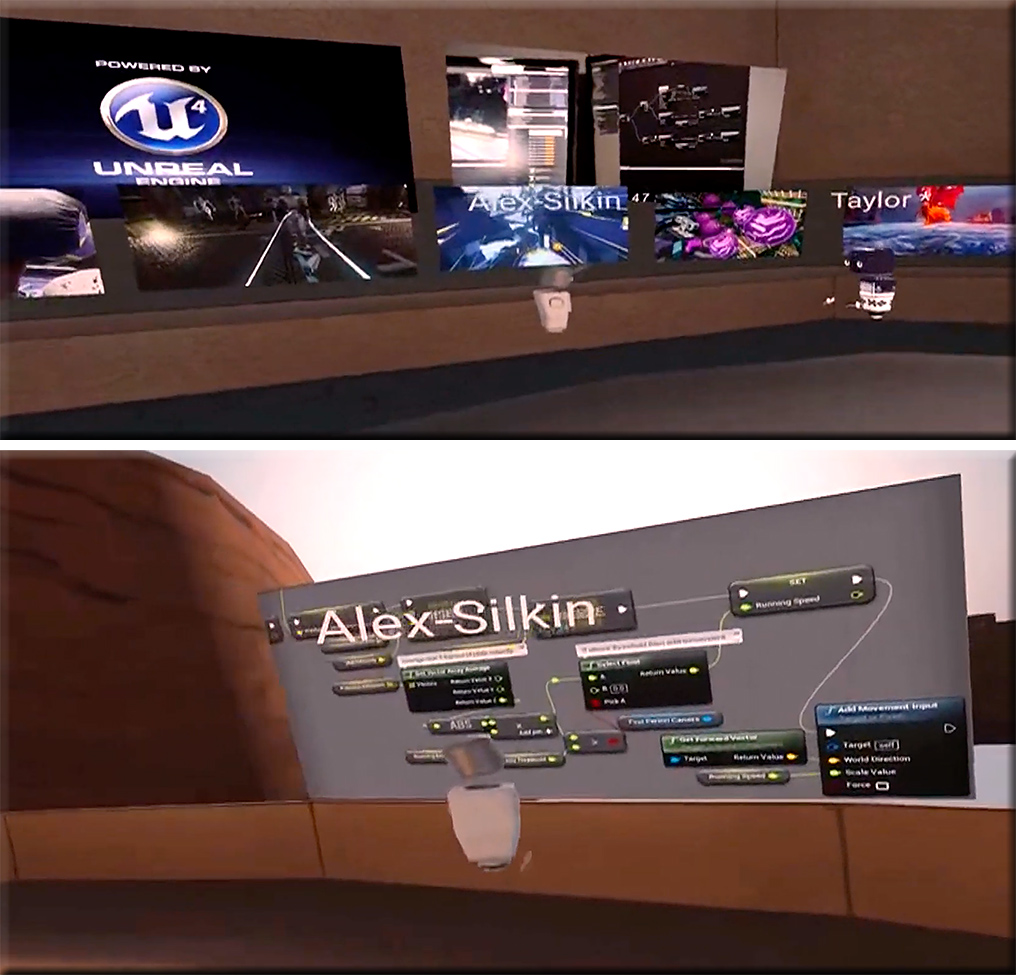

Chemistry lessons could be taught in #virtualreality in the future #VR #MR #AugmentedReality #edtech #tech MT @paula_piccard #MixedReality #ar #xr #cr #innovation #education #teching #chemistry #future #teacher #futureofeducation pic.twitter.com/HLdWUR691v

— Fred Steube (@steube) July 9, 2019

Amy Peck (EndeavorVR) on enterprises’ slow adoption of AR and the promise in education — from thearshow.com by Jason McDowall

Description:

In this conversation, Amy and [Jason McDowall] discuss the viability of the location-based VR market and the potential for AR & VR in childhood education.

We get into the current opportunities and challenges in bringing spatial computing to the enterprise. One of these challenges is the difficulty in explaining a technology that needs to be directly experienced, so much so that Amy now insists C-level executives put on a headset as a first step in the consulting process.

We also talk about VR & AR in healthcare, and the potential impact of blockchain technology.

Fast forward to 29:15 or so for the piece of this podcast that relates to education.

Also see:

Reality Check: The marvel of computer vision technology in today’s camera-based AR systems — from arvrjourney.com by Alex Chuang

How does mobile AR work today and how will it work tomorrow

Excerpt:

AR experiences can seem magical but what exactly is happening behind the curtain? To answer this, we must look at the three basic foundations of a camera-based AR system like our smartphone.

- How do computers know where they are in the world? (Localization + Mapping)

- How do computers understand what the world looks like? (Geometry)

- How do computers understand the world as we do? (Semantics)

5G and the tactile internet: what really is it? — from techradar.com by Catherine Ellis

With 5G, we can go beyond audio and video, communicating through touch

Excerpt:

However, the speed and capacity of 5G also opens up a wealth of new opportunities with other connected devices, including real-time interaction in ways that have never been possible before.

One of the most exciting of these is tactile, or haptic communication – transmitting a physical sense of touch remotely.

Are we there yet? Impactful technologies and the power to influence change — from campustechnology.com by Mary Grush and Ellen Wagner

Excerpt:

Learning analytics, augmented reality, artificial intelligence, and other new and emerging technologies seem poised to change the business of higher education — yet, we often hear comments like “We’re just not there yet…” or “This is a technology that is just too slow to adoption…” or other observations that make it clear that many people — including those with a high level of expertise in education technology — are thinking that the promise is not yet fulfilled. Here, CT talks with veteran education technology leader Ellen Wagner, to ask for her perspectives on the adoption of impactful technologies — in particular the factors in our leadership and development communities that have the power to influence change.