Mark Zuckerberg talks about the purchase of Oculus VR

Excerpt (emphasis DSC):

But this is just the start. After games, we’re going to make Oculus a platform for many other experiences. Imagine enjoying a court side seat at a game, studying in a classroom of students and teachers all over the world or consulting with a doctor face-to-face — just by putting on goggles in your home.

This is really a new communication platform. By feeling truly present, you can share unbounded spaces and experiences with the people in your life. Imagine sharing not just moments with your friends online, but entire experiences and adventures.

These are just some of the potential uses. By working with developers and partners across the industry, together we can build many more. One day, we believe this kind of immersive, augmented reality will become a part of daily life for billions of people.

Virtual reality was once the dream of science fiction. But the internet was also once a dream, and so were computers and smartphones. The future is coming and we have a chance to build it together. I can’t wait to start working with the whole team at Oculus to bring this future to the world, and to unlock new worlds for all of us.

If you like immersion, you’ll love this reality — from nytimes.com by Farhad Manjoo

Excerpt:

Virtual reality is coming, and you’re going to jump into it.

That’s because virtual reality is the natural extension of every major technology we use today — of movies, TV, videoconferencing, the smartphone and the web. It is the ultra-immersive version of all these things, and we’ll use it exactly the same ways — to communicate, to learn, and to entertain ourselves and escape.

…

The only question is when.

Oculus Rift just put Facebook in the movie business — from variety.com by Andrew Wallenstein

Excerpt:

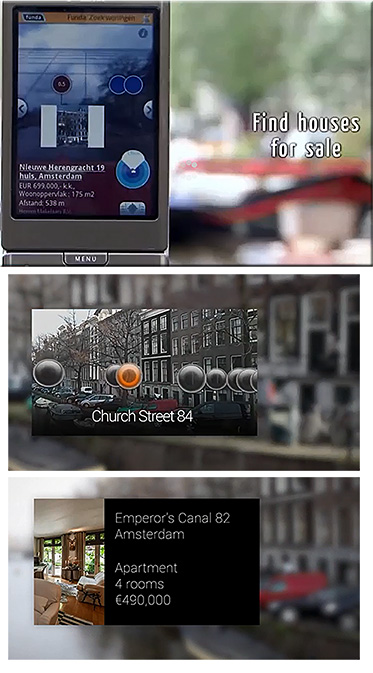

The surprise $2 billion acquisition of virtual-reality headset maker Oculus Rift by a social network company might seem to have nothing to do with movie theaters or films. But in the long term, this kind of technology is going to have a place in the entertainment business. It’s just a matter of time.

While Cinemacon-ites grapple with how best to keep people coming to movie theaters, Oculus Rift will be part of the first wave of innovation capable of bringing an incredible visual environment to people wherever they choose. But this probably won’t require people congregating in theaters for an optimal experience the way 3D does.

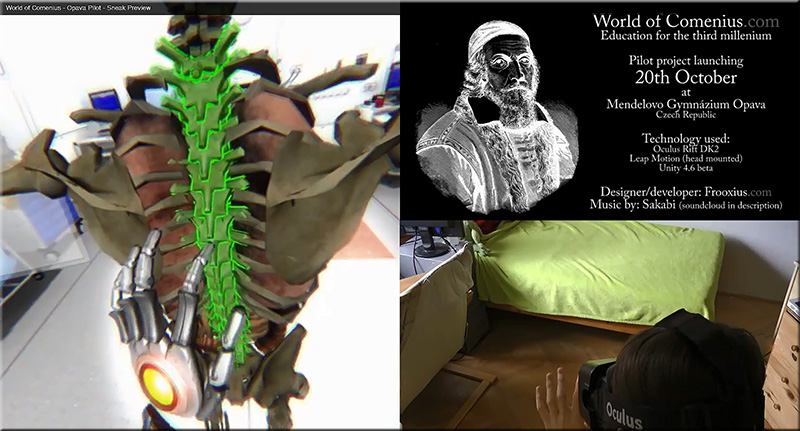

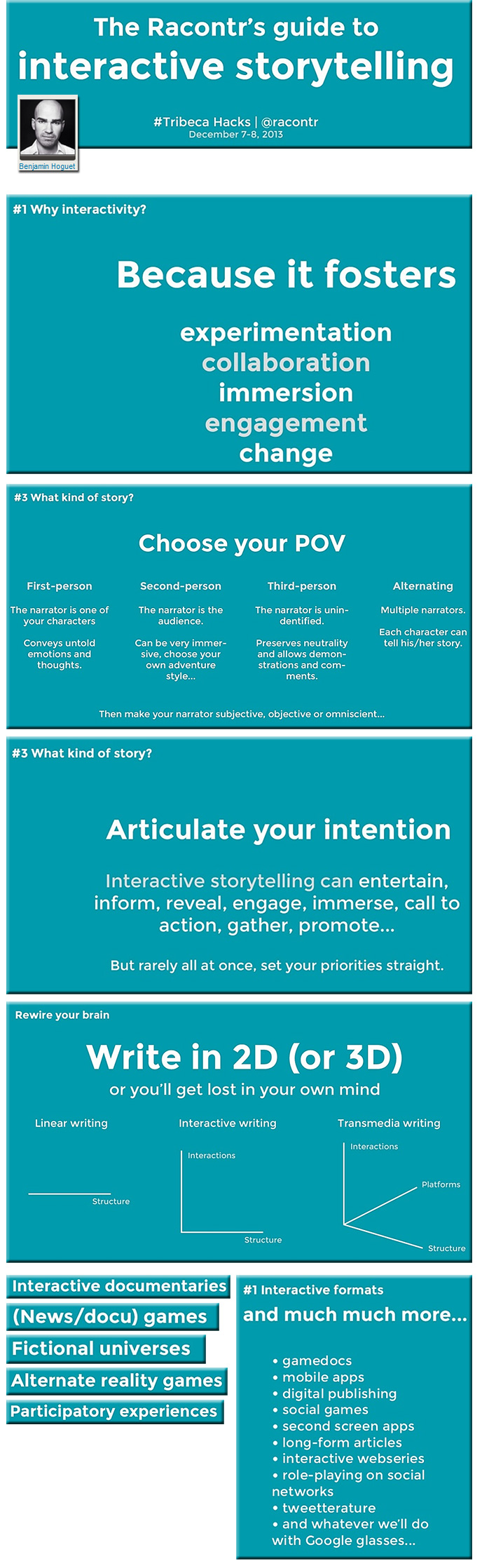

Virtual reality is thought of primarily as a vehicle for gaming, but there are applications in the works that utilize the technology for storytelling. There’s already a production company, Condition One, out with a trailer for a documentary, “Zero Point” (see video above), about VR, told through VR. Media companies are just starting to get their hands on how to use VR, which to date has been for marketing stunts like one HBO did at SXSW for “Game of Thrones.” One developer even recreated the apartment from “Seinfeld.”

An Oculus Rift hack that lets you draw in 3-D — from wired.com by Joseph Flaherty

Excerpt:

Facebook’s $2 billion acquisition of Oculus Rift has reopened conversation about the potential of virtual reality, but a key question remains: How will we actually interact with these worlds? Minecraft creator Markus Persson noted that while these tools can enable amazing experiences, moving around and creating in them is far from a solved problem.

A group of Royal College of Art students (Guillaume Couche, Daniela Paredes Fuentes, Pierre Paslier, and Oluwaseyi Sosanya) has developed a tool called GravitySketch that starts tracing an outline of how these systems could work as creative tools.


Addendum later on 4/8/14:

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)