Inside Amazon’s artificial intelligence flywheel — from wired.com by Steven Levy

How deep learning came to power Alexa, Amazon Web Services, and nearly every other division of the company.

Excerpt (emphasis DSC):

Amazon loves to use the word flywheel to describe how various parts of its massive business work as a single perpetual motion machine. It now has a powerful AI flywheel, where machine-learning innovations in one part of the company fuel the efforts of other teams, who in turn can build products or offer services to affect other groups, or even the company at large. Offering its machine-learning platforms to outsiders as a paid service makes the effort itself profitable—and in certain cases scoops up yet more data to level up the technology even more.

It took a lot of six-pagers to transform Amazon from a deep-learning wannabe into a formidable power. The results of this transformation can be seen throughout the company—including in a recommendations system that now runs on a totally new machine-learning infrastructure. Amazon is smarter in suggesting what you should read next, what items you should add to your shopping list, and what movie you might want to watch tonight. And this year Thirumalai started a new job, heading Amazon search, where he intends to use deep learning in every aspect of the service.

“If you asked me seven or eight years ago how big a force Amazon was in AI, I would have said, ‘They aren’t,’” says Pedro Domingos, a top computer science professor at the University of Washington. “But they have really come on aggressively. Now they are becoming a force.”

Maybe the force.

From DSC:

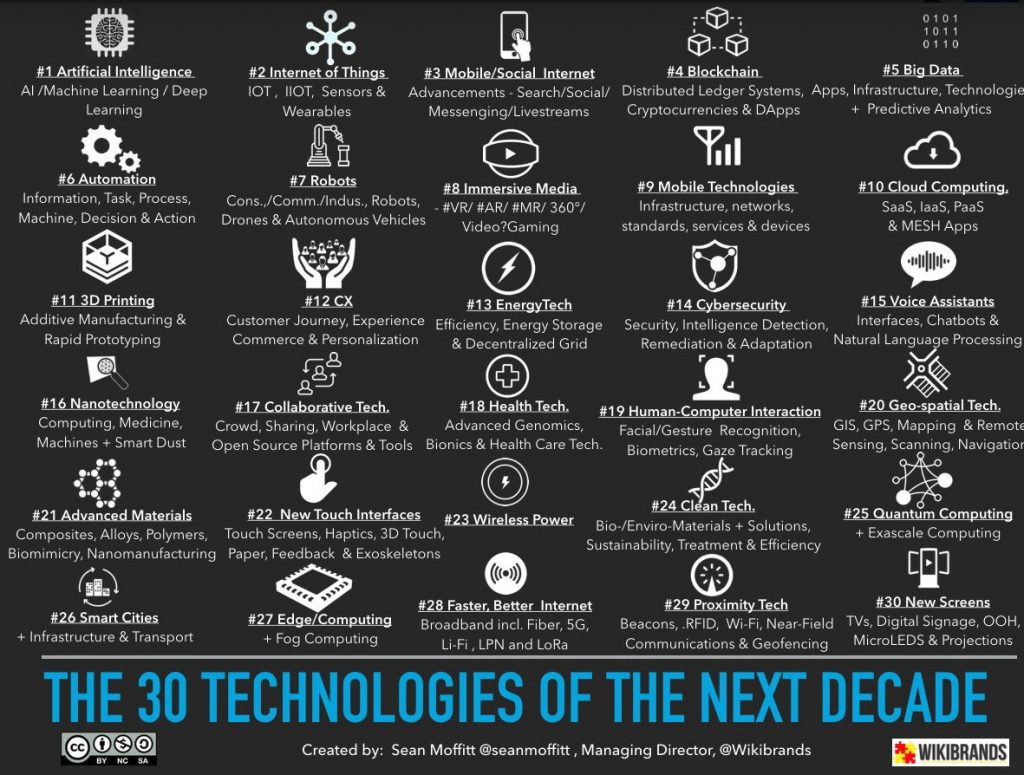

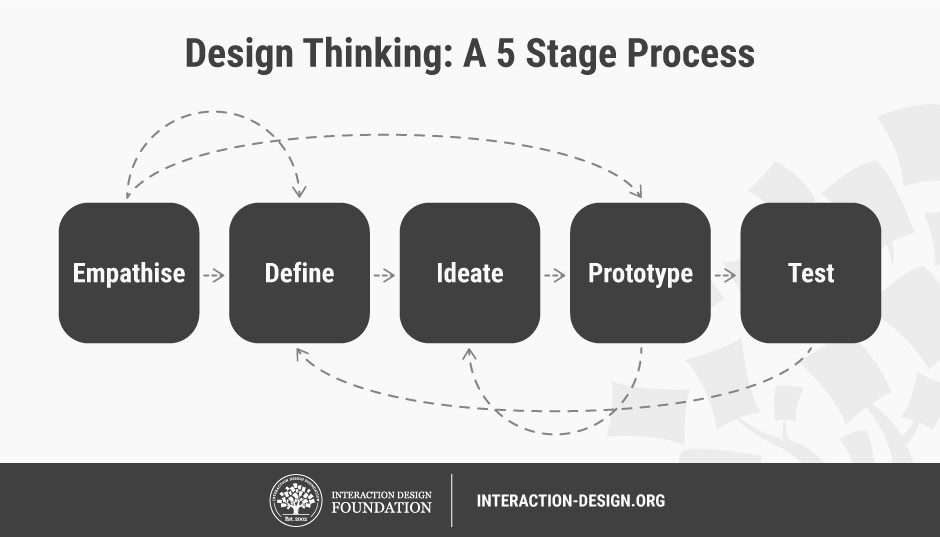

When will we begin to see more mainstream recommendation engines for learning-based materials? With the demand for people to reinvent themselves, such a next-generation learning platform can’t come soon enough!

- Turning over control to learners to create/enhance their own web-based learner profiles, and allowing them to decide who can access those profiles.

- AI-based recommendation engines to help people identify curated, effective digital playlists for what they want to learn about.

- Voice-driven interfaces.

- Matching employees to employers.

- Matching one’s learning preferences (not styles) with the content being presented as one piece of a personalized learning experience.

- From cradle to grave. Lifelong learning.

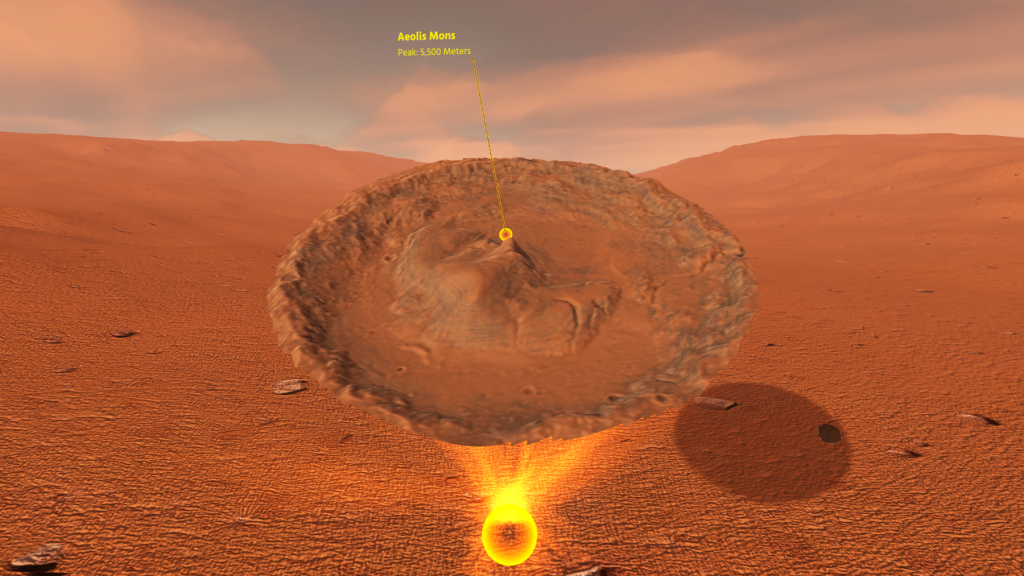

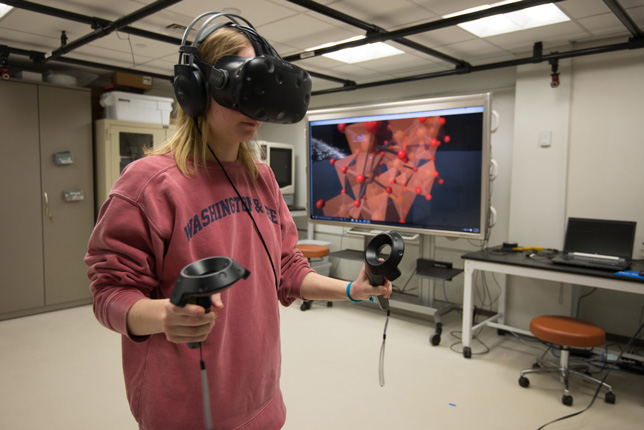

- Multimedia-based, interactive content.

- Asynchronously and synchronously connecting with others learning about the same content.

- Online tutoring/assistance; remote assistance.

- Reinventing. Staying relevant. Surviving.

- Competency-based learning.

We’re about to embark on a period in American history where career reinvention will be critical, perhaps more so than it’s ever been before. In the next decade, as many as 50 million American workers—a third of the total—will need to change careers, according to McKinsey Global Institute. Automation, in the form of AI (artificial intelligence) and RPA (robotic process automation), is the primary driver. McKinsey observes: “There are few precedents in which societies have successfully retrained such large numbers of people.”

Also relevant/see:

Online education’s expansion continues in higher ed with a focus on tech skills — from educationdive.com by James Paterson

Dive Brief:

- Online learning continues to expand in higher ed with the addition of several online master’s degrees and a new for-profit college that offers a hybrid of vocational training and liberal arts curriculum online.

- Inside Higher Ed reported the nonprofit learning provider edX is offering nine master’s degrees through five U.S. universities — the Georgia Institute of Technology, the University of Texas at Austin, Indiana University, Arizona State University and the University of California, San Diego. The programs include cybersecurity, data science, analytics, computer science and marketing, and they cost from around $10,000 to $22,000. Most offer stackable certificates, helping students who change their educational trajectory.

- Former Harvard University Dean of Social Science Stephen Kosslyn, meanwhile, will open Foundry College in January. The for-profit, two-year program targets adult learners who want to upskill, and it includes training in soft skills such as critical thinking and problem solving. Students will pay about $1,000 per course, though the college is waiving tuition for its first cohort.

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)