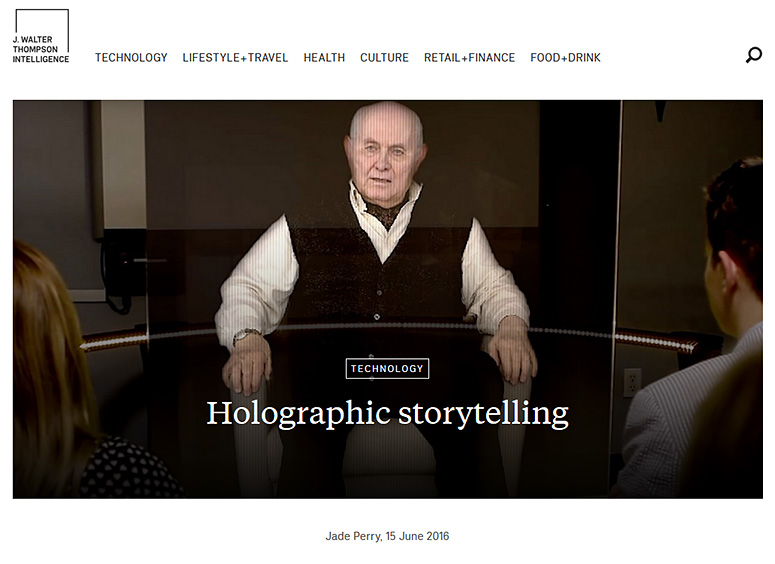

Holographic storytelling — from jwtintelligence.com by Jade Perry

Excerpt (emphasis DSC):

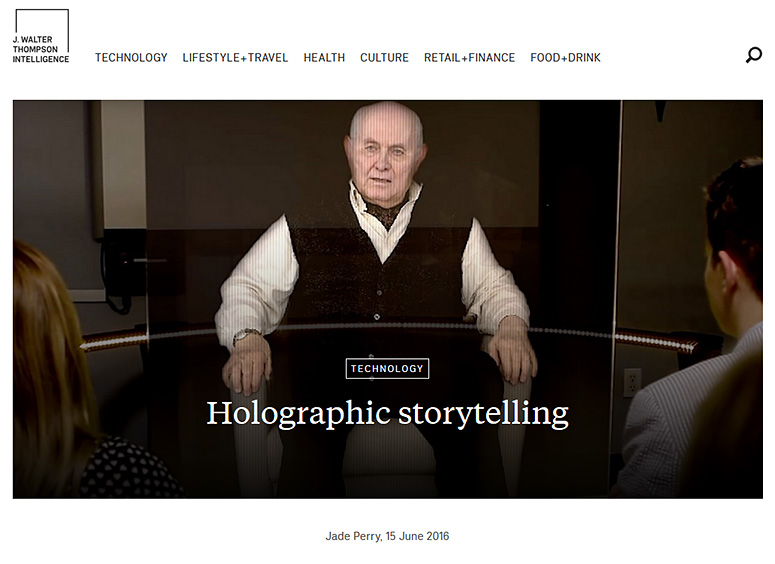

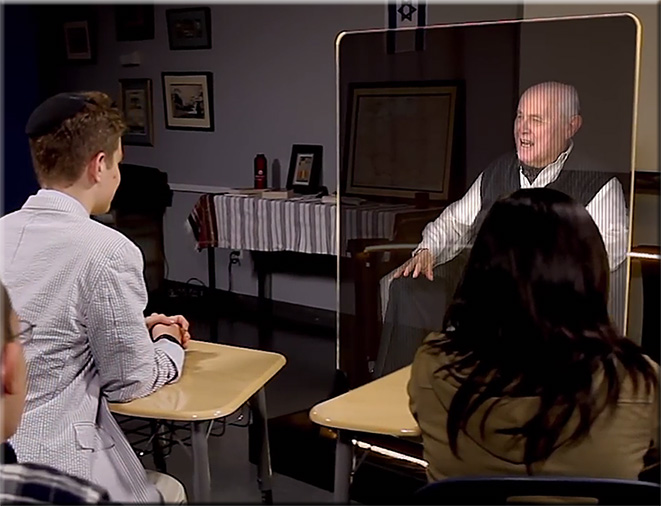

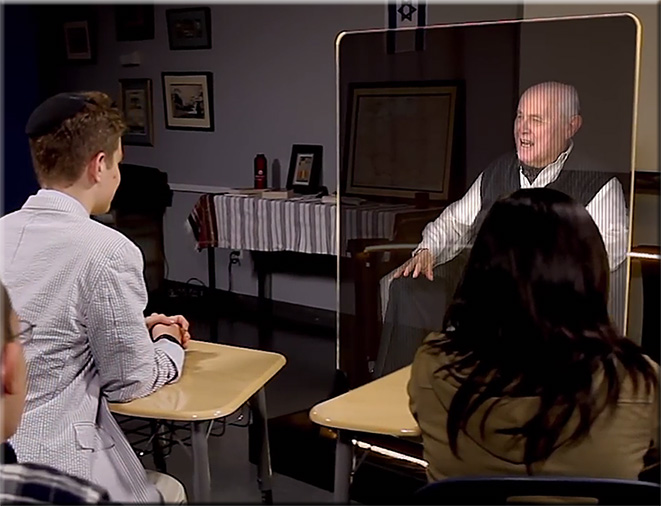

The stories of Holocaust survivors are brought to life with the help of interactive 3D technologies.

‘New Dimensions in Testimony’ is a new way of preserving history for future generations. The project brings to life the stories of Holocaust survivors with 3D video, revealing raw first-hand accounts that are more interactive than learning through a history book.

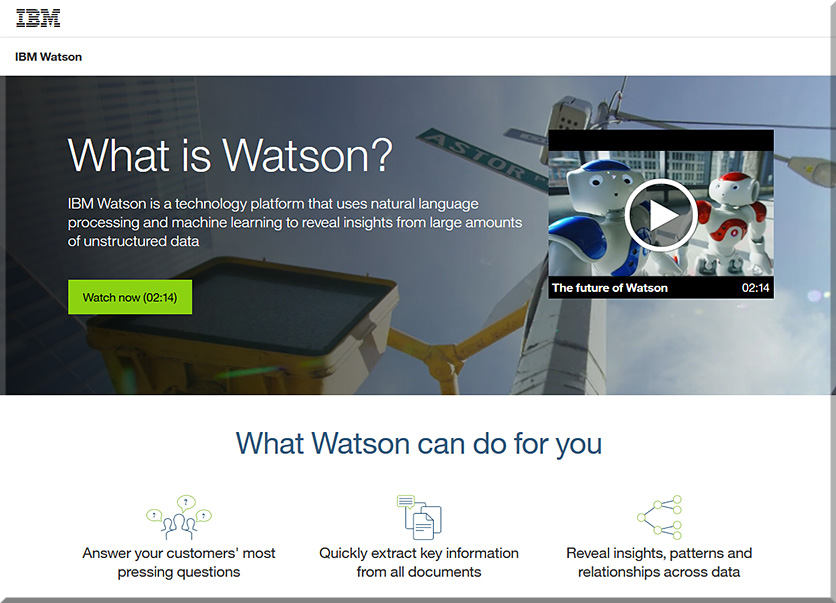

Holocaust survivor Pinchas Gutter, the first subject of the project, was filmed answering over 1000 questions, generating approximately 25 hours of footage. By incorporating natural language processing from Conscience Display, viewers were able to ask Gutter’s holographic image questions that triggered relevant responses.

From DSC:

I wonder... is this an example of a next-generation, visually based chatbot*?

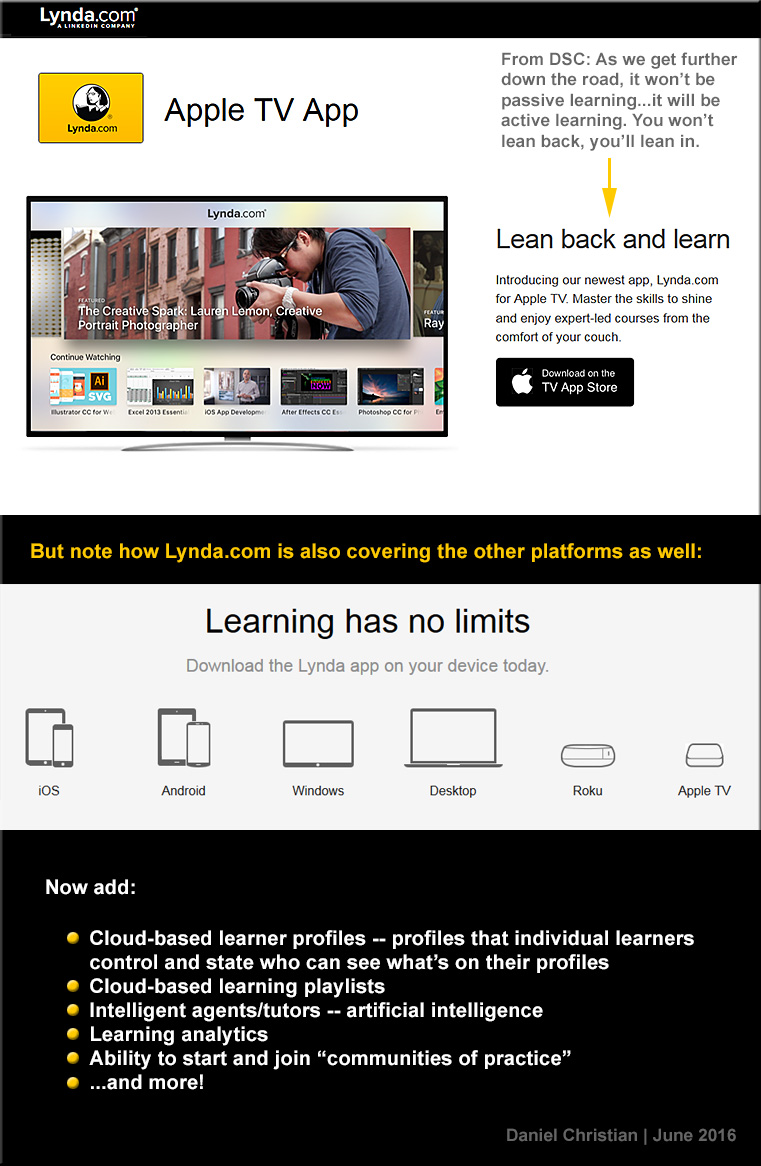

With the growth of artificial intelligence (AI), intelligent systems, and new types of human-computer interaction (HCI), this type of concept could offer an on-demand learning approach that's highly engaging and accessible from face-to-face settings as well as from online learning environments. (If it could also take in some of a particular learner's context, including where that learner is within the relevant Zone of Proximal Development, via web-based learner profiles/data, it would be even better.)

As an aside, is this how we will obtain customer service from the businesses of the future? See below.

*The complete beginner’s guide to chatbots — from chatbotsmagazine.com by Matt Schlicht

Everything you need to know.

Excerpt (emphasis DSC):

What are chatbots? Why are they such a big opportunity? How do they work? How can I build one? How can I meet other people interested in chatbots?

These are the questions we’re going to answer for you right now.

…

What is a chatbot?

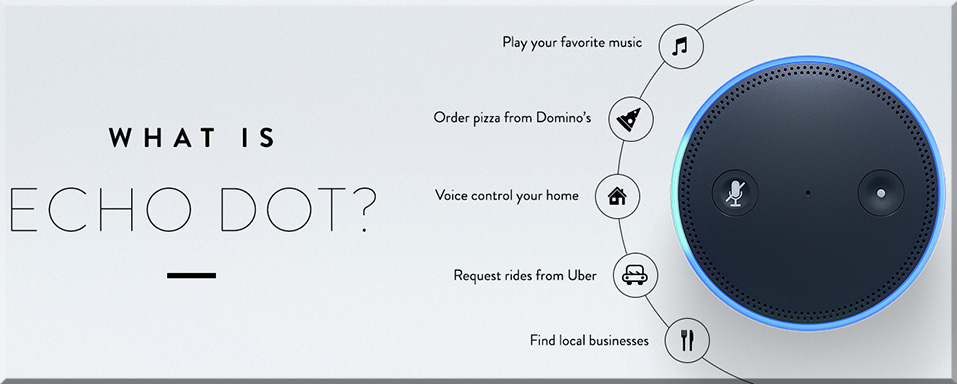

A chatbot is a service, powered by rules and sometimes artificial intelligence, that you interact with via a chat interface. The service could be any number of things, ranging from functional to fun, and it could live in any major chat product (Facebook Messenger, Slack, Telegram, Text Messages, etc.).
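The definition above says chatbots are "powered by rules and sometimes artificial intelligence." As a rough illustration of the rules half only, here is a minimal Python sketch. It assumes each rule is a regular expression paired with a reply function, and the first matching rule wins. The rules themselves are hypothetical (echoing the "weather bot" idea). This is not any chat platform's API, and no real weather lookup happens.

```python
import re
from typing import Callable

# A rule pairs a regular expression with a function that builds a reply from the match.
Rule = tuple[re.Pattern[str], Callable[[re.Match[str]], str]]

# Hypothetical rules for a toy "weather bot"; order matters because the first match wins.
RULES: list[Rule] = [
    (re.compile(r"\b(hi|hello|hey)\b", re.I),
     lambda m: "Hello! Ask me about the weather."),
    (re.compile(r"weather in ([a-z ]+)", re.I),
     lambda m: f"Looking up the weather in {m.group(1).strip().title()}..."),
]

FALLBACK = "Sorry, I don't understand that yet."


def reply(message: str) -> str:
    """Return the reply from the first rule whose pattern matches the message."""
    for pattern, respond in RULES:
        match = pattern.search(message)
        if match:
            return respond(match)
    return FALLBACK


print(reply("What's the weather in new york?"))  # Looking up the weather in New York...
```

A purely rule-based bot like this only handles phrasings its author anticipated. That limitation is where the "sometimes artificial intelligence" part comes in, for example a model that classifies the user's intent before a rule or service produces the answer.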

…


Examples of chatbots

Weather bot. Get the weather whenever you ask.

Grocery bot. Help me pick out and order groceries for the week.

News bot. Ask it to tell you whenever something interesting happens.

Life advice bot. I’ll tell it my problems and it helps me think of solutions.

Personal finance bot. It helps me manage my money better.

Scheduling bot. Get me a meeting with someone on the Messenger team at Facebook.

A bot that’s your friend. In China there is a bot called Xiaoice, built by Microsoft, that over 20 million people talk to.

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)