Five things to know about Facebook’s huge augmented reality fantasy — from gizmodo.com by Michael Nunez

Excerpt:

One example of how this might work is at a restaurant. Your friend will be able to leave an augmented reality sticky note on the menu, letting you know which menu item is the best or which one’s the worst when you hold your camera up to it.

Another example is if you’re at a celebration, like New Year’s Eve or a birthday party. Facebook could use an augmented reality filter to fill the scene with confetti or morph the bar into an aquarium or any other setting corresponding with the team’s mascot. The basic examples are similar to Snapchat’s geo-filters, but the more sophisticated uses go further, because the platform will actually let you leave digital objects behind for your friends to discover. Very cool!

“We’re going to make the camera the first mainstream AR platform,” said Zuckerberg.

Here’s Everything Facebook Announced at F8, From VR to Bots — from wired.com

Excerpt:

On Tuesday, Facebook kicked off its annual F8 developer conference with a keynote address. CEO Mark Zuckerberg and others on his executive team made a bunch of announcements aimed at developers, but the implications for Facebook’s users were pretty clear. The apps that billions of us use daily—Facebook, Messenger, WhatsApp, Instagram—are going to be getting new camera tricks, new augmented reality capabilities, and more bots. So many bots!

Facebook’s bold and bizarre VR hangout app is now available for the Oculus Rift — from theverge.com by Nick Statt

Excerpt:

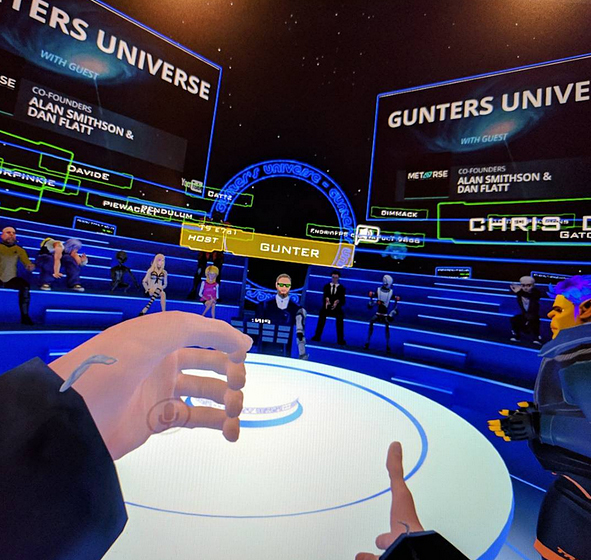

Facebook’s most fascinating virtual reality experiment, a VR hangout session where you can interact with friends as if you were sitting next to one another, is now ready for the public. The company is calling the product Facebook Spaces, and it’s being released today in beta form for the Oculus Rift.


From DSC:

Is this a piece of the future of distance education / online learning-based classrooms?

Facebook Launches Local ‘Developer Circles’ To Help Entrepreneurs Collaborate, Build Skills — from forbes.com by Kathleen Chaykowski

Excerpt:

In 2014, Facebook launched its FbStart program, which has helped the teams behind several thousand early-stage apps build and grow them through a set of free tools and mentorship meetings. On Tuesday, Facebook unveiled a new program to reach a broader range of developers, as well as students interested in technology.

The program, called “Developer Circles,” is intended to bring developers in local communities together offline as well as online in Facebook groups to share technical know-how, discuss ideas and build new projects. The program is also designed to serve students who may not yet be working on an app, but who are interested in building skills to work in computer science.

Facebook launches augmented reality Camera Effects developer platform — from techcrunch.com by Josh Constine

Excerpt:

Facebook will rely on an army of outside developers to contribute augmented reality image filters and interactive experiences to its new Camera Effects platform. After today’s Facebook F8 conference, the first effects will become available inside Facebook’s Camera feature on smartphones, but the Camera Effects platform is designed to eventually be compatible with future augmented reality hardware, such as eyeglasses.

While critics thought Facebook was just mindlessly copying Snapchat with its recent Stories and Camera features in Facebook, Messenger, Instagram and WhatsApp, Mark Zuckerberg tells TechCrunch his company was just laying the groundwork for today’s Camera Effects platform launch.

Mark Zuckerberg Sees Augmented Reality Ecosystem in Facebook — from nytimes.com by Mike Isaac

Excerpt:

On Tuesday, Mr. Zuckerberg introduced what he positioned as the first mainstream augmented reality platform, a way for people to view and digitally manipulate the physical world around them through the lens of their smartphone cameras.

Facebook Launches Social VR App ‘Facebook Spaces’ in Beta for Rift — from virtualrealitypulse.com by Ben Lang

Addendums on 4/20/17:

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)
