100 Data and Analytics Predictions Through 2021 — from Gartner

From DSC:

I just wanted to include some excerpts (see below) from Gartner’s 100 Data and Analytics Predictions Through 2021 report. I do so to illustrate how technology’s influence continues to grow throughout many societies around the globe, and to say that if you want a sure-thing job over the next 1-15 years, I would go into studying data science and/or artificial intelligence!

Excerpts:

As evidenced by its pervasiveness within our vast array of recently published Predicts 2017 research, it is clear that data and analytics are increasingly critical elements across most industries, business functions and IT disciplines. Most significantly, data and analytics are key to a successful digital business. This collection of more than 100 data-and-analytics-related Strategic Planning Assumptions (SPAs) or predictions through 2021 heralds several transformations and challenges ahead that CIOs and data and analytics leaders should embrace and include in their planning for successful strategies. Common themes across the discipline in general, and within particular business functions and industries, include:

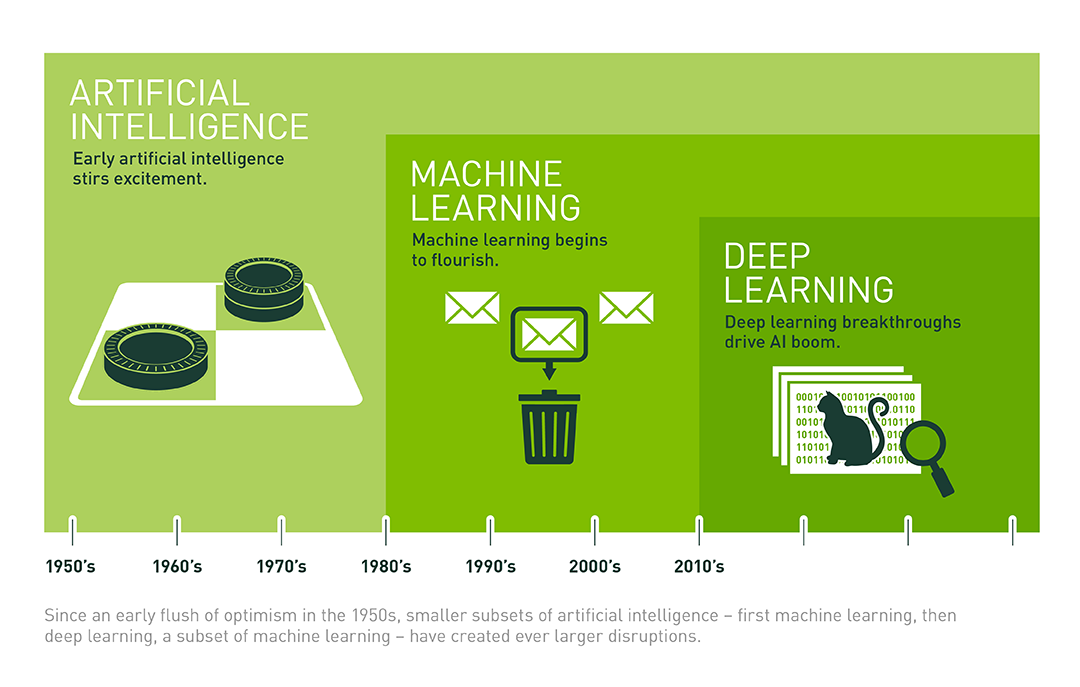

- Artificial intelligence (AI) is emerging as a core business and analytic competency. Beyond yesteryear’s hard-coded algorithms and manual data science activities, machine learning (ML) promises to transform business processes, reconfigure workforces, optimize infrastructure behavior and blend industries through rapidly improved decision making and process optimization.

- Natural language is beginning to play a dual role in many organizations and applications: as a source of input for analytic and other applications, and as a variety of output, in addition to traditional analytic visualizations.

- Information itself is being recognized as a corporate asset (albeit not yet a balance sheet asset), prompting organizations to become more disciplined about monetizing, managing and measuring it as they do with other assets. This includes “spending” it like cash, selling/licensing it to others, participating in emerging data marketplaces, applying asset management principles to improve its quality and availability, and quantifying its value and risks in a variety of ways.

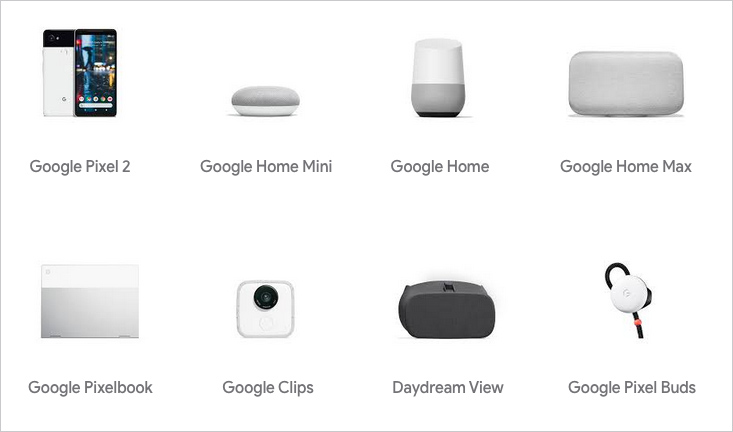

- Smart devices that both produce and consume Internet of Things (IoT) data will also move intelligent computing to the edge of business functions, enabling devices in almost every industry to operate and interact with humans and each other without a centralized command and control. The resulting opportunities for innovation are unbounded.

- Trust becomes the watchword for businesses, devices and information, leading to the creation of digital ethics frameworks, accreditation and assessments. Most attempts at leveraging blockchain as a trust mechanism fail until technical limitations, particularly performance, are solved.

…

Education

Significant changes to the global education landscape have taken shape in 2016, and spotlight new and interesting trends for 2017 and beyond. “Predicts 2017: Education Gets Personal” is focused on several SPAs, each uniquely contributing to the foundation needed to create the digitalized education environments of the future. Organizations and institutions will require new strategies to leverage existing and new technologies to maximize benefits to the organization in fresh and innovative ways.

- By 2021, more than 30% of institutions will be forced to execute on a personalization strategy to maintain student enrollment.

- By 2021, the top 100 higher education institutions will have to adopt AI technologies to stay competitive in research.

…

Artificial Intelligence

Business and IT leaders are stepping up to a broad range of opportunities enabled by AI, including autonomous vehicles, smart vision systems, virtual customer assistants, smart (personal) agents and natural-language processing. Gartner believes that this new general-purpose technology is just beginning a 75-year technology cycle that will have far-reaching implications for every industry. In “Predicts 2017: Artificial Intelligence,” we reflect on the near-term opportunities, and the potential burdens and risks that organizations face in exploiting AI. AI is changing the way in which organizations innovate and communicate their processes, products and services.

Practical strategies for employing AI and choosing the right vendors are available to data and analytics leaders right now.

- By 2019, more than 10% of IT hires in customer service will mostly write scripts for bot interactions.

- Through 2020, organizations using cognitive ergonomics and system design in new AI projects will achieve long-term success four times more often than others.

- By 2020, 20% of companies will dedicate workers to monitor and guide neural networks.

- By 2019, startups will overtake Amazon, Google, IBM and Microsoft in driving the AI economy with disruptive business solutions.

- By 2019, AI platform services will cannibalize revenues for 30% of market-leading companies.

“Predicts 2017: Drones”

- By 2020, the top seven commercial drone manufacturers will all offer analytical software packages.

“Predicts 2017: The Reinvention of Buying Behavior in Vertical-Industry Markets”

- By 2021, 30% of net new revenue growth from industry-specific solutions will include AI technology.

…

Advanced Analytics and Data Science

Advanced analytics and data science are fast becoming mainstream solutions and competencies in most organizations, even supplanting traditional BI and analytics resources and budgets. They allow more types of knowledge and insights to be extracted from data. To become and remain competitive, enterprises must seek to adopt advanced analytics, and adapt their business models, establish specialist data science teams and rethink their overall strategies to keep pace with the competition. “Predicts 2017: Analytics Strategy and Technology” offers advice on overall strategy, approach and operational transformation to algorithmic business that leadership needs to build to reap the benefits.

- By 2018, deep learning (deep neural networks [DNNs]) will be a standard component in 80% of data scientists’ toolboxes.

- By 2020, more than 40% of data science tasks will be automated, resulting in increased productivity and broader usage by citizen data scientists.

- By 2019, natural-language generation will be a standard feature of 90% of modern BI and analytics platforms.

- By 2019, 50% of analytics queries will be generated using search, natural-language query or voice, or will be autogenerated.

- By 2019, citizen data scientists will surpass data scientists in the amount of advanced analysis produced.

- By 2020, 95% of video/image content will never be viewed by humans; instead, it will be vetted by machines that provide some degree of automated analysis.

- Through 2020, lack of data science professionals will inhibit 75% of organizations from achieving the full potential of IoT.

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)