From DSC:

First some recent/relevant postings:

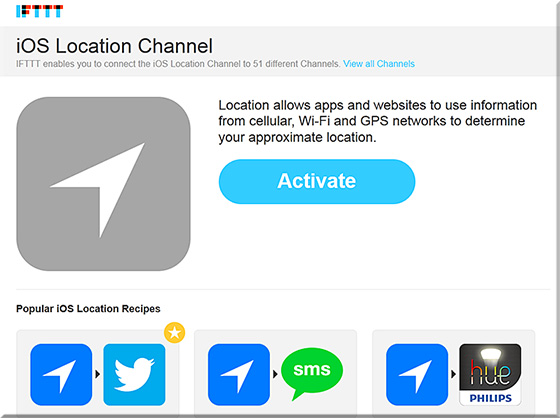

IFTTT’s ingenious new feature: Controlling apps with your location — from wired.com by Kyle VanHemert

An update to the IFTTT app lets you use your location in recipes. Image: IFTTT

Excerpt:

IFTTT stands athwart history. At a point where the software world is obsessed with finding ever more specialized apps for increasingly specific problems, the San Francisco-based company is gleefully doing just the opposite. It simply wants to give people a bunch of tools and let them figure it out. It all happens with simple conditional statements the company calls “recipes.” So, you can use the service to execute the following command: If I take a screenshot, then upload it to Dropbox. If this RSS feed is updated, then send me a text message. It’s great for kluging together quick, automated solutions for the little workflows that slip into the cracks between apps and services.

If This, Then That (IFTTT)

4 reasons why Apple’s iBeacon is about to disrupt interaction design — from wired.com by Kyle VanHemert

Excerpt:

You step inside Walmart and your shopping list is transformed into a personalized map, showing you the deals that’ll appeal to you most. You pause in front of a concert poster on the street, pull out your phone, and you’re greeted with an option to buy tickets with a single tap. You go to your local watering hole, have a round of drinks, and just leave, having paid—and tipped!—with Uber-like ease. Welcome to the world of iBeacon.

It sounds absurd, but it’s true: Here we are in 2013, and one of the most exciting things going on in consumer technology is Bluetooth. Indeed, times have changed. This isn’t the maddening, battery-leeching, why-won’t-it-stay-paired protocol of yore. Today we have Bluetooth Low Energy which solves many of the technology’s perennial problems with new protocols for ambient, continuous, low-power connectivity. It’s quickly becoming a big deal.

The Internet of iThings: Apple’s iBeacon is already in almost 200 million iPhones and iPads — from forbes.com by Anthony Kosner

Excerpt (emphasis DSC):

Because of iBeacons’ limited range, they are well-suited for transmitting content that is relevant in the immediate proximity.

From DSC:

Along the lines of the above postings…I recently had a meeting in which the topic of iBeacons came up. It was mentioned that museums will be using this sort of thing; for example, approaching a piece of art will trigger an explanation of that piece in the museum’s self-guided tour application.

That idea made me wonder whether such technology could be used in a classroom…and I quickly thought, “Yes!”

For example, a student goes to the SW corner of the room and approaches a table. That table has an iBeacon-like device on it, which triggers a presentation within a mobile application on the student’s device. The student reviews the presentation and moves on to the SE corner of the room, where they approach a different table with a different iBeacon on it. That beacon triggers a quiz on the material they just reviewed, and the application then proceeds to build upon that information. Etc. Etc. Physically-based scaffolding along with some serious blended/hybrid learning. It’s like taking the concept of QR codes to the next level.
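
To make that flow a bit more concrete, here is a minimal sketch in Python of the logic such an application might use. It is only an illustration and not how any real iBeacon SDK works: the beacon ids (`sw-table`, `se-table`), the activity names, and the `activity_for` and `complete` helpers are all made up for this example, and the actual beacon detection (which the device's operating system would handle) is left out entirely.

```python
from dataclasses import dataclass, field

# Hypothetical station table: beacon id -> (activity, prerequisite or None).
# The ids and activity names are invented for illustration only.
STATIONS = {
    "sw-table": ("presentation-1", None),
    "se-table": ("quiz-1", "presentation-1"),
}


@dataclass
class Student:
    name: str
    completed: set = field(default_factory=set)


def activity_for(student, beacon_id):
    """Return the activity to launch when the student's device sees a beacon.

    Returns None if the beacon is unknown or its prerequisite is not done yet.
    """
    station = STATIONS.get(beacon_id)
    if station is None:
        return None
    activity, prerequisite = station
    if prerequisite is not None and prerequisite not in student.completed:
        return None
    return activity


def complete(student, activity):
    """Record that the student has finished an activity."""
    student.completed.add(activity)


if __name__ == "__main__":
    student = Student("Ana")
    print(activity_for(student, "se-table"))  # None: quiz is still locked
    first = activity_for(student, "sw-table")
    print(first)                              # presentation-1
    complete(student, first)
    print(activity_for(student, "se-table"))  # quiz-1
```

The prerequisite column is what gives the "scaffolding" its order: walking up to the SE table first does nothing, and the quiz only appears once the presentation at the SW table has been reviewed.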

Some iBeacon vendors out there include:

Data mining, interaction design, user interface design, and user experience design may never be the same again.

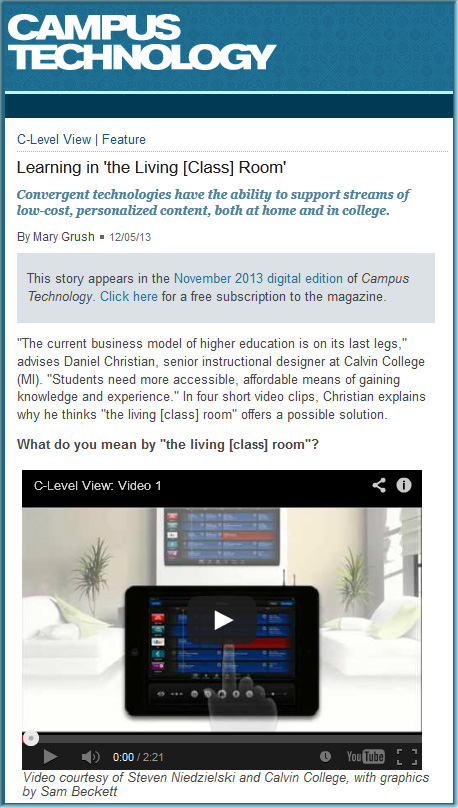

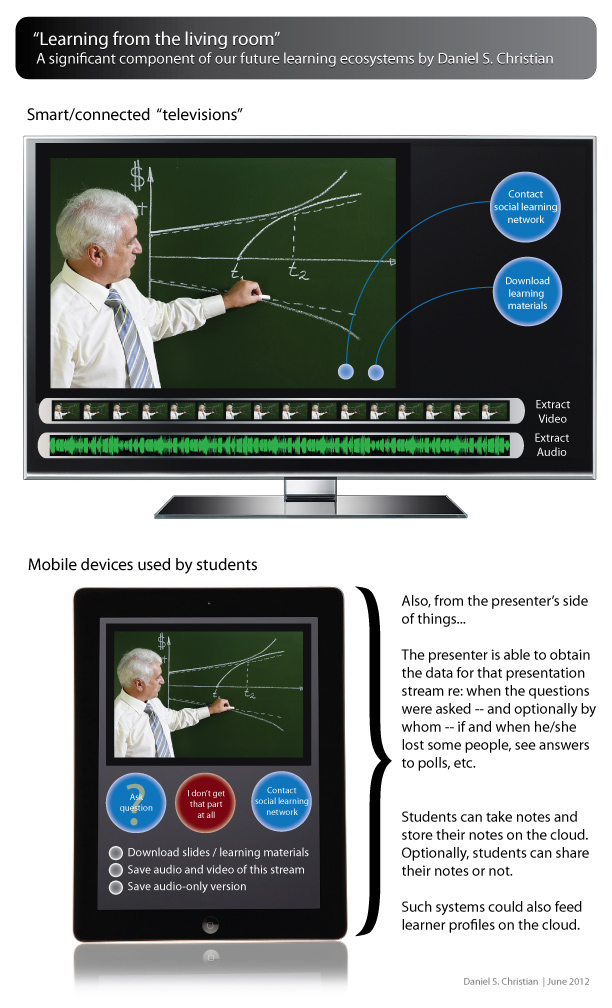

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)