Thriving in an age of continuous reinvention — from pwc.com

As existential threats converge, many companies are taking steps to reinvent themselves. Is it enough? And what will it take to succeed?

Firms must continue to evolve to remain relevant — from lawyersweekly.com.au by Emma Musgrave

Law firms of all shapes and sizes must continue to reinvent themselves beyond the COVID-19 pandemic, according to two senior leaders at Piper Alderman.

“So [it’s] not saying, ‘We’re going to roll out ChatGPT across the board and use that’; it’s finding some particular cases that might be useful,” he explained.

“We’ve had, for example, [instances] where lawyers have said, ‘We’ve got a bunch of documents we use on a regular basis or a bunch of devices we use on a regular basis. Can we put these into ChatGPT and see if we can [find a] better way of pulling data out of things?’ And so use cases like that where people are coming up with ideas and trying them out and seeing how they go and [questioning whether] we roll this out more widely? I think that’s the approach that seems to be the best.”

Is Legal Technology the Future of Legal Services? — from lawfuel.com by Kelli Hall

Impact of Legal Technology on the Legal Industry

Revolutionizing Law Firm Strategies With AI And SEO — from abovethelaw.com by Annette Choti

Explore how AI and SEO are transforming law firm strategies, from automated keyword research to predictive SEO and voice recognition technology.

AI and SEO are two powerful technologies transforming the digital world for legal offices. AI can enhance SEO strategies, offering a competitive edge in search engine rankings. AI can streamline your content creation process. Learn about machine learning’s role in enhancing content optimization, contributing to more targeted and effective marketing efforts.

Navigating Gen AI In Legal: Insights From CES And A Dash Of Tequila Thinking — from abovethelaw.com by Stephen Embry

What should be our true north in making decisions about how to use technology?

Embracing Gen AI in Legal

So in all the Gen AI smoke and handwringing, let’s first identify what we excel at as lawyers. What only we as lawyers are qualified to do. Then, when it comes to technology and the flavor of the day, Gen AI, let’s look relentlessly at how we can eliminate the time we spend on anything else. Let technology free us up for the work only we can do.

That’s Satya Nadella’s advice. And Microsoft has done pretty well under his leadership.

From Gavels to Algorithms: Judge Xavier Rodriguez Discusses the Future of Law and AI — from jdsupra.com by

It’s a rare privilege to converse with a visionary like Judge Xavier Rodriguez, who has seamlessly blended the realms of justice, law, and technology. His journey from a medieval history enthusiast to a United States district court judge specializing in eDiscovery and AI is inspiring.

…

Judge Rodriguez provides an insightful perspective on the need for clear AI regulations. He delves into the technical aspects and underscores the potential of AI to democratize the legal system. He envisions AI as a transformative force capable of simplifying the complexities that often make legal services out of reach for many.

Judge Rodriguez champions a progressive approach to legal education, emphasizing the urgency of integrating technology competence into the curriculum. This foresight will prepare future lawyers for a world where AI tools are as commonplace as legal pads, fostering a sense of anticipation for the future of legal practice.

From DSC:

As a bit of context here…

After the Jewish people had been exiled to various places, the walls of Jerusalem lay in ruins until the fifth century B.C.E. At that point, a man named Nehemiah returned to Jerusalem as the provincial governor and completed the repairs of the walls. The verses below really stood out to me with regard to how a leader should behave and what a leader should be like. He was a servant leader: not demanding choice treatment, not squeezing the people for every last drop, and not using his position to treat himself extra right.

I don’t like to get political on this blog, as I already lose a great deal of readership due to including matters of faith. But today’s leaders (throughout all kinds of organizations) need to learn from Nehemiah’s example, regardless of whatever their beliefs/faiths may be.

14 Moreover, from the twentieth year of King Artaxerxes, when I was appointed to be their governor in the land of Judah, until his thirty-second year—twelve years—neither I nor my brothers ate the food allotted to the governor. 15 But the earlier governors—those preceding me—placed a heavy burden on the people and took forty shekels of silver from them in addition to food and wine. Their assistants also lorded it over the people. But out of reverence for God I did not act like that. 16 Instead, I devoted myself to the work on this wall. All my men were assembled there for the work; we did not acquire any land.

17 Furthermore, a hundred and fifty Jews and officials ate at my table, as well as those who came to us from the surrounding nations. 18 Each day one ox, six choice sheep and some poultry were prepared for me, and every ten days an abundant supply of wine of all kinds. In spite of all this, I never demanded the food allotted to the governor, because the demands were heavy on these people.

19 Remember me with favor, my God, for all I have done for these people.

The Evolution of Collaboration: Unveiling the EDUCAUSE Corporate Engagement Program — from er.educause.edu

The program is designed to strengthen the collaboration between private industry and higher education institutions—and evolve the higher education technology market. The new program will do so by taking the following actions:

By building better bridges between our corporate and institutional communities, we can help accelerate our shared mission of furthering the promise of higher education.

Speaking of collaborations, also see:

Could the U.S. become an “Apprentice Nation?” — from Michael B. Horn and Ryan Craig

Intermediaries do the heavy lifting for the employers.

Bottom line: As I discussed with Michael later in the show, we already have the varied system that Leonhardt imagines—it’s just that it’s often by chaos and neglect. Just like we didn’t say to 8th graders a century ago, “go find your own high school,” we need to design a post-high school system with clear and well-designed pathways that include:

Listen to the complete episode here and subscribe to the podcast.

More Chief Online Learning Officers Step Up to Senior Leadership Roles

In 2024, I think we will see more Chief Online Learning Officers (COLOs) take on more significant roles and projects at institutions. In recent years, we have seen many COLOs accept provost positions. The typical provost career path that runs up through the faculty ranks does not adequately prepare leaders for the digital transformation occurring in postsecondary education.

As we’ve seen with the professionalization of the COLO role, in general, these same leaders proved to be incredibly valuable during the pandemic due to their unique skills: part academic, part entrepreneur, part technologist, COLOs are unique in higher education. They sit at the epicenter of teaching, learning, technology, and sustainability. As institutions are evolving, look for more online and professional continuing leaders to take on more senior roles on campuses.

Julie Uranis, Senior Vice President, Online and Strategic Initiatives, UPCEA

Instructional Designers as Institutional Change Agents — from er.educause.edu/ by Aaron Bond, Barb Lockee and Samantha Blevins

Systems thinking and change strategies can be used to improve the overall functioning of a system. Because instructional designers typically use systems thinking to facilitate behavioral changes and improve institutional performance, they are uniquely positioned to be change agents at higher education institutions.

In higher education, instructional designers are often seen as “change agents” because they help to facilitate behavioral changes and improve performance at their institutions. Due to their unique position of influence among higher education leaders and faculty and their use of systems thinking, instructional designers can help bridge institutional priorities and the specific needs of various stakeholders. COVID-19 and the switch to emergency remote teaching raised awareness of the critical services instructional designers provide, including preparing faculty to teach—and students to learn—in well-designed learning environments. Today, higher education institutions increasingly rely on the experience and expertise of instructional designers.

Figure 1. How Instructional Designers Employ Systems Thinking

Expanding Bard’s understanding of YouTube videos — via AI Valley

Reshaping the tree: rebuilding organizations for AI — from oneusefulthing.org by Ethan Mollick

Technological change brings organizational change.

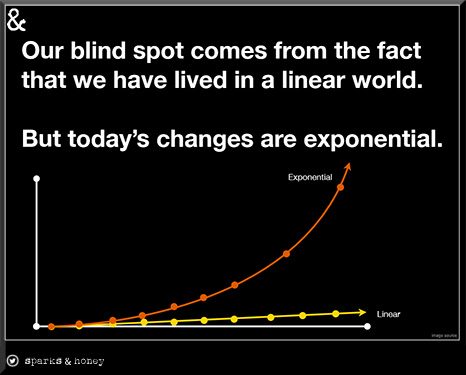

I am not sure who said it first, but there are only two ways to react to exponential change: too early or too late. Today’s AIs are flawed and limited in many ways. While that restricts what AI can do, the capabilities of AI are increasing exponentially, both in terms of the models themselves and the tools these models can use. It might seem too early to consider changing an organization to accommodate AI, but I think that there is a strong possibility that it will quickly become too late.

From DSC:

Readers of this blog have seen the following graphic for several years now, but there is no question that we are in a time of exponential change. One would have had an increasingly hard time arguing the opposite of this perspective during that time.

Nvidia’s revenue triples as AI chip boom continues — from cnbc.com by Jordan Novet; via GSV

KEY POINTS

Here’s how the company did, compared to the consensus among analysts surveyed by LSEG, formerly known as Refinitiv:

Nvidia’s revenue grew 206% year over year during the quarter ending Oct. 29, according to a statement. Net income, at $9.24 billion, or $3.71 per share, was up from $680 million, or 27 cents per share, in the same quarter a year ago.
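As a quick sanity check on the figures quoted above (a sketch that is not part of the CNBC article; the function names are hypothetical), a 206% increase works out to roughly 3.06 times the year-ago revenue, which is consistent with the headline's "triples." The same arithmetic applied to the quoted net income figures gives an increase of roughly 1,259%.

```python
def growth_multiple(pct_increase: float) -> float:
    """Convert a percentage increase into a multiple of the starting value."""
    return 1 + pct_increase / 100


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Figures as quoted in the excerpt above.
revenue_multiple = growth_multiple(206)        # revenue grew 206% year over year
net_income_change = pct_change(680e6, 9.24e9)  # $680 million -> $9.24 billion

print(f"Revenue multiple: {revenue_multiple:.2f}x")
print(f"Net income change: {net_income_change:.0f}%")
```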

DC: Anyone surprised? This is why the U.S. doesn’t want high-powered chips going to China. History repeats itself…again. The ways of the world/power continue on.

Pentagon’s AI initiatives accelerate hard decisions on lethal autonomous weapons https://t.co/PTDmJugiE2

— Daniel Christian (he/him/his) (@dchristian5) November 27, 2023

OpenAI announces leadership transition — from openai.com

Chief technology officer Mira Murati appointed interim CEO to lead OpenAI; Sam Altman departs the company. Search process underway to identify permanent successor.

Excerpt (emphasis DSC):

The board of directors of OpenAI, Inc., the 501(c)(3) that acts as the overall governing body for all OpenAI activities, today announced that Sam Altman will depart as CEO and leave the board of directors. Mira Murati, the company’s chief technology officer, will serve as interim CEO, effective immediately.

…

Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.

…

As a part of this transition, Greg Brockman will be stepping down as chairman of the board and will remain in his role at the company, reporting to the CEO.

From DSC:

I’m not here to pass judgment, but all of us on planet Earth should be at least concerned with this disturbing news.

AI is one of the most powerful sets of emerging technologies on the planet right now. OpenAI is arguably the most powerful vendor/innovator/influencer/leader in that space. And Sam Altman was the face of OpenAI, and arguably for AI itself. So this is a big deal.

What concerns me is what is NOT being relayed in this posting:

To whom much is given, much is expected.

Also related/see:

OpenAI CEO Sam Altman ousted, shocking AI world — from washingtonpost.com by Gerrit De Vynck and Nitasha Tiku

The artificial intelligence company’s directors said he was not ‘consistently candid in his communications with the board’

Altman’s sudden departure sent shock waves through the technology industry and the halls of government, where he had become a familiar presence in debates over the regulation of AI. His rise and apparent fall from tech’s top rung is one of the fastest in Silicon Valley history. In less than a year, he went from being Bay Area famous as a failed start-up founder who reinvented himself as a popular investor in small companies to becoming one of the most influential business leaders in the world. Journalists, politicians, tech investors and Fortune 500 CEOs alike had been clamoring for his attention.

OpenAI’s Board Pushes Out Sam Altman, Its High-Profile C.E.O. — from nytimes.com by Cade Metz

Sam Altman, the high-profile chief executive of OpenAI, who became the face of the tech industry’s artificial intelligence boom, was pushed out of the company by its board of directors, OpenAI said in a blog post on Friday afternoon.

From DSC:

Updates — I just saw these items

After learning today’s news, this is the message I sent to the OpenAI team: https://t.co/NMnG16yFmm pic.twitter.com/8x39P0ejOM

— Greg Brockman (@gdb) November 18, 2023

Sam Altman fired as CEO of OpenAI — from theverge.com by Jay Peters

In a sudden move, Altman is leaving after the company’s board determined that he ‘was not consistently candid in his communications.’ President and co-founder Greg Brockman has also quit.

As you saw at Microsoft Ignite this week, we’re continuing to rapidly innovate for this era of AI, with over 100 announcements across the full tech stack – from AI systems, models, and tools in Azure, to Copilot. Most importantly, we’re committed to delivering all of this to our…

— Satya Nadella (@satyanadella) November 17, 2023

AI Pedagogy Project, metaLAB (at) Harvard

Creative and critical engagement with AI in education. A collection of assignments and materials inspired by the humanities, for educators curious about how AI affects their students and their syllabi

AI Guide

Focused on the essentials and written to be accessible to a newcomer, this interactive guide will give you the background you need to feel more confident with engaging conversations about AI in your classroom.

From #47 of SAIL: Sensemaking AI Learning — by George Siemens

Excerpt (emphasis DSC):

Welcome to Sensemaking, AI, and Learning (SAIL), a regular look at how AI is impacting education and learning.

Over the last year, after dozens of conferences, many webinars, panels, workshops, and many (many) conversations with colleagues, it’s starting to feel like higher education, as a system, is in an AI groundhog’s day loop. I haven’t heard anything novel generated by universities. We have a chatbot! Soon it will be a tutor! We have a generative AI faculty council! Here’s our list of links to sites that also have lists! We need AI literacy! My mantra over the last while has been that higher education leadership is failing us on AI in a more dramatic way than it failed us on digitization and online learning. What will your universities be buying from AI vendors in five years because they failed to develop a strategic vision and capabilities today?

AI + the Education System — from drphilippahardman.substack.com by Dr. Philippa Hardman

The key to relevance, value & excellence?

The magic school of the future is one that helps students learn to work together and care for each other — from stefanbauschard.substack.com by Stefan Bauschard

AI is going to alter economic and professional structures. Will we alter the educational structures?

(e) What is really required is a significant re-organization of schooling and curriculum. At a meta-level, the school system is focused on developing the type of intelligence I opened with, and the economic value of that is going to rapidly decline.

(f) This is all going to happen very quickly (faster than any previous change in history), and many people aren’t paying attention. AI is already here.

Building a Consumer-Centered Legal Market: Takeaways from IAALS’ Convening on Regulatory Reform — from iaals.du.edu by Jessica Bednarz

Two initial high-level takeaways from the convening include:

IAALS will share a more comprehensive list of lessons learned and recommendations for building and sustaining regulatory reform in its post-convening report, currently set to be released in early 2024.

Growing Enrollment, Shrinking Future — from insidehighered.com by Liam Knox

Undergraduate enrollment rose for the first time since 2020, stoking hopes for a long-awaited recovery. But surprising areas of decline may dampen that optimism.

There is good news and bad news in the National Student Clearinghouse Research Center’s latest enrollment report.

First, the good news: undergraduate enrollment climbed by 2.1 percent this fall, its first total increase since 2020. Enrollment increases for Black, Latino and Asian students—by 2.2 percent, 4.4 percent and 4 percent, respectively—were especially notable after last year’s declines.

The bad news is that freshman enrollment declined by 3.6 percent, nearly undoing last year’s gain of 4.6 percent and leaving first-year enrollment less than a percentage point higher than it was in fall 2021, during the thick of the pandemic. Those declines were most pronounced for white students—and, perhaps most surprisingly, at four-year institutions with lower acceptance rates, reversing years of growth trends for the most selective colleges and universities.
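The "less than a percentage point" claim above can be checked by compounding the two yearly changes. The sketch below is illustrative only: it assumes both percentages apply to the same first-year enrollment measure, and the helper name `compound` is hypothetical.

```python
def compound(*pct_changes: float) -> float:
    """Net percentage change after applying successive percentage changes."""
    multiple = 1.0
    for pct in pct_changes:
        multiple *= 1 + pct / 100
    return (multiple - 1) * 100


# Last year's 4.6% gain followed by this year's 3.6% decline, as quoted above.
net_vs_fall_2021 = compound(4.6, -3.6)
print(f"Net change vs. fall 2021: {net_vs_fall_2021:.2f}%")
```

Under that assumption, the net change comes to about 0.83 percent, which agrees with the article's wording. Note that the two percentages cannot simply be subtracted, because the decline applies to the already-larger fall 2022 base.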

An Army of Temps: AFT Contingent Faculty Quality of Work/Life Report 2022 — from aft.org (American Federation of Teachers) by Randi Weingarten, Fedrick C. Ingram, and Evelyn DeJesus

This recent survey adds to our understanding of how contingency plays out in the lives of millions of college and university faculty.

From DSC:

A college or university’s adjunct faculty members — if they are out there practicing what they are teaching about — are some of the most valuable people within higher education. They have real-life, current experience. They know which skills are necessary to thrive in their fields. They know their sections of the marketplace.

Some parts of rural America are changing fast. Can higher education keep up? — from usatoday.com by Nick Fouriezos (Open Campus)

More states have started directly tying academic programming to in-demand careers.

Across rural America, both income inequality and a lack of affordable housing are on the rise. Remote communities like the Tetons are facing not just an economic challenge, but also an educational one, as changing workforce needs meet a critical skills and training gap.

…

Earlier this month, Montana announced that 12 of its colleges would establish more than a dozen “micro-pathways” – stackable credential programs that can be completed in less than a year – to put people on a path to either earning an associate degree or immediately getting hired in industries such as health, construction, manufacturing and agriculture.

“Despite unemployment hitting record lows in Montana, rural communities continue to struggle economically, and many low-income families lack the time and resources to invest in full-time education and training,” the Montana University System announced in a statement with its partner on the project, the national nonprofit Education Design Lab.

Colleges Must Respond to America’s Skill-Based Economy — from edsurge.com by Mordecai I. Brownlee (Columnist)

To address our children’s hunger and our communities’ poverty, our educational system must be redesigned to remove the boundaries between high school, college and careers so that more Americans can train for and secure employment that will sustain them.

In 2021, Jobs for the Future outlined a pathway toward realizing such a revolution in The Big Blur report, which argues for a radical restructuring of education for grades 11 through 14 by erasing the arbitrary dividing line between high school and college. Ideas for accomplishing this include courses and work experiences for students designed for career preparation.

The New Arms Race in Higher Ed — from jeffselingo.com by Jeff Selingo

Bottom line: Given all the discussion about the value of a college education, if you’re looking for “amenities” on campuses these days be sure to find out how faculty are engaging students (both in person and with tools like AR/VR), whether they’re teaching students about using AI, and ways institutions are certifying learning with credentials that have currency in the job market.

In that same newsletter, also see Jeff’s section entitled, “Where Canada leads the U.S. in higher ed.”

College Uncovered — from hechingerreport.org

Thinking of going to college? Sending your kid? You may already be caught up in the needless complexity of the admissions process, with its never-ending stress and that “you’ll-be-lucky-to-get-in” attitude from colleges that nonetheless pretend to have your interests at heart.

What aren’t they telling you? A lot, as it turns out — beginning with how much they actually cost, how long it will take to finish, the likelihood that graduates get jobs and the myriad advantages that wealthy applicants enjoy. They don’t want you to know that transfer credits often aren’t accepted, or that they pay testing companies for the names of prospects to recruit and sketchy advice websites for the contact information of unwitting students. And they don’t reveal tricks such as how to get admitted even if you’re turned down as a freshman.

But we will. In College Uncovered, from The Hechinger Report and GBH News, two experienced higher education journalists pull back the ivy on how colleges and universities really work, providing information students and their parents need to have before they make one of the most expensive decisions in their lives: whether and where to go to college. We expose the problems, pitfalls and risks, with inside information you won’t hear on other podcasts, including disconcerting facts that they’ve sometimes pried from unwilling universities.

Fall 2023 enrollment trends in 5 charts — from highereddive.com by Natalie Schwartz

We’re breaking down some of the biggest developments this term, based on preliminary figures from the National Student Clearinghouse Research Center.

Short-term credentials continued to prove popular among undergraduate and graduate students. In fall 2023, enrollment in undergraduate certificate programs shot up 9.9% compared to the year before, while graduate certificate enrollment rose 5.7%.

Degree programs didn’t fare as well. Master’s programs saw the smallest enrollment increase, of 0.2%, followed by bachelor’s degree programs, which saw headcounts rise 0.9%.

President Speaks: Colleges need an overhaul to meet the future head on — from highereddive.com by Beth Martin

Higher education faces an existential threat from forces like rapidly changing technology and generational shifts, one university leader argues.

Higher education must increasingly equip students with the skills and mindset to become lifelong learners — to learn how to learn, essentially — so that no matter what the future looks like, they will have the skills, mindset and wherewithal to learn whatever it is that they need and by whatever means. That spans from the commitment of a graduate program to something as quick as a microcredential.

Having survived the pandemic, university administrators, faculty, and staff no longer have their backs against the wall. Now is the time to take on these challenges and meet the future head on.