From DSC:

The recent drama over at OpenAI reminds me of how important a few individuals are in influencing the lives of millions of people.

We have reached an agreement in principle for Sam Altman to return to OpenAI as CEO with a new initial board of Bret Taylor (Chair), Larry Summers, and Adam D’Angelo.

We are collaborating to figure out the details. Thank you so much for your patience through this.

— OpenAI (@OpenAI) November 22, 2023

The C-Suites (i.e., the Chief Executive Officers, Chief Financial Officers, Chief Operating Officers, and the like) of companies like OpenAI, Alphabet (Google), Meta (Facebook), Microsoft, Netflix, NVIDIA, Amazon, Apple, and a handful of others have enormous power. Why? Because of the sheer reach and influence of the technologies that they create, market, and provide.

We need to be praying for the hearts of those in the C-Suites of these powerful vendors — as well as for their Boards.

LORD, grant them wisdom and help mold their hearts and perspectives so that they truly care about others. May their decisions not be based on making money alone…or doing something just because they can.

What happens in their hearts and minds DOES and WILL continue to impact the rest of us. And we’re talking about real ramifications here. This isn’t pie-in-the-sky thinking. This is for real. With real consequences. If you doubt that, go ask the families of those whose sons and daughters took their own lives because of what happened on social media platforms. Disclosure: I use LinkedIn and Twitter quite a bit. I’m not bashing these platforms per se. But my point is that a variety of technologies have real impacts. What goes on in the hearts and minds of the leaders of these tech companies matters.

Some relevant items:

Navigating Attention-Driving Algorithms, Capturing the Premium of Proximity for Virtual Teams, & New AI Devices — from implications.com by Scott Belsky

Excerpts (emphasis DSC):

No doubt, technology influences us in many ways we don’t fully understand. But one area where valid concerns run rampant is the attention-seeking algorithms powering the news and media we consume on modern platforms that efficiently polarize people. Perhaps we’ll call it The Law of Anger Expansion: When people are angry in the age of algorithms, they become MORE angry and LESS discriminate about who and what they are angry at.

…

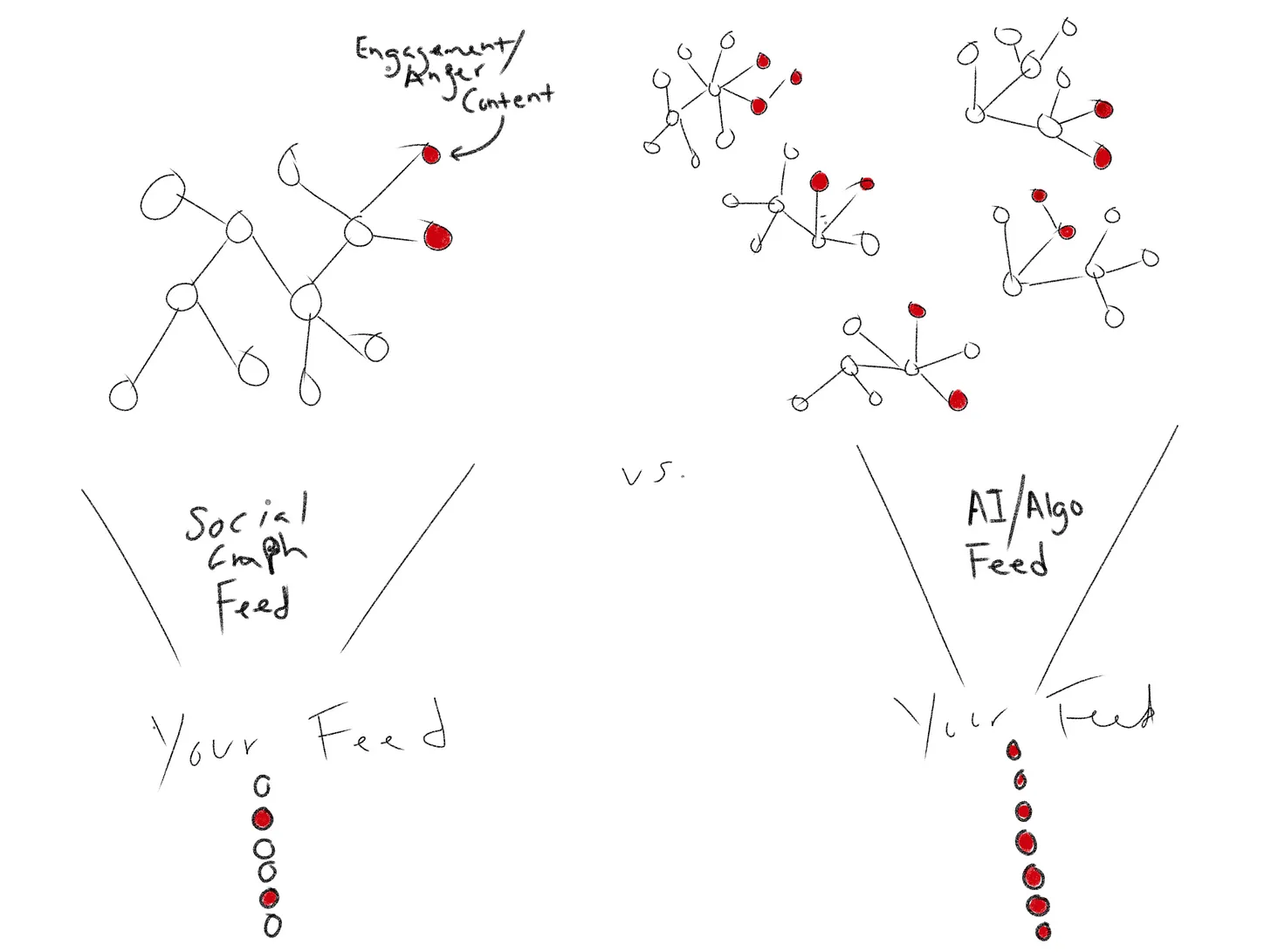

Algorithms that optimize for grabbing attention, thanks to AI, ultimately drive polarization.

…

The AI learns quickly that a rational or “both sides” view is less likely to sustain your attention (so you won’t get many of those, which drives the sensation that more of the world agrees with you). But the rage-inducing stuff keeps us swiping.

Our feeds are being sourced in ways that dramatically change the content we’re exposed to.

And then these algorithms expand on these ultimately destructive emotions – “If you’re afraid of this, maybe you should also be afraid of this” or “If you hate those people, maybe you should also hate these people.”

…

How do we know when we’ve been polarized? This is the most important question of the day.

…

Whatever is inflaming you is likely an algorithm-driven expansion of anger and an imbalance of context.