Why Natural Language Processing is the Future of Business Intelligence — from dzone.com by Gur Tirosh

Until now, we have been interacting with computers in a way that they understand, rather than us. We have learned their language. But now, they’re learning ours.

Excerpt:

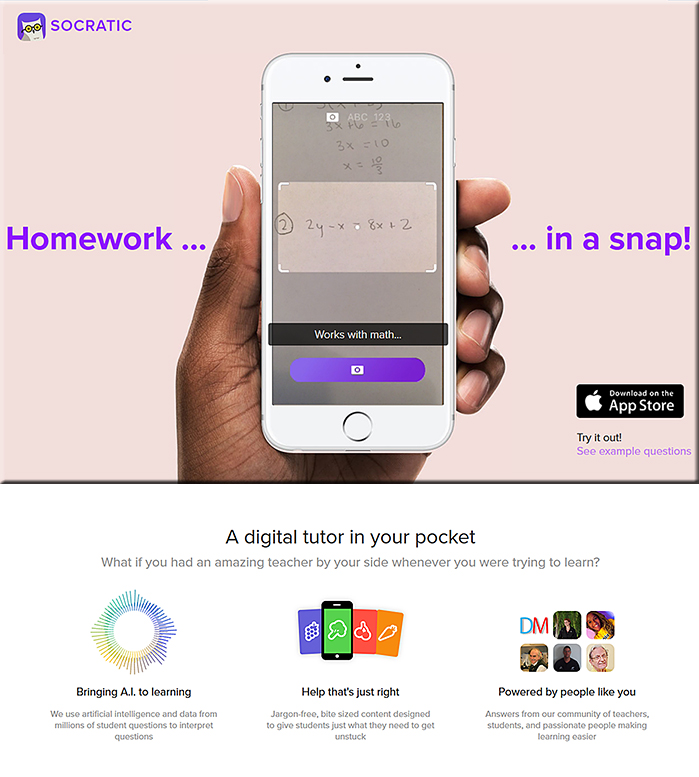

Every time you ask Siri for directions, a complex chain of cutting-edge code is activated. It allows “her” to understand your question, find the information you’re looking for, and respond to you in a language that you understand. This has only become possible in the last few years. Until now, we have been interacting with computers in a way that they understand, rather than us. We have learned their language.

But now, they’re learning ours.

The technology underpinning this revolution in human-computer relations is Natural Language Processing (NLP). And it’s already transforming BI in ways that go far beyond simply making the interface easier. Before long, business-transforming, life-changing information will be discovered merely by talking with a chatbot.

This future is not far away. In some ways, it’s already here.

What Is Natural Language Processing?

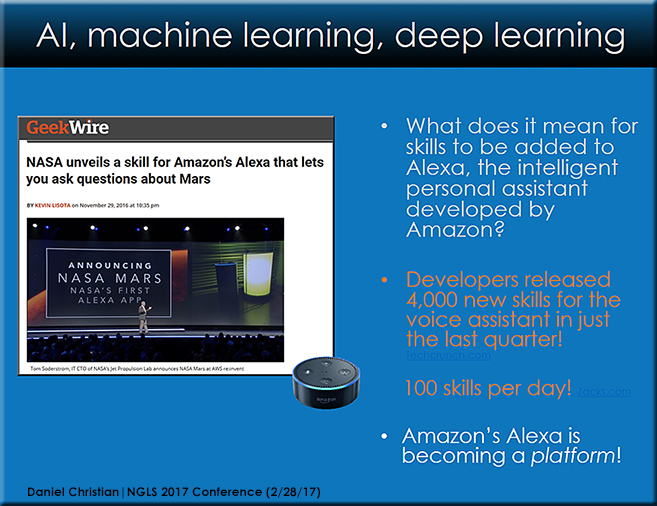

NLP, otherwise known as computational linguistics, is the combination of Machine Learning, AI, and linguistics that allows us to talk to machines as if they were human.

But NLP aims to eventually render GUIs — even UIs — obsolete, so that interacting with a machine is as easy as talking to a human.

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)