From DSC:

I know Quentin Schultze from our years working together at Calvin College, in Grand Rapids, Michigan (USA). I have come to greatly appreciate Quin as a person of faith, as an innovative/entrepreneurial professor, as a mentor to his former students, and as an excellent communicator.

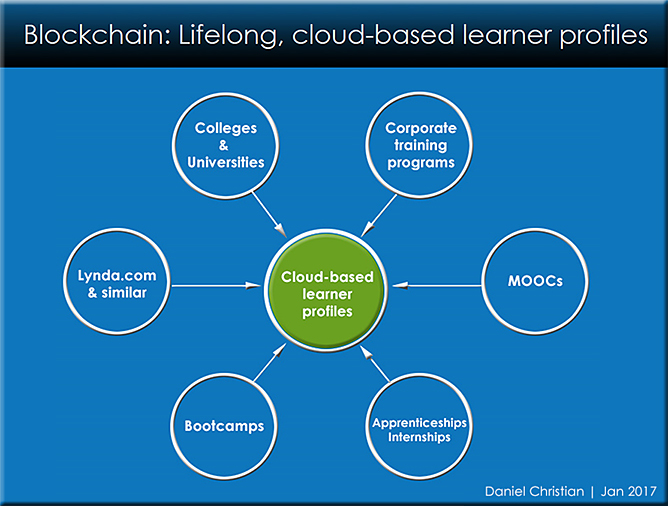

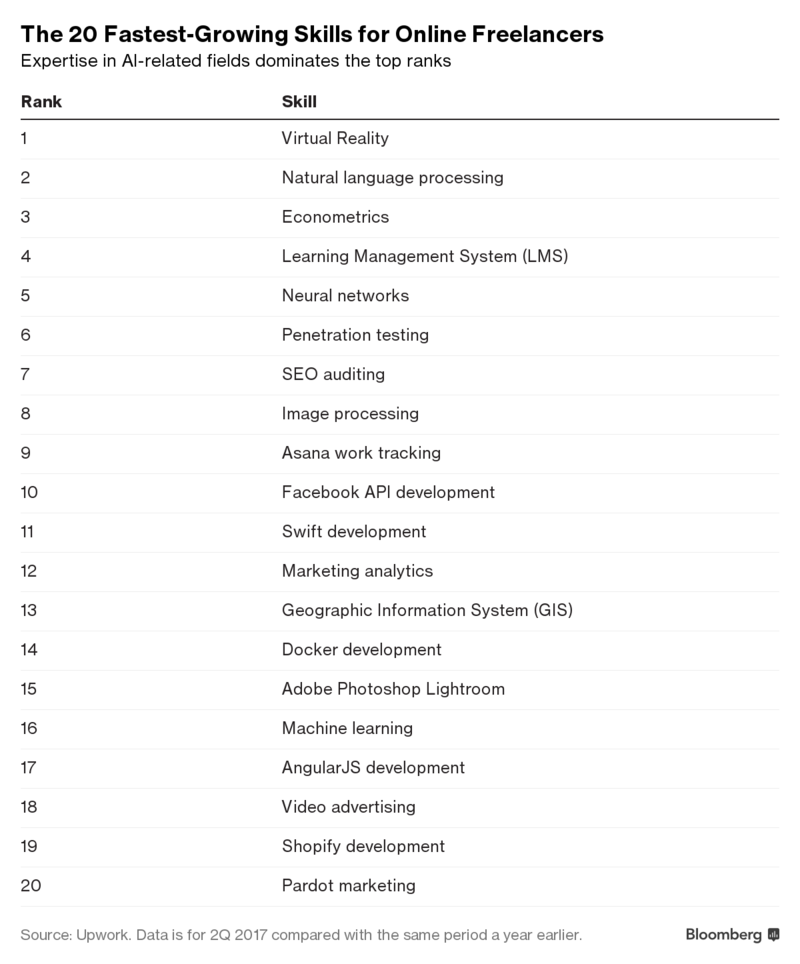

Quin has written a very concise, wisdom-packed book that I would like to recommend to anyone seeking to become a better communicator, leader, and servant. But I would especially like to recommend this book to the leadership at Google, Amazon, Apple, Microsoft, IBM, Facebook, Nvidia, the major companies developing robots, and other high-tech companies. Why do I list these organizations? Because, given the exponential pace of technological change, these organizations and their leaders have an enormous responsibility to ensure that the technologies they are developing result in positive changes for societies throughout the globe. They need wisdom, especially as they work on emerging technologies such as Artificial Intelligence (AI), personal assistants and bots, algorithms, robotics, the Internet of Things, big data, blockchain, and more. These technologies already exert an increasingly powerful influence on societies around the world. And we haven’t seen anything yet! Just because we can develop and implement something doesn’t mean that we should. Again, we need wisdom here.

But as Quin states, it’s not just about knowledge, the mind and our thoughts. It’s about our hearts as well. That is, we need leaders who care about others, who can listen well to others, who can serve others well while avoiding gimmicks, embracing diversity, building trust, fostering compromise and developing/exhibiting many of the other qualities that Quin writes about in his book. Our societies desperately need leaders who care about others and who seek to serve others well.

I highly recommend that you pick up a copy of Quin’s book. Few people can communicate as much in as few words as Quin can. In fact, I wish that more writing on the web, and more articles and research coming out of academia, were as concise and powerful as Quin’s book, *Communicate Like a True Leader: 30 Days of Life-Changing Wisdom*.

To lead is to accept responsibility and act responsibly.

— Quentin Schultze

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)