From DSC:

I’ve been thinking about Applicant Tracking Systems (ATSs) for a while now, but the article below made me revisit my reflections on them. (By the way, my thoughts below are not meant to be a slam on Google. I like Google and I use their tools daily.) I’ve included a few items below. I had also seen other articles and vendors’ products that focused specifically on ATSs, but I couldn’t locate them all.

How Google’s AI-Powered Job Search Will Impact Companies And Job Seekers — from forbes.com by Forbes Coaches Council

Excerpt:

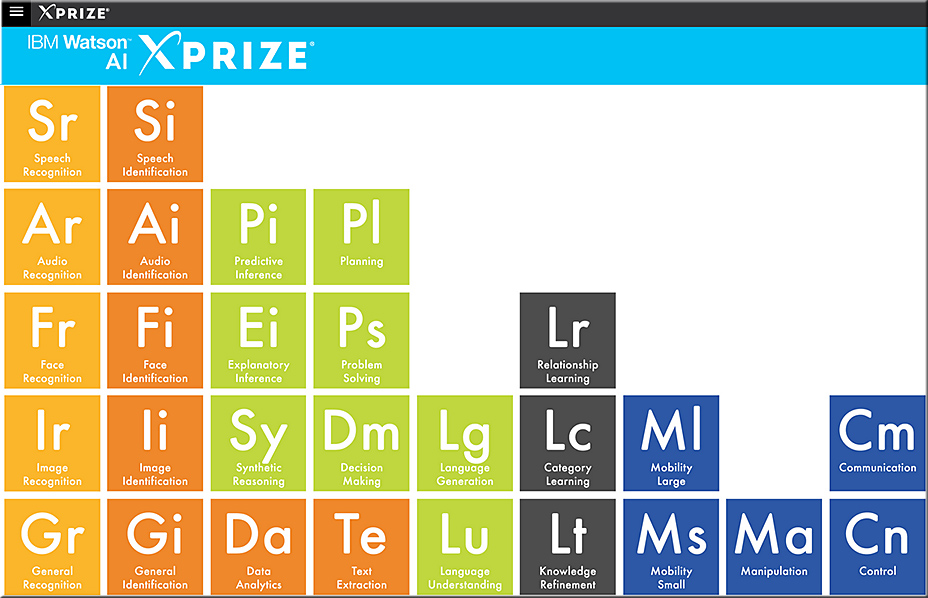

In mid-June, Google announced the implementation of an AI-powered search function aimed at connecting job seekers with jobs by sorting through posted recruitment information. The system allows users to search for basic phrases, such as “jobs near me,” or perform searches for industry-specific keywords. The search results can include reviews from Glassdoor or other companies, along with the details of what skills the hiring company is looking to acquire.

As this is a relatively new development, what the system will mean is still an open question. To help, members from the Forbes Coaches Council offer their analysis on how the search system will impact candidates or companies. Here’s what they said…

5. Expect competition to increase.

Google jumping into the job search market may make it easier than ever to apply for a role online. For companies, this could likely tax the already strained-ATS system, and unless fixed, could mean many more resumes falling into that “black hole.” For candidates, competition might be steeper than ever, which means networking will be even more important to job search success. – Virginia Franco

10. Understanding keywords and trending topics will be essential.

Since Google’s AI is based on crowd-gathered metrics, the importance of keywords and understanding trending topics is essential for both employers and candidates. Standing out from the crowd or getting relevant results will be determined by how well you speak the expected language of the AI. Optimizing for the search engine’s results pages will make or break your search for a job or candidate. – Maurice Evans, IGROWyourBiz, Inc

Also see:

In Unilever’s radical hiring experiment, resumes are out, algorithms are in — from foxbusiness.com by

Excerpt:

Before then, 21-year-old Ms. Jaffer had filled out a job application, played a set of online games and submitted videos of herself responding to questions about how she’d tackle challenges of the job. The reason she found herself in front of a hiring manager? A series of algorithms recommended her.

The Future of HR: Is it Dying? — from hrtechnologist.com by Rhucha Kulkarni

Excerpt (emphasis DSC):

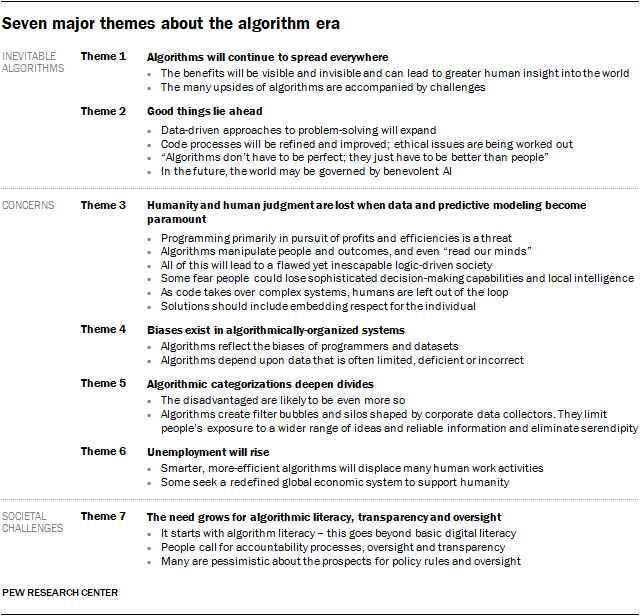

The debate is on, whether man or machine will win the race, as they are pitted against each other in every walk of life. Experts are already worried about the social disruption that is inevitable, as artificial intelligence (AI)-led robots take over the jobs of human beings, leaving them without livelihoods. The same is believed to happen to the HR profession, says a report by Career Builder. HR jobs are at threat, like all other jobs out there, as we can expect certain roles in talent acquisition, talent management, and mainstream business being automated over the next 10 years. To delve deeper into the imminent problem, Career Builder carried out a study of 719 HR professionals in the private sector, specifically looking for the rate of adoption of emerging technologies in HR and what HR professionals perceived about it.

The change is happening for real, though different companies are adopting technologies at varied paces. Most companies are turning to the new-age technologies to help carry out talent acquisition and management tasks that are time-consuming and labor-intensive.

From DSC:

Are you aware that if you apply for a job at many organizations nowadays, there is a significant chance that no human will ever review your resume? Were you aware that an Applicant Tracking System (ATS) will likely siphon off and filter out your resume unless it contains exactly the right keywords, mentioned the optimal number of times?

And were you aware that many advisors assert that you should use a 1-page resume, or a 2-page resume at most? Well…assuming that you have to cut heavily to get down to 1-2 pages, how does that editing help you get past the ATSs out there? When you significantly reduce your resume’s size/information, you hack out numerous words that the ATS may be scanning for. (BTW, advisors recommend creating a Wordle, i.e., a word cloud, from the job description to ascertain the likely keywords; but still, you don’t know which exact keywords the ATS will be looking for in your specific case/job application, or how many times to use them. Numerous words can be of similar size in the resulting Wordle graphic…so is that 1-2 page resume helping you or hurting you when you can only submit 1 resume for a position/organization?)
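For the more technically inclined, here is a rough sketch of the word-counting idea behind that Wordle advice. It is only an illustration under my own assumptions: the function names (`top_keywords`, `keyword_coverage`), the tiny stopword list, and the sample text are all made up, and no real ATS is claimed to work this way. It counts the most frequent words in a job description and then reports how often each one shows up in a resume.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list; a real one would be much longer.
STOPWORDS = {"a", "an", "and", "the", "with", "of", "to", "in", "for", "or", "is"}


def top_keywords(text, n=10):
    """Return the n most frequent non-stopword words as (word, count) pairs."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS)
    return counts.most_common(n)


def keyword_coverage(job_description, resume, n=10):
    """Map each top job-description keyword to how often it appears in the resume."""
    resume_counts = Counter(re.findall(r"[a-z]+", resume.lower()))
    return {word: resume_counts[word] for word, _ in top_keywords(job_description, n)}


if __name__ == "__main__":
    # Made-up sample text, purely for illustration.
    job_description = (
        "Python developer with SQL experience. "
        "Python and SQL required; Python testing a plus."
    )
    resume = "Built Python services and wrote Python tests."
    for word, hits in keyword_coverage(job_description, resume, n=2).items():
        print(f"{word}: appears {hits} time(s) in the resume")
```

Note what the sketch cannot tell you: which of those frequent words a given employer's ATS actually screens for, or how many mentions it expects. That is the same uncertainty described above.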

Vendors are hailing ATSs as major productivity boosters for HR departments…and that might be true in some cases. But my question is, at what cost?

I still believe that humans are better than software/algorithms at making judgement calls. Perhaps I’m giving hiring managers too much credit, but for now I’d rather have a human being make the call. I want a pair of human eyeballs to scan my resume, not a (potentially) narrowly defined algorithm. A human being might see transferable skills better than a piece of code can.

Just so you know…in light of these keyword-based means of passing through the first layer of filtering, people are now playing games with their resumes and are often stretching the truth — if not outright lying:

Excerpt (emphasis DSC):

Employer Applicant Tracking Systems Expect an Exact Match

Most companies use some form of applicant tracking system (ATS) to take in résumés, sort through them, and narrow down the applicant pool. With the average job posting getting more than 100 applicants, recruiters don’t want to go bleary-eyed sorting through them. Instead, they let the ATS do the dirty work by telling it to pass along only the résumés that match their specific requirements for things like college degrees, years of experience, and salary expectations. The result? Job seekers have gotten wise to the finicky nature of the technology and are lying on their résumés and applications in hopes of making the cut.

From DSC:

I don’t see this as being very helpful. But perhaps that’s because I don’t like playing games with people and/or with other organizations. I’m not a game player. What you see is what you get. I’ll be honest and transparent about what I can — and can’t — deliver.

But students, you should know that these ATSs are in place. Those of us in higher education should know about them too, as many of us are being negatively impacted by the current landscape within higher education.

Also see:

Why Your Approach To Job Searching Is Failing — from forbes.com by Jeanna McGinnis

Excerpt:

Is Your Resume ATS Friendly?

Did you know that an ATS (applicant tracking system) will play a major role in whether or not your resume is selected for further review when you’re applying to opportunities through online job boards?

It’s true. When you apply to a position a company has posted online, a human usually isn’t the first to review your resume, a computer program is. Scouring your resume for keywords, terminology and phrases the hiring manager is targeting, the program will toss your resume if it can’t understand the content it’s reading. Basically, your resume doesn’t stand a chance of making it to the next level if it isn’t optimized for ATS.

To ensure your resume makes it past the evil eye of ATS, format your resume correctly for applicant tracking programs, target it to the opportunity and check for spelling errors. If you don’t, you’re wasting your time applying online.