AWS unveils ‘Transcribe’ and ‘Translate’ machine learning services — from business-standard.com

Excerpts:

- Amazon “Transcribe” provides grammatically correct transcriptions of audio files to allow audio data to be analyzed, indexed and searched.

- Amazon “Translate” provides natural sounding language translation in both real-time and batch scenarios.

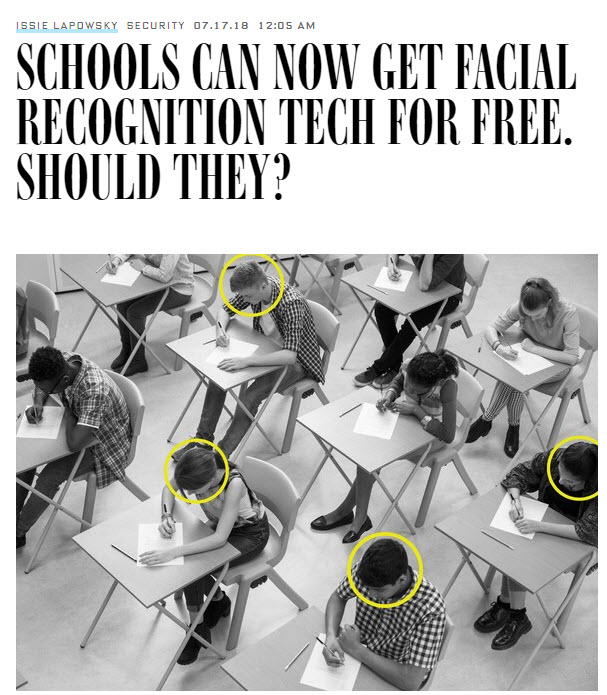

Google’s ‘secret’ smart city on Toronto’s waterfront sparks row — from bbc.com by Robin Levinson-King BBC News, Toronto

Excerpt:

The project was commissioned by the publicly funded organisation Waterfront Toronto, who put out calls last spring for proposals to revitalise the 12-acre industrial neighbourhood of Quayside along Toronto’s waterfront.

Prime Minister Justin Trudeau flew down to announce the agreement with Sidewalk Labs, which is owned by Google’s parent company Alphabet, last October, and the project has received international attention for being one of the first smart-cities designed from the ground up.

But five months later, few people have actually seen the full agreement between Sidewalk and Waterfront Toronto.

As council’s representative on Waterfront Toronto’s board, Mr Minnan-Wong is the only elected official to actually see the legal agreement in full. Not even the mayor knows what the city has signed on for.

“We got very little notice. We were essentially told ‘here’s the agreement, the prime minister’s coming to make the announcement,’” he said.

“Very little time to read, very little time to absorb.”

Now, his hands are tied – he is legally not allowed to comment on the contents of the sealed deal, but he has been vocal about his belief it should be made public.

“Do I have concerns about the content of that agreement? Yes,” he said.

“What is it that is being hidden, why does it have to be secret?”

From DSC:

Google needs to be very careful here. Increasingly these days, our trust in Google (and in other large tech companies) is at stake.

Addendum on 4/16/18 with thanks to Uros Kovacevic for this resource:

Human lives saved by robotic replacements — from injuryclaimcoach.com

Excerpt:

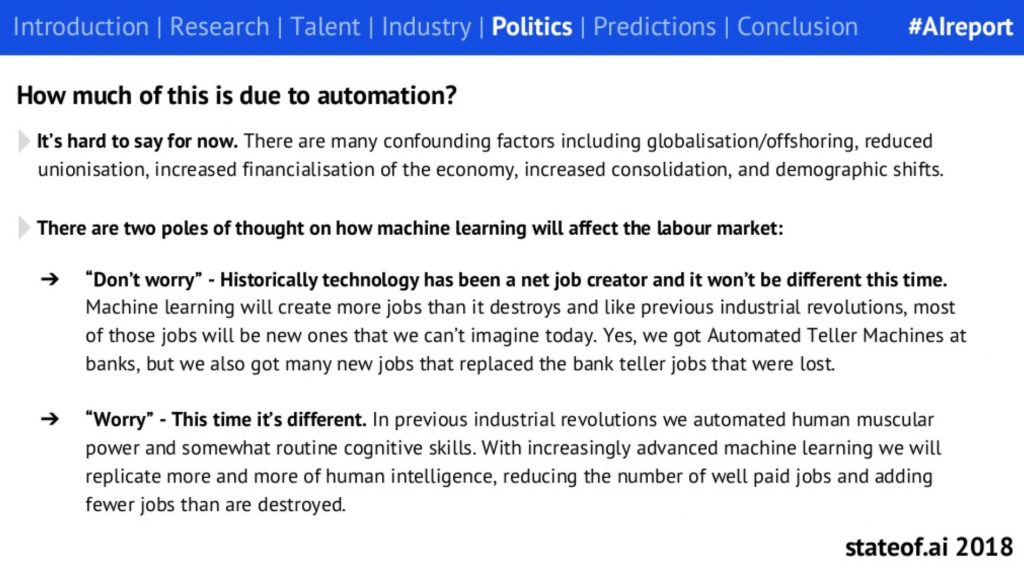

For academics and average workers alike, the prospect of automation provokes concern and controversy. As the American workplace continues to mechanize, some experts see harsh implications for employment, including the loss of 73 million jobs by 2030. Others maintain more optimism about the fate of the global economy, contending technological advances could grow worldwide GDP by more than $1.1 trillion in the next 10 to 15 years. Whatever we make of these predictions, there’s no question automation will shape the economic future of the nation – and the world.

But while these fiscal considerations are important, automation may positively affect an even more essential concern: human life. Every day, thousands of Americans risk injury or death simply by going to work in dangerous conditions. If robots replaced them, could hundreds of lives be saved in the years to come?

In this project, we studied how many fatal injuries could be averted if dangerous occupations were automated. To do so, we analyzed which fields are most deadly and the likelihood of their automation according to expert predictions. To see how automation could save Americans’ lives, keep reading.

Also related to this item is:

How AI is improving the landscape of work — from forbes.com by Laurence Bradford

Excerpts:

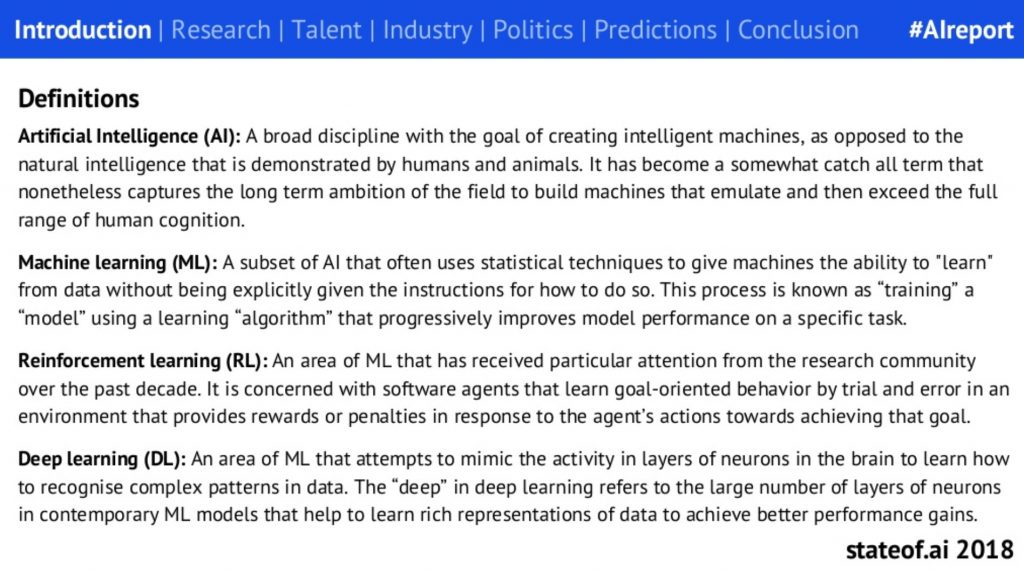

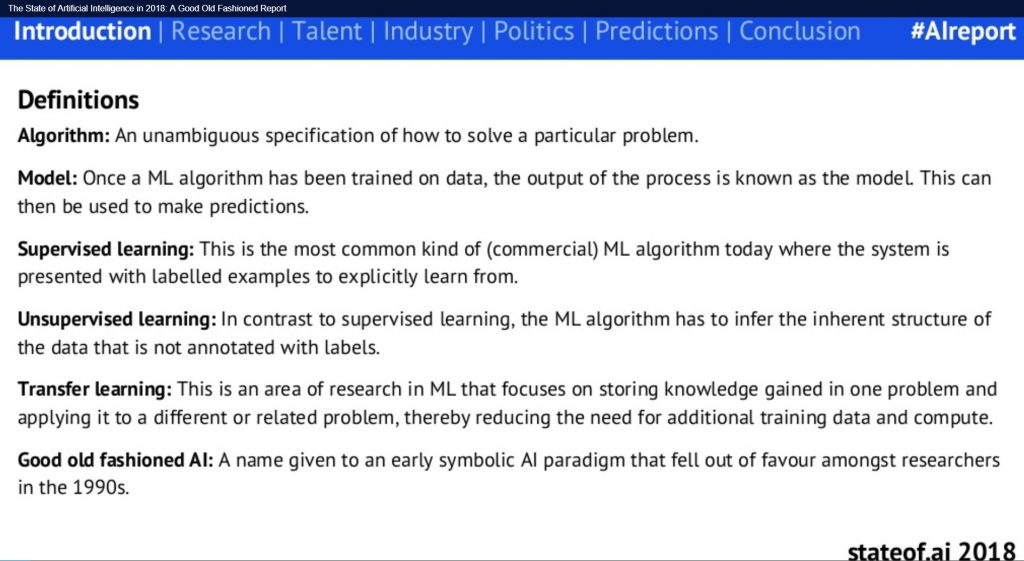

There have been a lot of sci-fi stories written about artificial intelligence. But now that it’s actually becoming a reality, how is it really affecting the world? Let’s take a look at the current state of AI and some of the things it’s doing for modern society.

- Creating New Technology Jobs

- Using Machine Learning To Eliminate Busywork

- Preventing Workplace Injuries With Automation

- Reducing Human Error With Smart Algorithms

From DSC:

This is clearly a pro-AI piece. Not all uses of AI are beneficial, but this article mentions several use cases where AI can make positive contributions to society.

It’s About Augmented Intelligence, not Artificial Intelligence — from informationweek.com

The adoption of AI applications isn’t about replacing workers but helping workers do their jobs better.

From DSC:

This article is also a pro-AI piece. But again, not all uses of AI are beneficial. We need to be aware of — and involved in — what is happening with AI.

Investing in an Automated Future — from clomedia.com by Mariel Tishma

Employers recognize that technological advances like AI and automation will require employees with new skills. Why are so few investing in the necessary learning?