The Age of AI: How Will In-house Law Departments Run in 10 Years? — from accdocket.com by Elizabeth Colombo

Excerpt:

2029 may feel far away right now, but all of this makes me wonder what in-house law might look like in 10 years. What will in-house law be like in an age of artificial intelligence (AI)? This article will look at how in-house law may be different in 10 years, focusing largely on anticipated changes to contract review and negotiation, and the workplace.

Also see:

A Primer on Using Artificial Intelligence in the Legal Profession — from jolt.law.harvard.edu by Lauri Donahue (2018)

Excerpt (emphasis DSC):

How Are Lawyers Using AI?

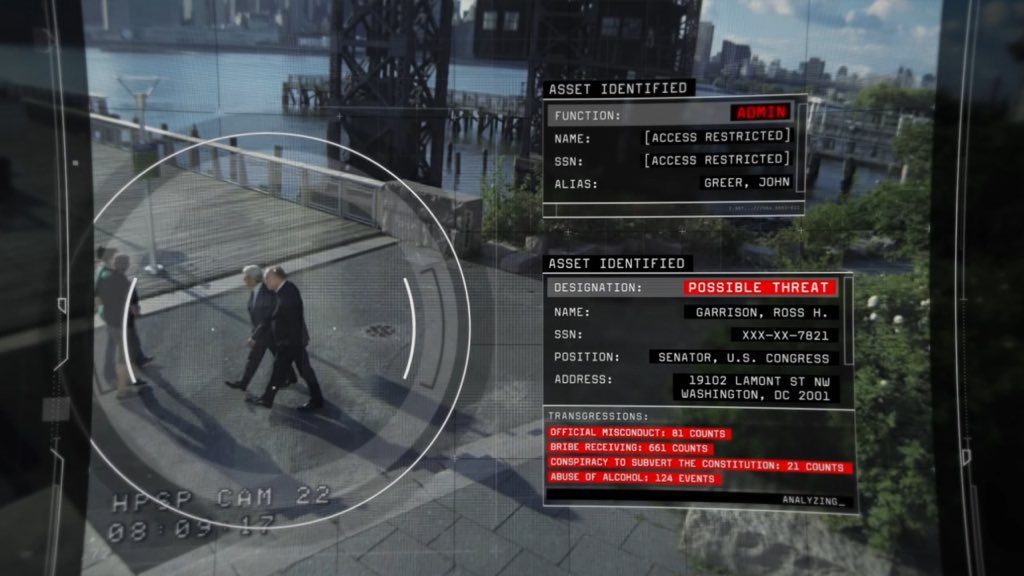

Lawyers are already using AI to do things like reviewing documents during litigation and due diligence, analyzing contracts to determine whether they meet pre-determined criteria, performing legal research, and predicting case outcomes.

…

Document Review

Analyzing Contracts

Legal Research

Predicting Results

Lawyers are often called upon to predict the future: If I bring this case, how likely is it that I’ll win — and how much will it cost me? Should I settle this case (or take a plea), or take my chances at trial? More experienced lawyers are often better at making accurate predictions, because they have more years of data to work with.

However, no lawyer has complete knowledge of all the relevant data.

Because AI can access more of the relevant data, it can be better than lawyers at predicting the outcomes of legal disputes and proceedings, and thus at helping clients make decisions. For example, a London law firm used data on the outcomes of 600 cases over 12 months to create a model for the viability of personal injury cases. Indeed, trained on 200 years of Supreme Court records, an AI is already better than many human experts at predicting SCOTUS decisions.