From DSC:

When I turned on the TV the other day, our local news station was playing a piece re: the closing of several stores in Michigan, some within our area. Some of the national retail stores/chains mentioned were:

- Macy’s

- Macy’s closing 100 stores, including 4 in Michigan

Excerpt:

Four Macy’s stores in Michigan are permanently closing in a series of company cuts expected to cost 6,200 jobs. Macy’s announced 68 of the 100 stores it plans to shutter Wednesday, according to CNBC. On the list is the Macy’s at Lakeview Square Mall in Battle Creek. CNBC reports the store opened in 1983 and employs 51 associates. Also on the chopping block is the Macy’s in Lansing, Westland and the Eastland Center in Harper Woods. All four Michigan stores are slated to close by the end of 2017.

- Sears and Kmart closing 150 stores — from money.cnn.com by Paul La Monica

Sears is shutting down 150 more stores, yet another sign of how tough it is for former kings of the retail industry to compete in a world now dominated by Amazon.


- Sears

- Internet is the new anchor: Woodland Mall Sears closing

Big box and anchor stores a vanishing species in West Michigan

Excerpt:

Despite the best economy in a decade and a nearly 4 percent increase in consumer spending this holiday, the kind of retailers that used to be the draws for shopping malls and plazas are feeling the continuing impact of the internet. The most notable recent victim of the trend is the Sears that has served as an anchor store at Woodland Mall for decades. “We hear rumors every week about what’s going on, but we don’t want to hear that — we’re working there, we don’t want to hear that kind of thing. We didn’t think that was going to happen to us. We were doing pretty good,” said 52-year-old Marty Kruizenga, who worked at the Sears Automotive at Woodland Mall. He was told Wednesday morning his store was closing.


- The Limited

- The Limited just shut all of its stores — from money.cnn.com by Jackie Wattles

Excerpt (emphasis DSC):

American malls just got emptier. The Limited, a once-popular women’s clothing brand that offers casual attire and workwear, no longer has any storefronts. On Saturday [1/7/17], a message on the store’s website read, “We’re sad to say that all The Limited stores nationwide have officially closed their doors. But this isn’t goodbye.” The website will still be up and running and will continue to ship nationwide, the company said.

…

The Limited is among a long list of brick-and-mortar retailers that once thrived in malls and strip shopping centers — but are now suffering at the hands of digital commerce giants like Amazon and fast fashion stores such as H&M and Forever 21.


- And another chain that I don’t recall…

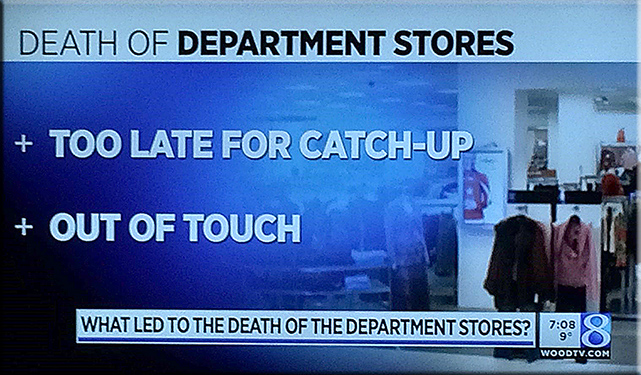

Here’s a snapshot I took of the television screen at the end of their piece:

The warnings were there, but people didn’t want to address them:

Amazon is taking an increasing share of the US apparel market, according to Morgan Stanley.

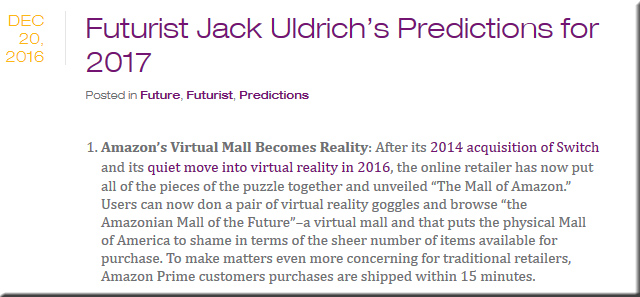

Also regarding Amazon, see this interesting prediction from Jack Uldrich:

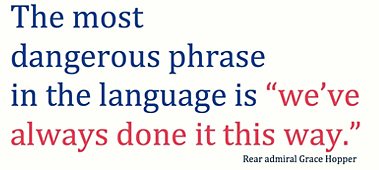

Below is a quote from a Forbes.com article entitled “Here’s What’s Wrong With Department Stores”:

Are Department Stores Dead? Not yet. But they could kill themselves, under the weight of “we’ve always done it this way”. Tweaks in omni-channel strategy aren’t going to be enough to address the fundamental issues at department stores. Not with the way these trends are heading.

Along the lines of the above items, many of us can remember the Blockbuster stores in our areas closing not that long ago, blown out of the water by Netflix.

Although there are several lines of thought that could be pursued here (one being the loss of jobs, especially for our students, many of whom work in retail), some of the key questions that come to my mind are:

- Could this kind of widespread closing of brick-and-mortar facilities happen within higher education?


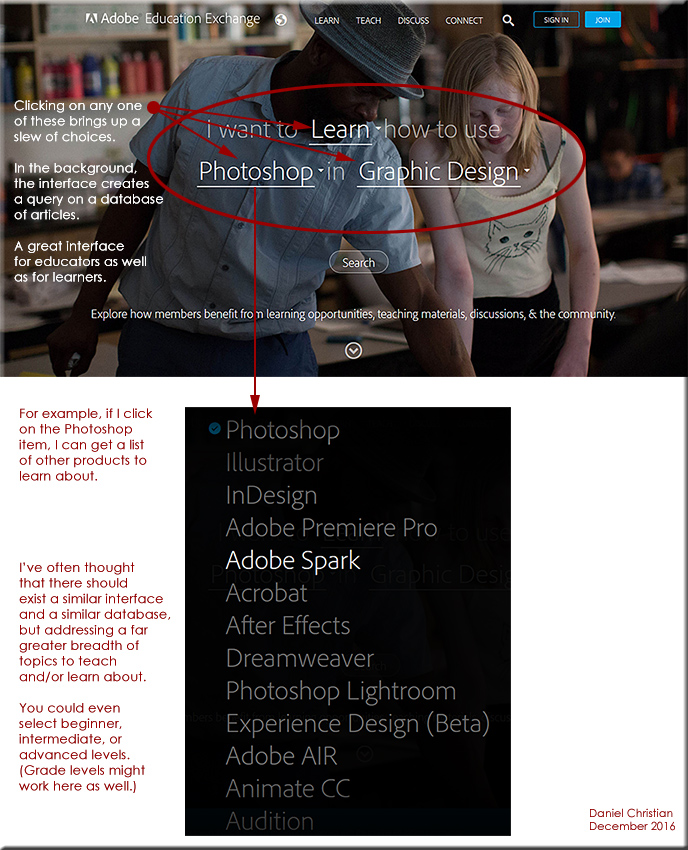

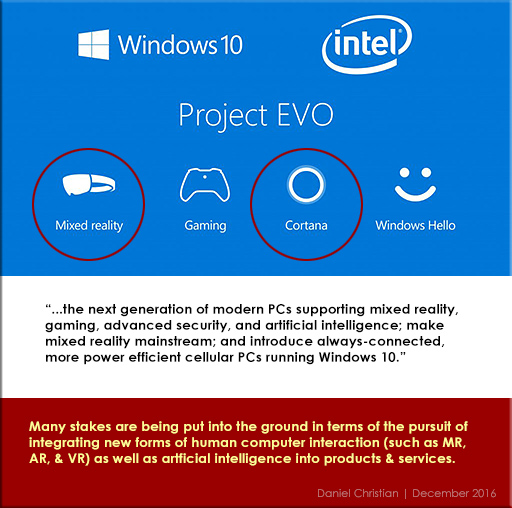

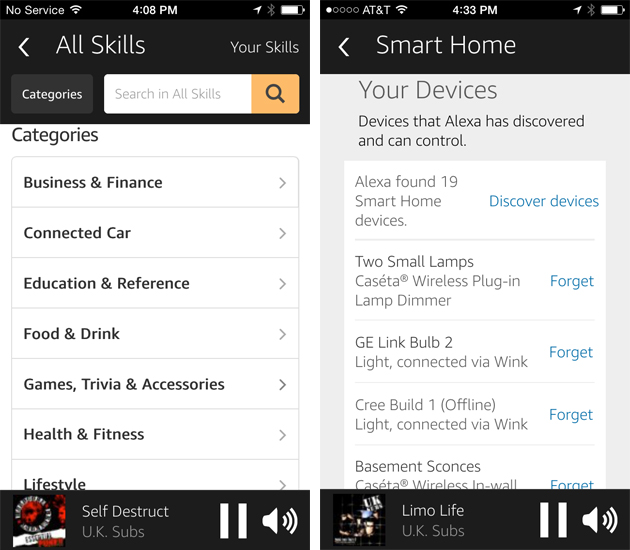

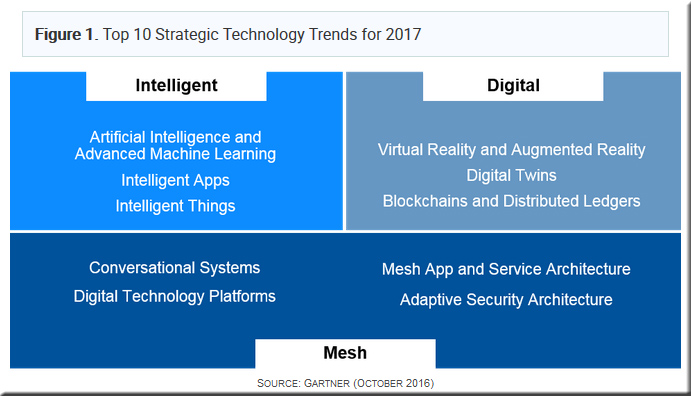

- With the advent of artificial intelligence and cognitive computing, will the innovations taking place on the internet blow away what’s happening in face-to-face (F2F) classrooms? Will Thomas Frey’s prediction prove true: that by 2030, the largest company on the internet will be an education-based company that we haven’t heard of yet?

(NOTE: “Frey doesn’t go so far as to argue education bots will replace traditional schooling outright. He sees them more as a supplement, perhaps as a kind of tutor.”)

- Or, because people enjoy learning together in an F2F environment, will F2F classrooms augment what they are doing with what’s available online/digitally?

- Or will the discussion revolve less around online vs. F2F and more around how innovative and responsive one’s institution is?

Also relevant/see:

Attention University Presidents: A Press Release From the Near Future — from futurist Jack Uldrich

(Emphasis added below by DSC)

(Editor’s Note: Change is difficult. This is especially true in organizations that have heretofore been immune to the broader forces of disruption–such as institutions of higher learning. To shake presidents, administrators, and faculty out of their stupor I have drafted the following fictional press release. I encourage all university and college presidents and their boards to read it and then discuss how they can–and must–adapt in order to remain competitive in the future.)

PRESS RELEASE (Fictional Scenario: For Internal Discussion Only)

(Note: All links in the press release highlight real advances in the field of higher education).

State College to Close at End of 2021-2022 Academic Year

Washington, DC – December 16, 2021 — State College, one of the country’s leading public universities, has decided to cease academic operations at the end of the 2021-22 school year.

rest of fictional press release here –>

Last comment from DSC:

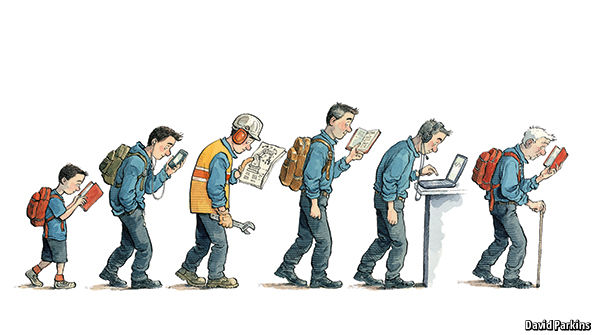

I don’t post this to be a fearmonger. Rather, I post it so that those of us working within higher education will take some time to reflect on this situation, because we need to be far more responsive to change than we currently are. Given the increasingly rapid pace of change in our world today, people will have to keep reinventing themselves. The difference in the near future will be how many times people have to reinvent themselves and how quickly they will need to do it. They won’t be able to take 2-4 years off to do it.

Let’s not get blown out of the water by some alternative. Let’s respond while we still have the chance. Let’s be in touch with the changing landscapes and needs out there.

Addendums:

- Amazon’s aggressive warehouse and shipping strategy is paying off — from marketwatch.com by Tonya Garcia

- Department store sales fall 11th straight year amid online onslaught — from marketwatch.com by Jeffry Bartash

Traditional brick-and-mortar stores under siege, lose more ground


- Ominous prediction says JCPenney could close 30% of its stores within 2 years — from businessinsider.com by Hayley Peterson

Excerpt:

JCPenney could close up to 30% of its stores, or roughly 300 of its more than 1,000 locations, within the next two years, according to a Cowen & Co. report. The department-store chain has largely avoided mass store closings in recent years, but the rise of e-commerce is now forcing the company to reassess its store base, Cowen & Co. analyst Oliver Chen told Business Insider following a meeting with JCPenney management.

- An apt quote from “Obama’s Education Dept Issues Last Hurrah: A National Edtech Plan for Higher Education” — from edsurge.com by Jeff Young & Tony Wan

Colleges need to adapt to meet the changing demographics and needs of students, rather than expect them to conform to a tradition-loving system.

“Unless we become more nimble in our approach and more scalable in our solutions, we will miss out on an opportunity to embrace and serve the majority of students who will need higher education and postsecondary learning,” says the report. Later it underscores that “higher education has never mattered so much to those who seek it. It drives social mobility, energizes our economy, and underpins our democracy.”

- Equipping people to stay ahead of technological change — from economist.com

It is easy to say that people need to keep learning throughout their careers. The practicalities are daunting.

When education fails to keep pace with technology, the result is inequality. Without the skills to stay useful as innovations arrive, workers suffer—and if enough of them fall behind, society starts to fall apart. That fundamental insight seized reformers in the Industrial Revolution, heralding state-funded universal schooling. Later, automation in factories and offices called forth a surge in college graduates. The combination of education and innovation, spread over decades, led to a remarkable flowering of prosperity.

Today robotics and artificial intelligence call for another education revolution. This time, however, working lives are so lengthy and so fast-changing that simply cramming more schooling in at the start is not enough. People must also be able to acquire new skills throughout their careers.

- Amazon is going to kill more American jobs than China did — from marketwatch.com

Millions of retail jobs are threatened as Amazon’s share of online purchases keeps climbing

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)