35 Ways Real People Are Using A.I. Right Now — from nytimes.com by Francesca Paris and Larry Buchanan

From DSC:

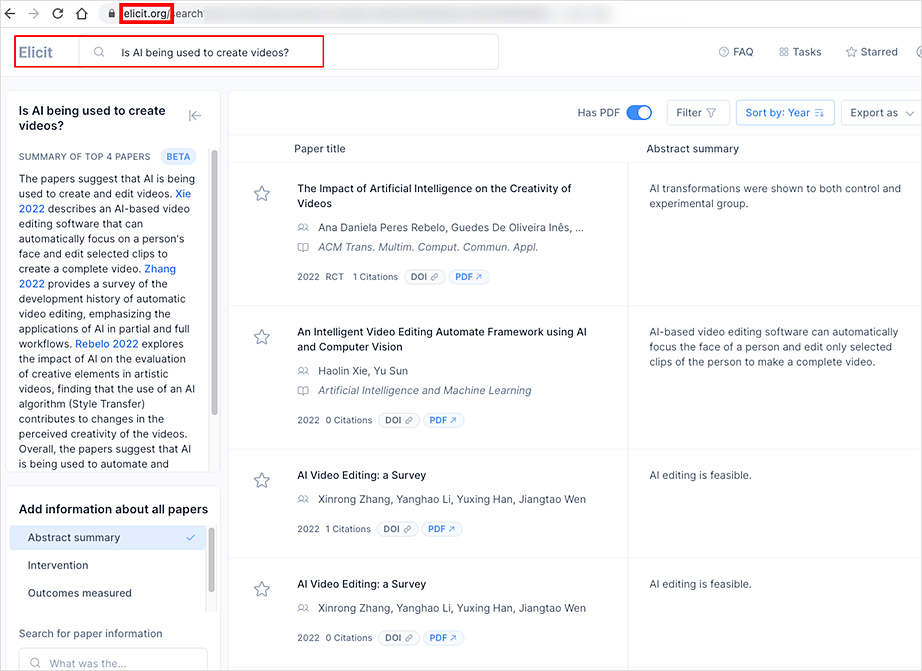

It was interesting to see how people are using AI these days. The article mentioned uses ranging from planning gluten-free (GF) meals to planning gardens, workouts, and more. Faculty members, staff, students, researchers, and educators in general may find Elicit, Scholarcy, and Scite to be useful tools. I entered a question at Elicit, and it looks interesting. I like its interface, which lets me quickly re-sort things.


There Is No A.I. — from newyorker.com by Jaron Lanier

There are ways of controlling the new technology—but first we have to stop mythologizing it.

Excerpts:

If the new tech isn’t true artificial intelligence, then what is it? In my view, the most accurate way to understand what we are building today is as an innovative form of social collaboration.

…

The new programs mash up work done by human minds. What’s innovative is that the mashup process has become guided and constrained, so that the results are usable and often striking. This is a significant achievement and worth celebrating—but it can be thought of as illuminating previously hidden concordances between human creations, rather than as the invention of a new mind.

Resource per Steve Nouri on LinkedIn