From DSC:

The articles below demonstrate why the need for ethics, morals, policies, & serious reflection about what kind of future we want has never been greater!

What Ethics Should Guide the Use of Robots in Policing? — from nytimes.com

11 Police Robots Patrolling Around the World — from wired.com

Police use of robot to kill Dallas shooting suspect is new, but not without precursors — from techcrunch.com

What skills will human workers need when robots take over? A new algorithm would let the machines decide — from qz.com

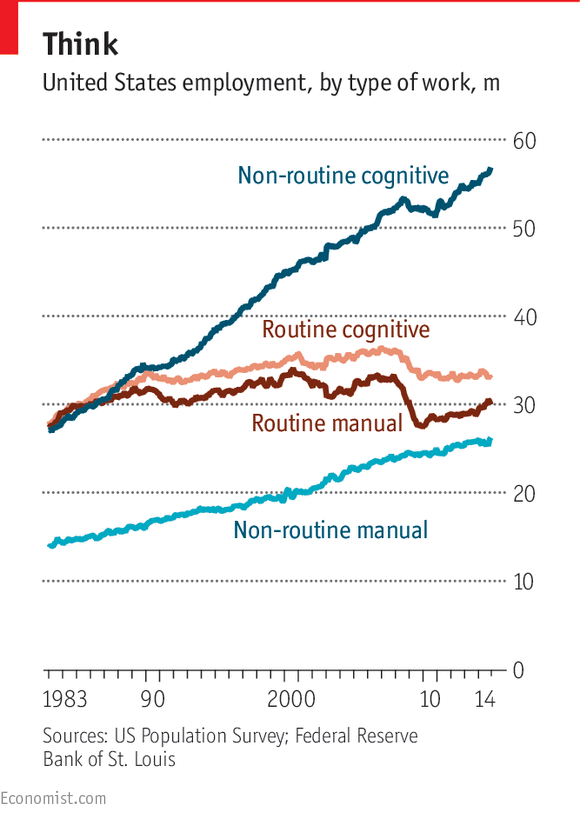

The impact on jobs | Automation and anxiety | Will smarter machines cause mass unemployment? — from economist.com

VRTO Spearheads Code of Ethics on Human Augmentation — from vrfocus.com

A code of ethics is being developed for both VR and AR industries.

Google and Microsoft Want Every Company to Scrutinize You with AI — from technologyreview.com by Tom Simonite

The tech giants are eager to rent out their AI breakthroughs to other companies.

U.S. Public Wary of Biomedical Technologies to ‘Enhance’ Human Abilities — from pewinternet.org by Cary Funk, Brian Kennedy and Elizabeth Podrebarac Sciupac

Americans are more worried than enthusiastic about using gene editing, brain chip implants and synthetic blood to change human capabilities

Human Enhancement — from pewinternet.org by David Masci

The Scientific and Ethical Dimensions of Striving for Perfection

Robolliance focuses on autonomous robotics for security and surveillance — from robohub.org by Kassie Perlongo

Company Unveils Plans to Grow War Drones from Chemicals — from interestingengineering.com

The Army’s Self-Driving Trucks Hit the Highway to Prepare for Battle — from wired.com

Russian robots will soon replace human soldiers — from interestingengineering.com

Unmanned combat robots beginning to appear — from therobotreport.com

Law-abiding robots? What should the legal status of robots be? — from robohub.org by Anders Sandberg

Excerpt:

News media are reporting that the EU is considering turning robots into electronic persons with rights and apparently industry spokespeople are concerned that Brussels’ overzealousness could hinder innovation.

The report is far more sedate. It is a draft report, not a bill, with a mixed bag of recommendations to the Commission on Civil Law Rules on Robotics in the European Parliament. It will be years before anything is decided.

Nevertheless, it is interesting reading when considering how society should adapt to increasingly capable autonomous machines: what should the legal and moral status of robots be? How do we distribute responsibility?

A remarkable opening

The report begins its general principles with an eyebrow-raising paragraph:

whereas, until such time, if ever, that robots become or are made self-aware, Asimov’s Laws must be regarded as being directed at the designers, producers and operators of robots, since those laws cannot be converted into machine code;

It is remarkable because first it alludes to self-aware robots, presumably moral agents – a pretty extreme and currently distant possibility – then brings up Isaac Asimov’s famous but fictional laws of robotics and makes a simultaneously insightful and wrong-headed claim.

Robots are getting a sense of self-doubt — from popsci.com by Dave Gershgorn

Introspection is the key to growth

Excerpt:

That murmur is self-doubt, and its presence helps keep us alive. But robots don’t have this instinct—just look at the DARPA Robotics Challenge. But for robots and drones to exist in the real world, they need to realize their limits. We can’t have a robot flailing around in the darkness, or trying to bust through walls. In a new paper, researchers at Carnegie Mellon are working on giving robots introspection, or a sense of self-doubt. By predicting the likelihood of their own failure through artificial intelligence, robots could become a lot more thoughtful, and safer as well.

Scientists Create Successful Biohybrid Being Using 3-D Printing and Genetic Engineering — from inc.com by Lisa Calhoun

Scientists genetically engineered and 3-D-printed a biohybrid being, opening the door further for lifelike robots and artificial intelligence

Excerpt:

If you met this lab-created critter over your beach vacation, you’d swear you saw a baby ray. In fact, the tiny, flexible swimmer is the product of a team of diverse scientists. They have built the most successful artificial animal yet. This disruptive technology opens the door much wider for lifelike robots and artificial intelligence.

From DSC:

I don’t think I’d use the term disruptive here, though that may turn out to be the case. The word doesn’t come close to conveying the weight and seriousness of this kind of activity, nor does it point out where this kind of thing could lead.

Pokemon Go’s digital popularity is also warping real life — from finance.yahoo.com by Ryan Nakashima and David Hamilton

Excerpt (emphasis DSC):

Todd Richmond, a director at the Institute for Creative Technologies at the University of Southern California, says a big debate is brewing over who controls digital assets associated with real world property.

“This is the problem with technology adoption — we don’t have time to slowly dip our toe in the water,” he says. “Tenants have had no say, no input, and now they’re part of it.”

From DSC:

I greatly appreciate what Pokémon Go has been able to achieve, and although I haven’t played it, I think it’s great (great for AR, great for people’s health, great for the future of play, etc.)! So there are many positives to it. But the highlighted portion above is not something we want to have to say occurred with artificial intelligence, cognitive computing, some types of genetic engineering, corporations tracking/using your personal medical information or data, the development of biased algorithms, etc.

Right now, artificial intelligence is the only thing that matters: Look around you — from forbes.com by Enrique Dans

Excerpts:

If there’s one thing the world’s most valuable companies agree on, it’s that their future success hinges on artificial intelligence.

…

In short, CEO Sundar Pichai wants to put artificial intelligence everywhere, and Google is marshaling its army of programmers into the task of remaking itself as a machine learning company from top to bottom.

…

Microsoft won’t be left behind this time. In a great interview a few days ago, its CEO, Satya Nadella, says he intends to overtake Google in the machine learning race, arguing that the company’s future depends on it, and outlining a vision in which human and machine intelligence work together to solve humanity’s problems. In other words, real value is created when robots work for people, not when they replace them.

…

And Facebook? The vision of its founder, Mark Zuckerberg, of the company’s future, is one in which artificial intelligence is all around us, carrying out or helping to carry out just about any task you can think of…

The links I have included in this column have been carefully chosen as recommended reading to support my firm conviction that machine learning and artificial intelligence are the keys to just about every aspect of life in the very near future: every sector, every business.

10 jobs that A.I. and chatbots are poised to eventually replace — from venturebeat.com by Felicia Schneiderhan

Excerpt:

If you’re a web designer, you’ve been warned.

Now there is an A.I. that can do your job. Customers can direct exactly how their new website should look. Fancy something more colorful? You got it. Less quirky and more professional? Done. This A.I. is still in a limited beta but it is coming. It’s called The Grid and it came out of nowhere. It makes you feel like you are interacting with a human counterpart. And it works.

Artificial intelligence has arrived. Time to sharpen up those resumes.

Augmented Humans: Next Great Frontier, or Battleground? — from nextgov.com by John Breeden

Excerpt:

It seems like, in general, technology always races ahead of the moral implications of using it. This seems to be true of everything from atomic power to sequencing genomes. Scientists often create something because they can, because there is a perceived need for it, or even by accident as a result of research. Only then does the public catch up and start to form an opinion on the issue.

…

Which brings us to the science of augmenting humans with technology, a process that has so far escaped the public scrutiny and opposition found with other radical sciences. Scientists are not taking any chances, with several yearly conferences already in place as a forum for scientists, futurists and others to discuss the process of human augmentation and the moral implications of the new science.

That said, it seems like those who would normally oppose something like this have remained largely silent.

Google Created Its Own Laws of Robotics — from fastcodesign.com by John Brownlee

Building robots that don’t harm humans is an incredibly complex challenge. Here are the rules guiding design at Google.

Google identifies five problems with artificial intelligence safety — from which-50.com

DARPA is giving $2 million to the person who creates an AI hacker — from futurism.com