Brainyacts #57: Education Tech — from thebrainyacts.beehiiv.com by Josh Kubicki

Excerpts:

Let’s look at some ideas of how law schools could use AI tools like Khanmigo or ChatGPT to support lectures, assignments, and discussions, or use plagiarism detection software to maintain academic integrity.

- Personalized learning

- Virtual tutors and coaches

- Interactive simulations

- Enhanced course materials

- Collaborative learning

- Automated assessment and feedback

- Continuous improvement

- Accessibility and inclusivity

AI Will Democratize Learning — from td.org by Julia Stiglitz and Sourabh Bajaj

Excerpts:

In particular, we’re betting on four trends for AI and L&D.

- Rapid content production

- Personalized content

- Detailed, continuous feedback

- Learner-driven exploration

In a world where only 7 percent of the global population has a college degree, and as many as three quarters of workers don’t feel equipped to learn the digital skills their employers will need in the future, this is the conversation people need to have.

…

Taken together, these trends will change the cost structure of education and give learning practitioners new superpowers. Learners of all backgrounds will be able to access quality content on any topic and receive the ongoing support they need to master new skills. Even small L&D teams will be able to create programs that have both deep and broad impact across their organizations.

The Next Evolution in Educational Technologies and Assisted Learning Enablement — from educationoneducation.substack.com by Jeannine Proctor

Excerpt:

Generative AI is set to play a pivotal role in the transformation of educational technologies and assisted learning. Its ability to personalize learning experiences, power intelligent tutoring systems, generate engaging content, facilitate collaboration, and assist in assessment and grading will significantly benefit both students and educators.

How Generative AI Will Enable Personalized Learning Experiences — from campustechnology.com by Rhea Kelly

Excerpt:

With today’s advancements in generative AI, that vision of personalized learning may not be far off from reality. We spoke with Dr. Kim Round, associate dean of the Western Governors University School of Education, about the potential of technologies like ChatGPT for learning, the need for AI literacy skills, why learning experience designers have a leg up on AI prompt engineering, and more. And get ready for more Star Trek references, because the parallels between AI and Sci Fi are futile to resist.

The Promise of Personalized Learning Never Delivered. Today’s AI Is Different — from the74million.org by John Bailey; with thanks to GSV for this resource

Excerpts:

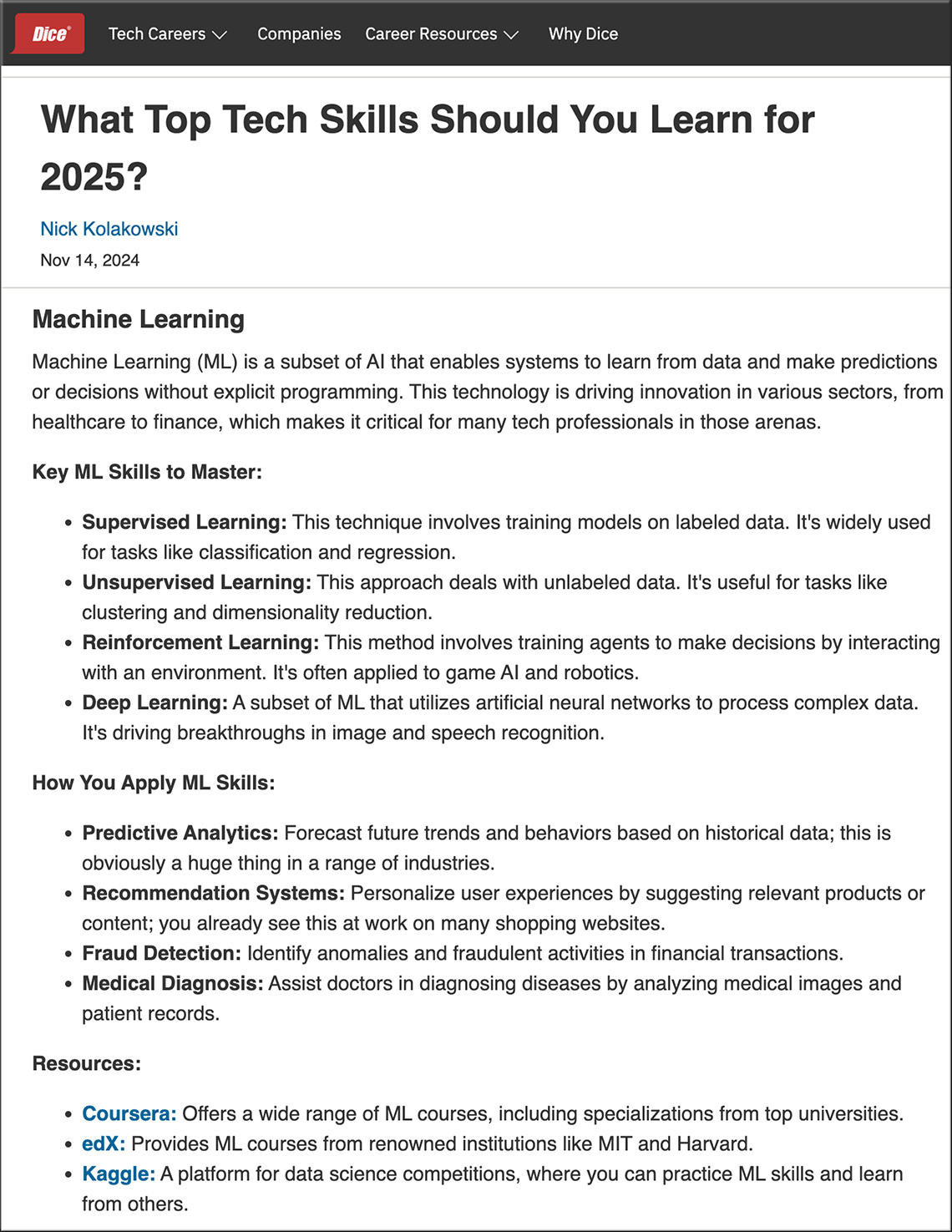

There are four reasons why this generation of AI tools is likely to succeed where other technologies have failed:

- Smarter capabilities

- Reasoning engines

- Language is the interface

- Unprecedented scale

Latest NVIDIA Graphics Research Advances Generative AI’s Next Frontier — from blogs.nvidia.com by Aaron Lefohn

NVIDIA will present around 20 research papers at SIGGRAPH, the year’s most important computer graphics conference.

Excerpt:

NVIDIA today introduced a wave of cutting-edge AI research that will enable developers and artists to bring their ideas to life — whether still or moving, in 2D or 3D, hyperrealistic or fantastical.

Around 20 NVIDIA Research papers advancing generative AI and neural graphics — including collaborations with over a dozen universities in the U.S., Europe and Israel — are headed to SIGGRAPH 2023, the premier computer graphics conference, taking place Aug. 6-10 in Los Angeles.

The papers include generative AI models that turn text into personalized images; inverse rendering tools that transform still images into 3D objects; neural physics models that use AI to simulate complex 3D elements with stunning realism; and neural rendering models that unlock new capabilities for generating real-time, AI-powered visual details.

Also relevant to the item from NVIDIA (above), see:

Unreal Engine’s MetaHuman Creator — with thanks to Mr. Steven Chevalia for this resource

Excerpt:

MetaHuman is a complete framework that gives any creator the power to use highly realistic human characters in any way imaginable.

It includes MetaHuman Creator, a free cloud-based app that enables you to create fully rigged photorealistic digital humans in minutes.