Agentic AI and the New Era of Corporate Learning for 2026 — from hrmorning.com by Carol Warner

That gap creates compliance risk and wasted investment. It leaves HR leaders with a critical question: How do you measure and validate real learning when AI is doing the work for employees?

Designing Training That AI Can’t Fake

Employees often find static slide decks and multiple-choice quizzes tedious, while AI can breeze through them. If employees would rather let AI take training for them, it’s a red flag about the content itself.

One of the biggest risks with agentic AI is disengagement. When AI can complete a task for employees, their incentive to engage disappears unless they understand why the skill matters, Rashid explains. Personalization and context are critical. Training should clearly connect to what employees value most – career mobility, advancement, and staying relevant in a fast-changing market.

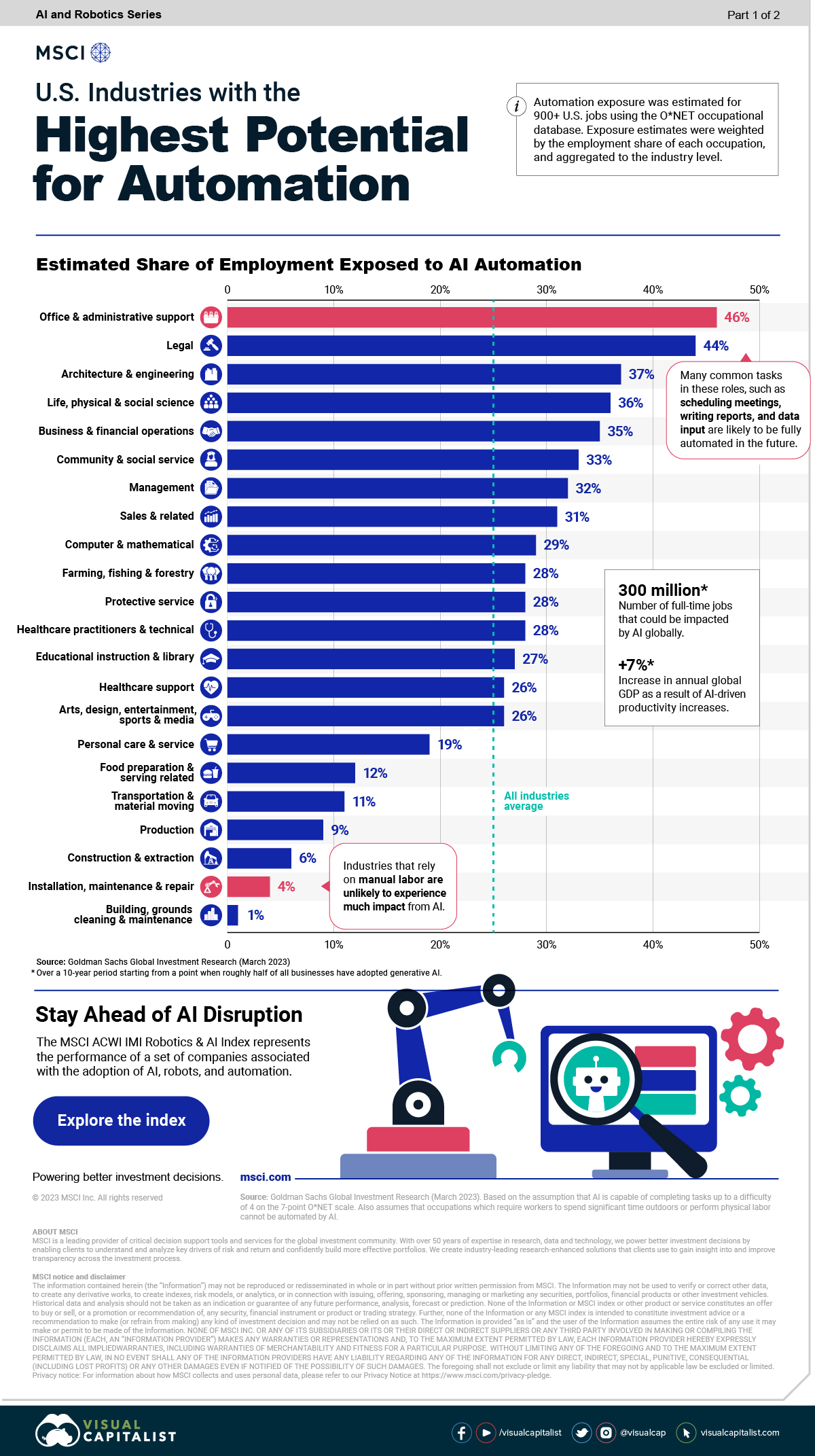

Nearly half of executives believe today’s skills will expire within two years, making continuous learning essential for job security and growth. To make training engaging, Rashid recommends:

- Delivering content in formats employees already consume – short videos, mobile-first modules, interactive simulations, or micro-podcasts that fit naturally into workflows. For frontline workers, this might mean replacing traditional desktop training with mobile content that integrates into their workday.

- Aligning learning with tangible outcomes, like career opportunities or new responsibilities.

- Layering in recognition, such as digital badges, leaderboards, or team shout-outs, to reinforce motivation and progress.

Microsoft 365 Copilot AI agents reach a new milestone — is teamwork about to change? — from windowscentral.com by Adam Hales

Microsoft expands Copilot with collaborative agents in Teams, SharePoint and more to boost productivity and reshape teamwork.

Microsoft is pitching a recent shift of AI agents in Microsoft Teams as more than just smarter assistance. Instead, these agents are built to behave like human teammates inside familiar apps such as Teams, SharePoint, and Viva Engage. They can set up meeting agendas, keep files in order, and even step in to guide community discussions when things drift off track.

…

Unlike tools such as ChatGPT or Claude, which mostly wait for prompts, Microsoft’s agents are designed to take initiative. They can chase up unfinished work, highlight items that still need decisions, and keep projects moving forward. By drawing on Microsoft Graph, they also bring in the right files, past decisions, and context to make their suggestions more useful.

Chris Dede’s comments on LinkedIn re: Aibrary

As an advisor to Aibrary, I am impressed with their educational philosophy, which is based both on theory and on empirical research findings. Aibrary is an innovative approach to self-directed learning that complements academic resources. Expanding our historic conceptions of books, libraries, and lifelong learning to new models enabled by emerging technologies is central to empowering all of us to shape our future.

Also see:

Why AI literacy must come before policy — from timeshighereducation.com by Kathryn MacCallum and David Parsons

When developing rules and guidelines around the uses of artificial intelligence, the first question to ask is whether the university policymakers and staff responsible for implementing them truly understand how learners can meet the expectations they set.

Literacy first, guidelines second, policy third

For students to respond appropriately to policies, they need to be given supportive guidelines that enact these policies. Further, to apply these guidelines, they need a level of AI literacy that gives them the knowledge, skills and understanding required to support responsible use of AI. Therefore, if we want AI to enhance education rather than undermine it, we must build literacy first, then create supportive guidelines. Good policy can then follow.

AI training becomes mandatory at more US law schools — from reuters.com by Karen Sloan and Sara Merken

Sept 22 (Reuters) – At orientation last month, 375 new Fordham Law students were handed two summaries of rapper Drake’s defamation lawsuit against his rival Kendrick Lamar’s record label — one written by a law professor, the other by ChatGPT.

The students guessed which was which, then dissected the artificial intelligence chatbot’s version for accuracy and nuance, finding that it included some irrelevant facts.

The exercise was part of the first-ever AI session for incoming students at the Manhattan law school, one of at least eight law schools now incorporating AI training for first-year students in orientation, legal research and writing courses, or through mandatory standalone classes.