Also see:

Making Windows 11 the most inclusively designed version of Windows yet — from blogs.windows.com by Carolina Hernandez

BlueJeans Video Conferencing Giant to Launch Native Google Glass App for Remote Assistance — from next.reality.news by Adario Strange

Excerpt:

Starting in 2022, Glass Enterprise Edition 2 users will have the option of using a native version of the BlueJeans meeting software.

Like other enterprise AR wearables on the market, the primary use case for the dynamic will be in the realm of remote assistance, in which an expert in a faraway location can see what a Google Glass wearer sees and advise that team member accordingly.

From DSC:

Remote support is also occurring in healthcare. What might “telehealth” morph into?

Tools for Building Branching Scenarios — from christytuckerlearning.com by Christy Tucker

When would you use Twine, Storyline, Rise, or other tools for building branching scenarios? It depends on the project and goals.

Excerpt:

When would you use Twine instead of Storyline or other tools for building branching scenarios? An attendee at one of my recent presentations asked me why I’d bother creating something in Twine rather than just storyboarding directly in Storyline, especially if I was using character images. Whether I would use Twine, Storyline, Rise, or something else depends on the project and the goals.

DC: What doors does this type of real-time translation feature open up for learning? https://t.co/beOXFjGZs9 #learningfromthelivingclassroom #education #K12 #highereducation #training #learning #lifelonglearning #globallearning #languages #translation https://t.co/TV5InkWHwn pic.twitter.com/MGblMzQbBL

— Daniel Christian (he/him/his) (@dchristian5) August 4, 2021

From DSC:

For that matter, what does it open up for #JusticeTech? #Legaltech? #A2J? #Telehealth?

From DSC:

It will be interesting to see, post-COVID-19, how vendors and their platforms continue to develop to allow for even greater degrees of web-based collaboration. I recently saw this item about what Google is doing with its Project Starline. Very interesting indeed. Google is trying to make the other person feel like they are in the same space with you.


Time will tell what occurs in this space, but one does wonder: what will this type of technology do for online learning, hybrid/blended learning, and/or HyFlex learning in the future?

Doubling down on accessibility: Microsoft’s next steps to expand accessibility in technology, the workforce and workplace — from Brad Smith, President of Microsoft

Excerpt:

Accessibility by design

Today, we are announcing a variety of new “accessible by design” features and advances in Microsoft 365, enabling more than 200 million people to build, edit and share documents. Using artificial intelligence (AI) and other advanced technologies, we aim to make more content accessible and as simple and automatic as spell check is today. For example:

More than 1 billion people around the world live with a disability, and at some point, most of us likely will face some type of temporary, situational or permanent disability. The practical impacts are huge.

Addendum on 5/6/21:

Improving Digital Inclusion & Accessibility for Those With Learning Disabilities — from inclusionhub.com by Meredith Kreisa

Learning disabilities must be taken into account during the digital design process to ensure digital inclusion and accessibility for the community. This comprehensive guide outlines common learning disabilities, associated difficulties, accessibility barriers and best practices, and more.

“Learning shouldn’t be something only those without disabilities get to do,” explains Seren Davies, a full stack software engineer and accessibility advocate who is dyslexic. “It should be for everyone. By thinking about digital accessibility, we are making sure that everyone who wants to learn can.”

…

“Learning disability” is a broad term used to describe several specific diagnoses. Dyslexia, dyscalculia, dysgraphia, nonverbal learning disorder, and oral/written language disorder and specific reading comprehension deficit are among the most prevalent.

DC: Yet another reason for Universal Design for Learning’s multiple means of representation/media:

Encourage faculty to presume students are under-connected. Asynchronous, low-bandwidth approaches help give students more flexibility in accessing course content in the face of connectivity challenges.

— as excerpted from campustechnology.com’s article entitled “4 Ways Institutions Can Meet Students’ Connectivity and Technology Needs”

When the Animated Bunny in the TV Show Listens for Kids’ Answers — and Answers Back — from edsurge.com by Rebecca Koenig

Excerpt:

Yet when this rabbit asks the audience, say, how to make a substance in a bottle less goopy, she’s actually listening for their answers. Or rather, an artificially intelligent tool is listening. And based on what it hears from a viewer, it tailors how the rabbit replies.

“Elinor can understand the child’s response and then make a contingent response to that,” says Mark Warschauer, professor of education at the University of California at Irvine and director of its Digital Learning Lab.

AI is coming to early childhood education. Researchers like Warschauer are studying whether and how conversational agent technology—the kind that powers smart speakers such as Alexa and Siri—can enhance the learning benefits young kids receive from hearing stories read aloud and from watching videos.

From DSC:

Looking at the above excerpt, what does this mean for e-learning developers, learning engineers, learning experience designers, instructional designers, trainers, and others? It seems that, for such folks, learning how to use several new tools is on the horizon.

From DSC:

I was reviewing an edition of Dr. Barbara Honeycutt’s Lecture Breakers Weekly, where she wrote:

After an experiential activity, discussion, reading, or lecture, give students time to write the one idea they took away from the experience. What is their one takeaway? What’s the main idea they learned? What do they remember?

This can be written as a reflective blog post or journal entry, or students might post it on a discussion board so they can share their ideas with their colleagues. Or, they can create an audio clip (podcast), video, or drawing to explain their One Takeaway.

From DSC:

This made me think of tools like VoiceThread — where you can leave a voice/audio message, an audio/video-based message, a text-based entry/response, and/or attach other kinds of graphics and files.

That is, a multimedia-based exit ticket. It seems to me that this could work in online as well as blended learning environments.

Addendum on 2/7/21:

How to Edit Live Photos to Make Videos, GIFs & More! — from jonathanwylie.com

Exciting new tools for designers, January 2021 — by Carrie Cousins

Excerpt:

The new year is often packed with resolutions. Make the most of those goals and resolve to design better, faster, and more efficiently with some of these new tools and resources.

Here’s what’s new for designers this month.

From DSC:

I was thinking about projecting images, animation, videos, etc. from a device onto a wall for all in the room to see.

One side of the surface would be more traditional (i.e., a standard drywall-type surface). The other side would be designed to be excellent for projecting images onto and/or for use with Augmented Reality (AR), Mixed Reality (MR), and/or Virtual Reality (VR).

Along these lines, here’s another item related to Human-Computer Interaction (HCI):

Mercedes-Benz debuts dashboard that’s one giant touchscreen — from futurism.com

From DSC:

As we move into 2021, the blistering pace of emerging technologies will likely continue. Technologies such as:

Along the positive lines of this topic, I’ve been reflecting upon how we might be able to use AI in our learning experiences.

For example, when teaching in face-to-face classrooms, and when a lecture recording app like Panopto is being used, could teachers/professors/trainers audibly “insert” main points along the way? This would be similar to what we do with Siri, Alexa, and other personal assistants (“Hey Siri, _____” or “Alexa, _____”).


Pretend a lecture, lesson, or a training session is moving right along. Then the professor, teacher, or trainer says:

Like a verbal version of an HTML tag.

After the recording is done, the AI could locate and call out those “main points” — and create a table of contents for that lecture, lesson, training session, or presentation.

(Alternatively, one could insert a chime/bell/some other sound that the AI scans through later to build the table of contents.)
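To make the verbal-marker idea a bit more concrete, here is a minimal Python sketch of the "scan the recording afterward" step. It assumes the recording has already been turned into timestamped text segments by some speech-to-text service, and it assumes a hypothetical spoken marker phrase, "main point." The names `Segment`, `build_toc`, and `format_time` are illustrative only; they are not part of Panopto, Zoom, Webex, Teams, or any other product's API.

```python
import re
from dataclasses import dataclass

# Hypothetical spoken marker; the exact phrase a presenter would use is an assumption.
MARKER = re.compile(r"\bmain point[,:]?\s*(.+)", re.IGNORECASE)


@dataclass
class Segment:
    start: float  # seconds from the beginning of the recording
    text: str     # transcribed speech for this segment (assumed to come from a speech-to-text step)


def format_time(seconds: float) -> str:
    """Render seconds as H:MM:SS (or M:SS when under an hour) for a table of contents."""
    total = int(seconds)
    h, rem = divmod(total, 3600)
    m, s = divmod(rem, 60)
    return f"{h}:{m:02d}:{s:02d}" if h else f"{m}:{s:02d}"


def build_toc(segments: list[Segment]) -> list[str]:
    """Return one table-of-contents line per segment that contains the spoken marker."""
    toc = []
    for seg in segments:
        match = MARKER.search(seg.text)
        if match:
            # Keep whatever the presenter said after the marker as the entry's label.
            label = match.group(1).strip().rstrip(".")
            toc.append(f"{format_time(seg.start)}  {label}")
    return toc


if __name__ == "__main__":
    transcript = [
        Segment(12.0, "Welcome, everyone, let's get started."),
        Segment(95.5, "Main point: supply and demand set the price."),
        Segment(410.2, "Here is an example from last year's market."),
        Segment(3725.0, "Main point, elasticity measures responsiveness."),
    ]
    for line in build_toc(transcript):
        print(line)
```

A plain phrase like "main point" will also fire on ordinary speech ("the main point of this chart..."), which is one reason a real system might prefer a more distinctive wake phrase or the chime/bell approach, where the scanner looks for a sound rather than words.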

In the digital realm — say when recording something via Zoom, Cisco Webex, Teams, or another application — the same thing could apply.

Wouldn’t this be great for quickly scanning podcasts for the main points? Or for quickly scanning presentations and webinars for the main points?

Anyway, interesting times lie ahead!

From DSC:

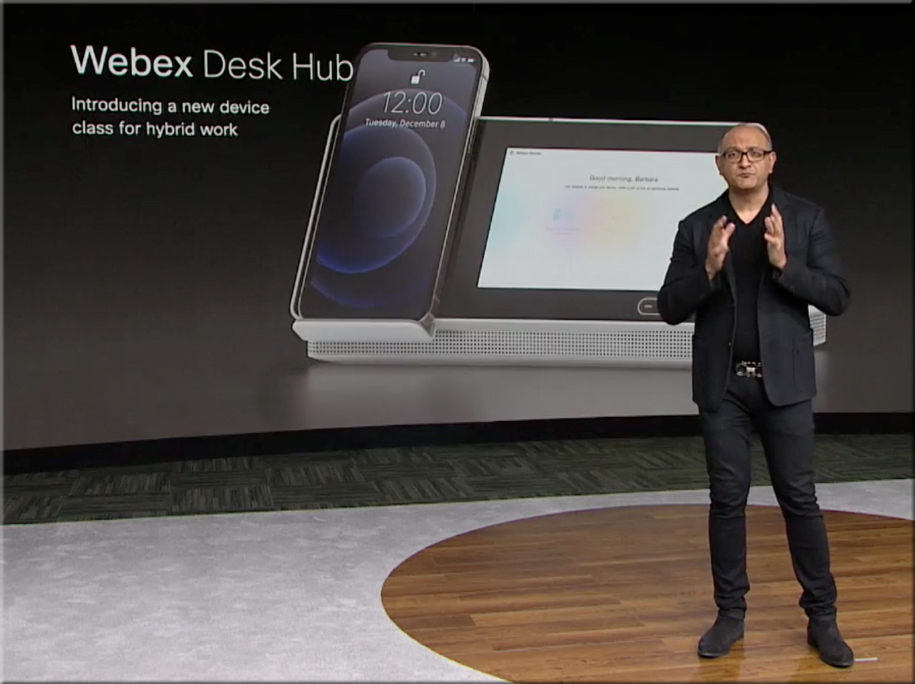

In yesterday’s WebexOne presentations, Cisco mentioned a new device category, calling it the Webex Desk Hub. It gets at the idea of walking into a facility, grabbing any desk, and making that desk your own, at least for that day and time. Cisco is banking on the idea that sometimes people will be working remotely, and sometimes they will be “going into the office.” But facilities will likely be fewer and smaller, so one might not have one’s own office.

In that case, you can plug in your smart device, and things are set up the way they would be if you did have that space as a permanent office.

Applying this concept to the smart classrooms of the future, what might it look like? A faculty member or teacher could walk into any room that supports such a setup, plug in their personal smart device, and have the room conditions instantly implemented:

From DSC:

Who needs to be discussing/debating “The Social Dilemma” movie? Whether one agrees with the perspectives put forth therein or not, the discussion boards out there should be lighting up in the undergraduate areas of Computer Science (especially Programming), Engineering, Business, Economics, Mathematics, Statistics, Philosophy, Religion, Political Science, Sociology, and perhaps other disciplines as well.

To those starting out in the relevant careers here: just because we can doesn’t mean we should. Ask yourself not whether something CAN be developed, but *whether it SHOULD be developed*, and what the potential implications of a technology/invention/etc. might be. I’m not aiming to take a position here. Rather, I’m trying to promote some serious reflection among those developing our new, emerging technologies and our new products/services.