Microsoft President Warns of Orwell’s 1984 ‘Coming to Pass’ in 2024 — from interestingengineering.com by Chris Young

Microsoft’s Brad Smith warned we may be caught up in a losing race with artificial intelligence.

Excerpt (emphasis DSC):

The surveillance-state dystopia portrayed in George Orwell’s 1984 could “come to pass in 2024” if governments don’t do enough to protect the public against artificial intelligence (AI), Microsoft president Brad Smith warned in an interview for the BBC’s investigative documentary series Panorama.

During the interview, Smith warned of China’s increasing AI prowess and the fact that we may be caught up in a losing race with the technology itself.

“If we don’t enact the laws that will protect the public in the future, we are going to find the technology racing ahead, and it’s going to be very difficult to catch up,” Smith stated.

From DSC:

This is a major heads-up to all those in the legal/legislative realm, especially the American Bar Association (ABA) and the bar associations across the country! The ABA needs to realize that it has to up its game and keep up with the incredibly fast pace of the twenty-first century. If that doesn’t occur, we and future generations will pay the price. Two thoughts come to mind regarding the ABA and the law schools out there:

Step 1: Allow 100% online-based JD programs all the time, from here on out.

Step 2: Encourage massive new program development within all law schools to help future lawyers, judges, legislative reps, & others build more expertise in emerging technologies & their ramifications.

Google’s plan to make search more sentient — from vox.com by Rebecca Heilweil

Google announces new search features every year, but this time feels different.

Excerpt:

At the keynote speech of its I/O developer conference on Tuesday, Google revealed a suite of ways the company is moving forward with artificial intelligence. These advancements show Google increasingly trying to build AI-powered tools that seem more sentient and that are better at perceiving how humans actually communicate and think. They seem powerful, too.

Two of the biggest AI announcements from Google involve natural language processing and search.

…

Google also revealed a number of AI-powered improvements to its Maps platform that are designed to yield more helpful results and directions.

Google’s plans to bring AI to education make its dominance in classrooms more alarming — from fastcompany.com by Ben Williamson

The tech giant has expressed an ambition to transform education with artificial intelligence, raising fresh ethical questions.

Struggling to Get a Job? Artificial Intelligence Could Be the Reason Why — from newsweek.com by Lydia Veljanovski; with thanks to Sam DeBrule for the resource

Excerpt:

Except that isn’t always the case. In many instances, instead of your application being tossed aside by a HR professional, it is actually artificial intelligence that is the barrier to entry. While this isn’t a problem in itself—AI can reduce workflow by rapidly filtering applicants—the issue is that within these systems lies the possibility of bias.

It is illegal in the U.S. for employers to discriminate against a job applicant because of their race, color, sex, religion, disability, national origin, age (40 or older) or genetic information. However, these AI hiring tools are often inadvertently doing just that, and there are no federal laws in the U.S. to stop this from happening.

These Indian edtech companies are shaping the future of AI & robotics — from analyticsinsight.net by Apoorva Komarraju, May 25, 2021

Excerpt:

As edtech companies have taken a lead by digitizing education for the modern era, they have taken the stance to set up Atal Tinkering Labs in schools along with other services necessary for the budding ‘kidpreneurs’. With the availability of these services, students can experience 21st-century technologies like IoT, 3D printing, AI, and Robotics.

Researchers develop machine-learning model that accurately predicts diabetes, study says — from ctvnews.ca by Christy Somos

Excerpt:

TORONTO — Canadian researchers have developed a machine-learning model that accurately predicts diabetes in a population using routinely collected health data, a new study says.

The study, published in the JAMA Network Open journal, tested new machine-learning technology on routinely collected health data that examined the entire population of Ontario. The study was run by the ICES not-for-profit data research institute.

Using linked administrative health data from Ontario from 2006 to 2016, researchers created a validated algorithm by training the model on information taken from nearly 1.7 million patients.

Project Guideline: Enabling Those with Low Vision to Run Independently — from ai.googleblog.com by Xuan Yang; with thanks to Sam DeBrule for the resource

Excerpt:

For the 285 million people around the world living with blindness or low vision, exercising independently can be challenging. Earlier this year, we announced Project Guideline, an early-stage research project, developed in partnership with Guiding Eyes for the Blind, that uses machine learning to guide runners through a variety of environments that have been marked with a painted line. Using only a phone running Guideline technology and a pair of headphones, Guiding Eyes for the Blind CEO Thomas Panek was able to run independently for the first time in decades and complete an unassisted 5K in New York City’s Central Park.

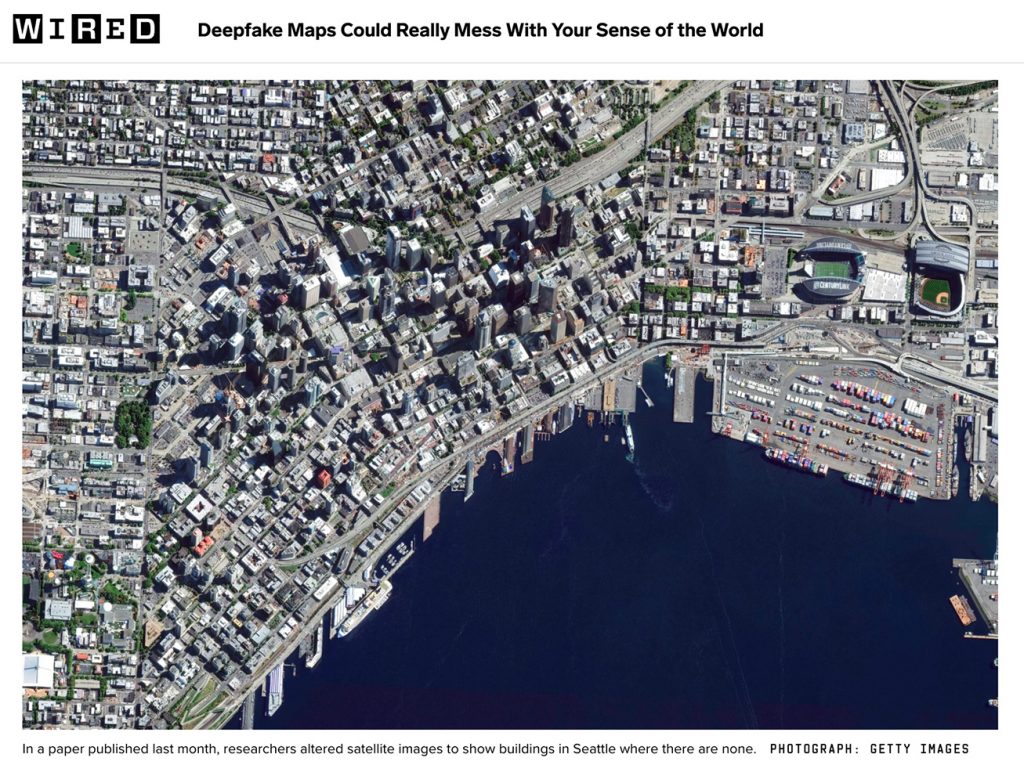

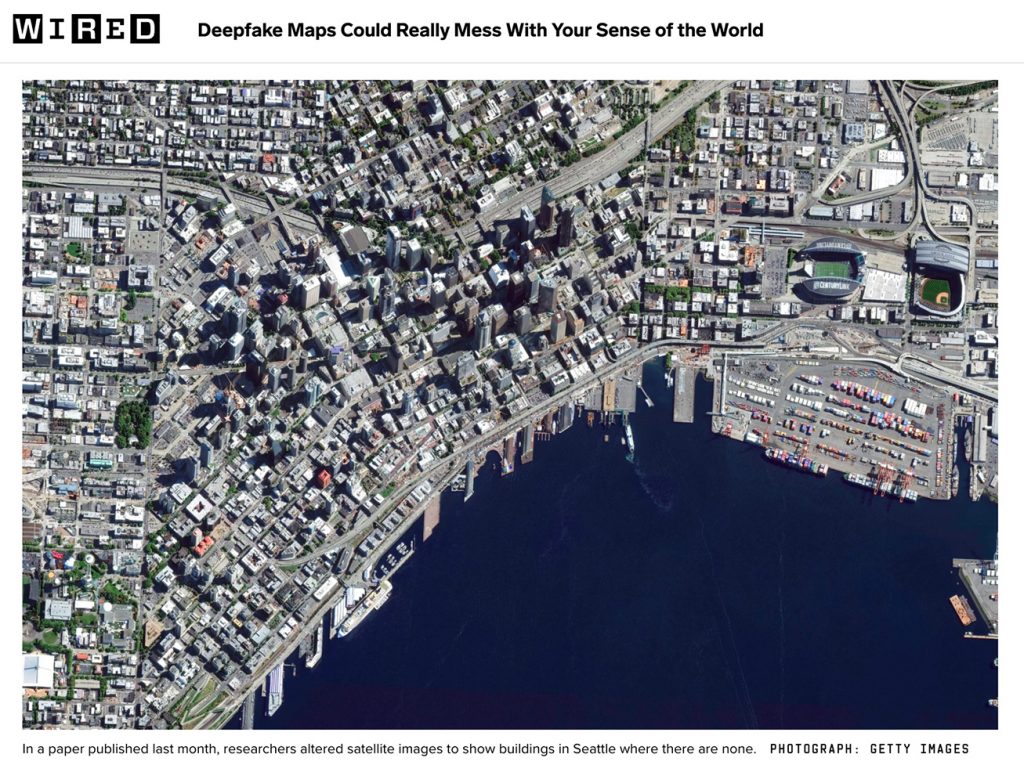

Deepfake Maps Could Really Mess With Your Sense of the World — from wired.com by Will Knight

Researchers applied AI techniques to make portions of Seattle look more like Beijing. Such imagery could mislead governments or spread misinformation online.