The tech that will change your life in 2016 — from wsj.com by Geoffrey A. Fowler and Joanna Stern

Gadgets, breakthroughs and ideas we think will define the state of the art in the year ahead

Excerpts:

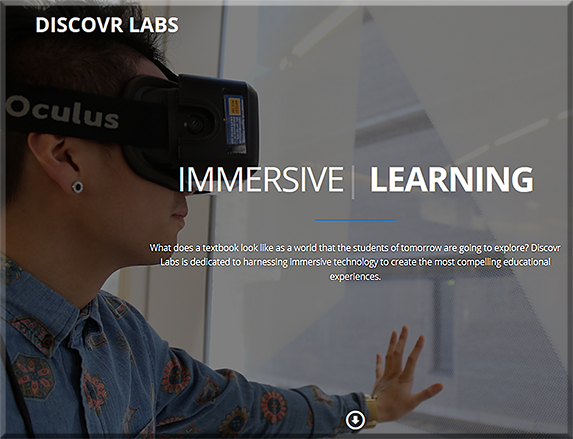

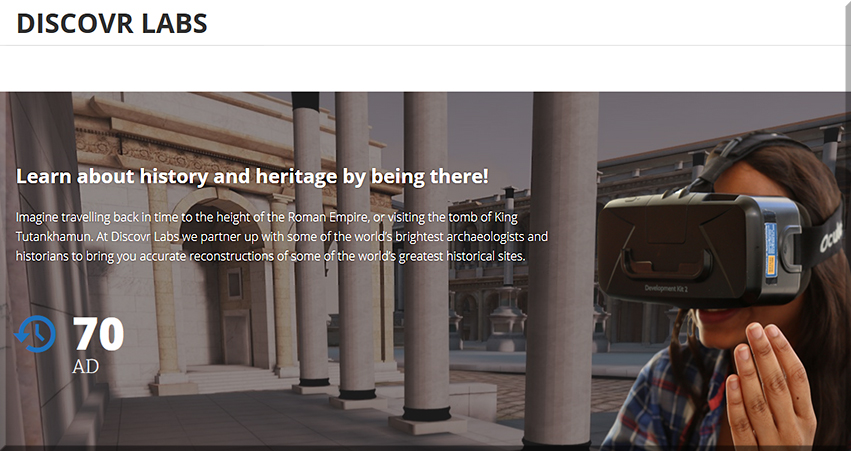

- Virtual Reality Gets Real

- Wiser Messaging Apps

- Safer, Smarter Drones

- Happy New USB Port! (USB Type-C port)

- Voice-Operated Everything

- Chinese Phones Hit the U.S.

- Cameras That See More

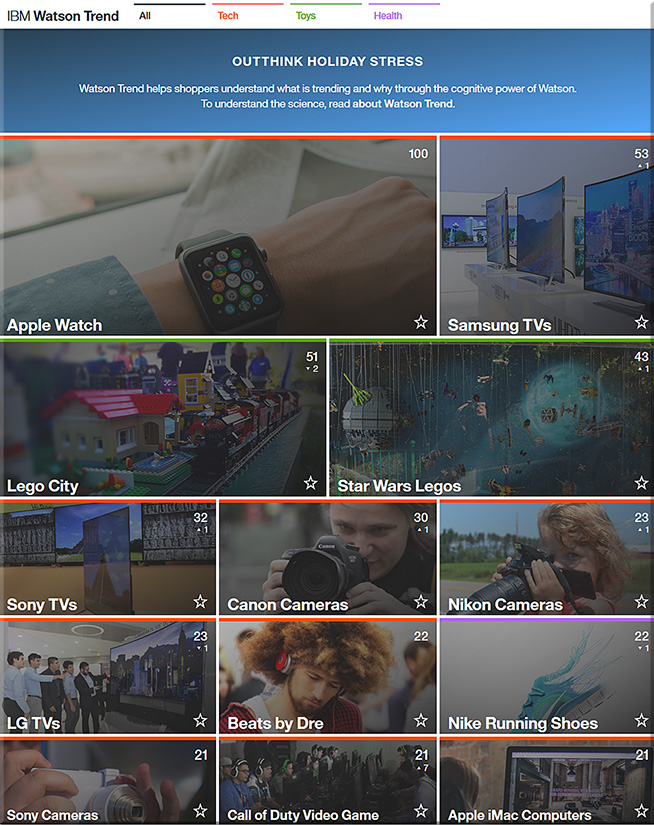

- Streaming Channels Galore

- Wireless Charging Everywhere

- Independent Wearables

- Cutting the Headphone Cord

- A Useful Internet of Things

Technology trends 2016 — from thefuturesagency.com by Rudy de Waele

Excerpt:

It’s that time of the year again, when everyone in the business releases their yearly predictions. To save you some time, we collected the most important and relevant trends, from the sources that matter, in one post.

The trends have been collected by The Futures Agency partner, speaker and content curator, Rudy de Waele, and were originally published on the shift 2020 Brain Food blog and newsletter.

CES 2016: Smart homes, smart cars, virtual reality — from cnbc.com by Harriet Taylor

Excerpt:

Nevertheless, there are still some distinct themes this year: Products that highlight the so-called Internet of Things (IoT), the connected home, autos and virtual reality will all have a big presence.

From smart to intelligent: 2016 AV trends — from avnetwork.com by Jonathan Owens

Excerpts:

- Sensors

- Bring Your Own Identity

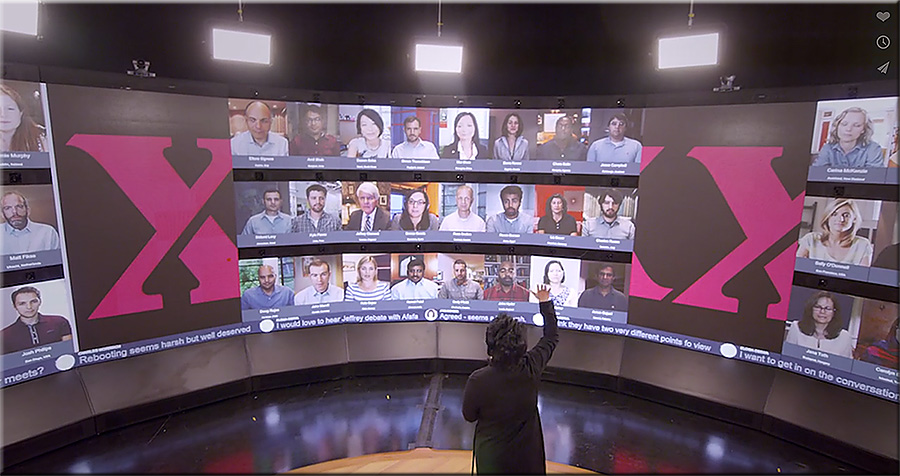

- UCC Bridging and True Collaboration

Cloud, virtual reality among top tech trends for 2016 — from news.investors.com by Patrick Seitz

Excerpts:

- Cloud computing

- Voice user interfaces

- Virtual reality

- Autonomous Vehicles

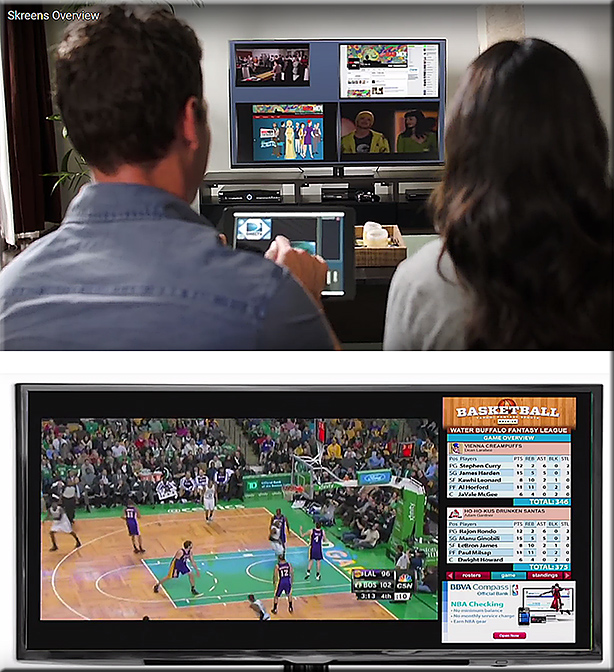

- Over-The-Top TV

Virtual reality, robot companions and wraparound smartphones: Top 5 tech trends due in 2016 — from scmp.com by Jack Liu

The headset cometh: A virtual reality content primer — from gigaom.com

Excerpt:

When we talk about VR, we tend to talk in broad strokes. “Experiences,” we call them, as if that term somehow covers and conveys the depth and disparity that exists between gaming, watching, and interacting with VR content. The reality of virtual reality, however, is not so easily categorized or described.

VR content is the big blanket term that clumsily and imprecisely covers large and vastly divergent portions of the content market as it stands. VR games, immersive video, and virtual cinema all fall under “VR content”, but they’re fundamentally different experiences, possibly appealing to very different portions of a potential mainstream VR market.

6 ways work will change in 2016 — from fastcompany.com by Jared Lindzon

Workplace trends for 2016 will be set in large part by what’s happening in the freelance world right now.

Excerpt:

Most major workplace trends don’t evolve overnight, and if you know where to look, you can already witness their approach.

Many of the trends that will come into focus in 2016 already exist today, but they are expected to grow in significance and become mainstream in the year to come.

While such trends used to be set by the world’s largest companies, today many are championed by the smallest. Freelancers and independent employees need to stay ahead of future needs to ensure they are up to date with the most in-demand skills. Therefore, activity in the freelance market often serves as an early indication of the growing needs of traditional businesses.

At the same time, large organizations today are under greater threat of disruption, requiring early adoption and a heightened awareness of the surrounding business environment.

Here are some of the workplace trends that are expected to have far-reaching effects in 2016, from the boardrooms of Fortune 500 companies to the home offices, cafes, and coworking spaces of the freelance economy.

7 top tech trends impacting innovators in 2016 — from innovationexcellence.com by Chuck Brooks

Excerpts:

- The Internet of Things

- Data Science & Digital Transformation

- Artificial Intelligence (AI) and Augmented Reality technologies

- Quantum and Super Computing

- Smart Cities

- 3-D Printing

- Cybersecurity

Related:

- 30 Hot Products At CES 2016

- 10 ways the Internet of Things could change the world in 2016 — from techradar.com by Jamie Carter

- 50 Predictions for the Internet of Things in 2016 — from iotcentral.io by David Oro

- 10 Web design trends you can expect to see in 2016 — from thenextweb.com by Amber Leigh Turner

- HR Technology for 2016: 10 Big Disruptions on the Horizon — from bersin.com

- Tech trends that will make waves in 2016 — from cbsnews.com by Brian Mastroianni

- Big Data Analytics Predictions for 2016 — from data-informed.com by Scott Etkin

Addendum on 1/13/16:

- The 10 Biggest Tech Trends for 2016 — from entrepreneur.com
