Computers that never forget a face — from Future Today Institute

Excerpts:

In August, the U.S. Customs and Border Protection will roll out new technology that will scan the faces of drivers as they enter and leave the United States. For years, accomplishing that kind of surveillance through a car windshield has been difficult. But technology is quickly advancing. This system, activated by ambient light sensors, range finders and remote speedometers, uses smart cameras and AI-powered facial recognition technology to compare images in government files with people behind the wheel.

Biometric borders are just the beginning. Faceprints are quickly becoming our new fingerprints, and this technology is marching forward with haste. Faceprints are now so advanced that machine learning algorithms can recognize your unique musculatures and bone structures, capillary systems, and expressions using thousands of data points. All the features that make up a unique face are being scanned, captured and analyzed to accurately verify identities. New hairstyle? Plastic surgery? They don’t interfere with the technology’s accuracy.

Why you should care. Faceprints are already being used across China for secure payments. Soon, they will be used to customize and personalize your digital experiences. Our Future Today Institute modeling shows myriad near-future applications, including the ability to unlock your smart TV with your face. Retailers will use your face to personalize your in-store shopping experience. Auto manufacturers will start using faceprints to detect if drivers are under the influence of drugs or alcohol and prevent them from driving. It’s plausible that cars will soon detect if a driver is distracted and take the wheel using an auto-pilot feature. On a diet but live with others? Stash junk food in a drawer and program the lock to restrict your access. Faceprints will soon create opportunities for a wide range of sectors, including military, law enforcement, retail, manufacturing and security. But as with all technology, faceprints could lead to the loss of privacy and widespread surveillance.

It’s possible for both risk and opportunity to coexist. The point here is not alarmist hand-wringing, or pointless calls for cease-and-desist demands on the development and use of faceprint technology. Instead, it’s to acknowledge an important emerging trend, faceprints, and to think about the associated risks and opportunities for you and your organization well in advance. Approach biometric borders and faceprints with your (biometrically unique) eyes wide open.

Near-Futures Scenarios (2018 – 2028):

Optimistic: Faceprints make us safer, and they bring us back to physical offices and stores.

Pragmatic: As faceprint adoption grows, legal challenges mount.

In April, a U.S. federal judge ruled that Facebook must confront a class-action lawsuit that alleges its faceprint technology violates Illinois state privacy laws. Last year, a U.S. federal judge allowed a class-action suit to go forth against Shutterfly, claiming the company violated the Illinois Biometric Information Privacy Act, which ensures companies receive written releases before collecting biometric data, including faces. Companies and device manufacturers, who are early developers but late to analyzing legal outcomes, are challenged to balance consumer privacy with new security benefits.

Catastrophic: Faceprints are used for widespread surveillance and authoritarian control.

How AI is helping sports teams scout star players — from nbcnews.com by Edd Gent

Professional baseball, basketball and hockey are among the sports now using AI to supplement traditional coaching and scouting.

Preparing students for workplace of the future — from educationdive.com by Shalina Chatlani

Excerpt:

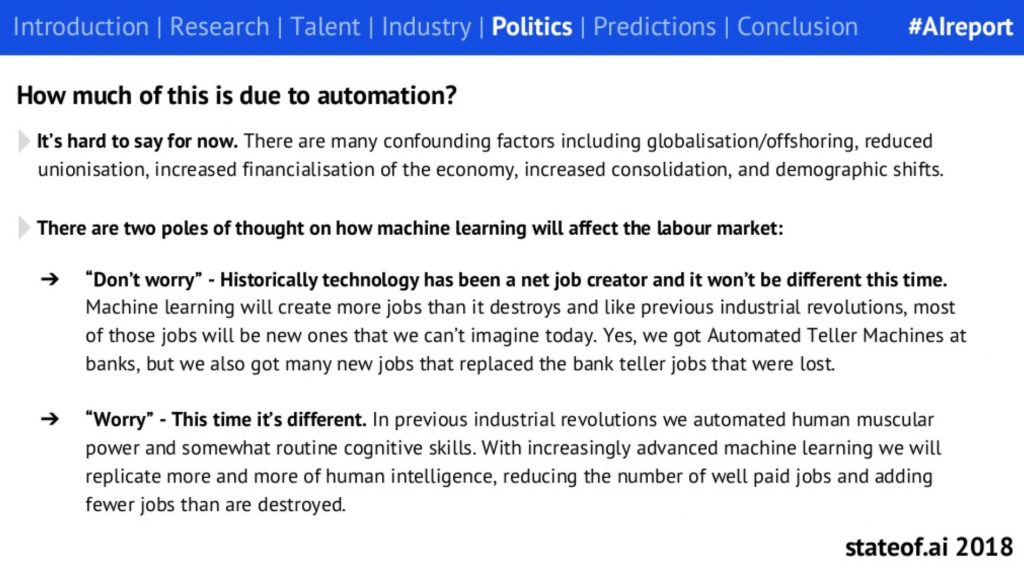

The workplace of the future will be marked by unprecedentedly advanced technologies, as well as a focus on incorporating artificial intelligence to drive higher levels of production with fewer resources. Employers and education stakeholders, noting the reality of this trend, are turning a reflective eye toward current students and questioning whether they will be workforce ready in the years to come.

This has become a significant concern for higher education executives, who find their business models could be disrupted as they fail to meet workforce demands. A 2018 Gallup-Northeastern University survey shows that of 3,297 U.S. citizens interviewed, only 22% with a bachelor’s degree said their education left them “well” or “very well prepared” to use AI in their jobs.

…

In his book “Robot-Proof: Higher Education in the Age of Artificial Intelligence,” Northeastern University President Joseph Aoun argued that for higher education to adapt to advanced technologies, it has to focus on life-long learning, which he said prepares students for the future by fostering purposeful integration of technical literacies, such as coding and data literacy, with human literacies, such as creativity, ethics, cultural agility and entrepreneurship.

“When students combine these literacies with experiential components, they integrate their knowledge with real life settings, leading to deep learning,” Aoun told Forbes.

Amazon’s A.I. camera could help people with memory loss recognize old friends and family — from cnbc.com by Christina Farr

- Amazon’s DeepLens is a smart camera that can recognize objects in front of it.

- One software engineer, Sachin Solkhan, is trying to figure out how to use it to help people with memory loss.

- Users would carry the camera to help them recognize people they know.

Microsoft acquired an AI startup that helps it take on Google Duplex — from qz.com by Dave Gershgorn

Excerpt:

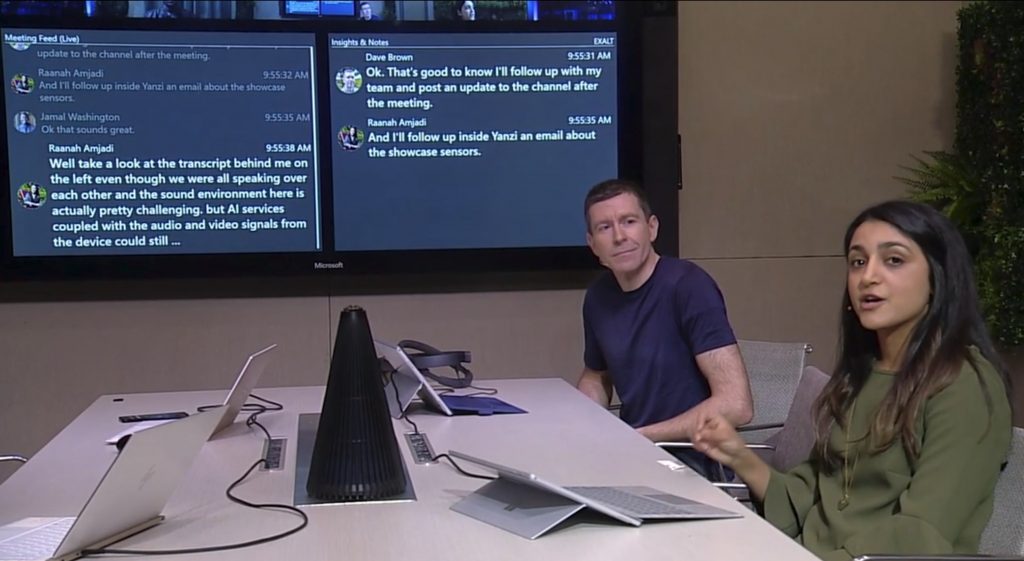

We’re going to talk to our technology, and everyone else’s too. Google proved that earlier this month with a demonstration of artificial intelligence that can hop on the phone to book a restaurant reservation or appointment at the hair salon.

Now it’s just a matter of who can build that technology fastest. To reach that goal, Microsoft has acquired conversational AI startup Semantic Machines for an undisclosed amount. Founded in 2014, the startup’s goal was to build AI that can converse with humans through speech or text, with the ability to be trained to converse on any language or subject.

Researchers developed an AI to detect DeepFakes — from thenextweb.com by Tristan Greene

Excerpt:

A team of researchers from the State University of New York (SUNY) recently developed a method for detecting whether the people in a video are AI-generated. It looks like DeepFakes could meet its match.

What it means: Fear over whether computers will soon be able to generate videos that are indistinguishable from real footage may be much ado about nothing, at least with the currently available methods.

The SUNY team observed that the training method for creating AI that makes fake videos involves feeding it images – not video. This means that certain human physiological quirks – like breathing and blinking – don’t show up in computer-generated videos. So they decided to build an AI that uses computer vision to detect blinking in fake videos.
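To make the blinking idea concrete, here is a minimal sketch in Python. It is not the SUNY team's model, which the excerpt describes only as an AI that uses computer vision. It assumes some upstream detector (hypothetical, not shown) has already produced one "eye openness" score per video frame, and the thresholds `closed_below`, `min_closed_frames`, and `min_blinks_per_minute` are illustrative guesses rather than published values.

```python
from typing import List


def count_blinks(eye_openness: List[float],
                 closed_below: float = 0.2,
                 min_closed_frames: int = 2) -> int:
    """Count blinks as runs of consecutive frames where the eye looks closed.

    eye_openness holds one score per frame (higher = more open). The scores
    are assumed to come from a separate, hypothetical eye detector.
    """
    blinks = 0
    run = 0  # length of the current run of "closed" frames
    for value in eye_openness:
        if value < closed_below:
            run += 1
        else:
            if run >= min_closed_frames:
                blinks += 1
            run = 0
    # A blink may still be in progress when the clip ends.
    if run >= min_closed_frames:
        blinks += 1
    return blinks


def looks_suspicious(eye_openness: List[float],
                     fps: float = 30.0,
                     min_blinks_per_minute: float = 5.0) -> bool:
    """Flag a clip whose blink rate falls below an assumed minimum."""
    minutes = len(eye_openness) / fps / 60.0
    if minutes == 0:
        return False  # nothing to judge
    return count_blinks(eye_openness) / minutes < min_blinks_per_minute


# Ten seconds of footage at 30 fps: one clip with two blinks, one with none.
natural_clip = [0.3] * 100 + [0.1] * 3 + [0.3] * 100 + [0.05] * 4 + [0.3] * 93
unblinking_clip = [0.3] * 300
print(looks_suspicious(natural_clip), looks_suspicious(unblinking_clip))
```

A real detector would need to handle faces turned away from the camera, short clips, and people who simply blink rarely, so a rule like this is at best one signal among several.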

Bringing It Down To Earth: Four Ways Pragmatic AI Is Being Used Today — from forbes.com by Carlos Melendez

Excerpt:

Without even knowing it, we are interacting with pragmatic AI day in and day out. It is used in the automated chatbots that answer our calls and questions and the customer service rep that texts with us on a retail site, providing a better and faster customer experience.

Below are four key categories of pragmatic AI and ways they are being applied today.

1. Speech Recognition And Natural Language Processing (NLP)

2. Predictive Analytics

3. Image Recognition And Computer Vision

4. Self-Driving Cars And Robots

Billable Hour ‘Makes No Sense’ in an AI World — from biglawbusiness.com by Helen Gunnarsson

Excerpt:

Artificial intelligence (AI) is transforming the practice of law, and “data is the new oil” of the legal industry, panelist Dennis Garcia said at a recent American Bar Association conference. Garcia is an assistant general counsel for Microsoft in Chicago. Robert Ambrogi, a Massachusetts lawyer and blogger who focuses on media, technology, and employment law, moderated the program. “The next generation of lawyers is going to have to understand how AI works” as part of the duty of competence, panelist Anthony E. Davis told the audience. Davis is a partner with Hinshaw & Culbertson LLP in New York.

…

Davis said AI will result in dramatic changes in law firms’ hiring and billing, among other things. The hourly billing model, he said, “makes no sense in a universe where what clients want is judgment.” Law firms should begin to concern themselves not with the degrees or law schools attended by candidates for employment but with whether they are “capable of developing judgment, have good emotional intelligence, and have a technology background so they can be useful” for long enough to make hiring them worthwhile, he said.

Deep Learning Tool Tops Dermatologists in Melanoma Detection — from healthitanalytics.com

A deep learning tool achieved greater accuracy than dermatologists when detecting melanoma in dermoscopic images.

Apple’s plans to bring AI to your phone — from wired.com by Tom Simonite

Excerpt:

HomeCourt is built on tools announced by Federighi last summer, when he launched Apple’s bid to become a preferred playground for AI-curious developers. Known as Core ML, those tools help developers who’ve trained machine learning algorithms deploy them on Apple’s mobile devices and PCs.

At Apple’s Worldwide Developer Conference on Monday, Federighi revealed the next phase of his plan to enliven the app store with AI. It’s a tool called Create ML that’s something like a set of training wheels for building machine learning models in the first place. In a demo, training an image-recognition algorithm to distinguish different flavors of ice cream was as easy as dragging and dropping a folder containing a few dozen images and waiting a few seconds. In a session for developers, Apple engineers suggested Create ML could teach software to detect whether online comments are happy or angry, or predict the quality of wine from characteristics such as acidity and sugar content. Developers can use Create ML now but can’t ship apps using the technology until Apple’s latest operating systems arrive later this year.
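For a feel of what "detecting whether online comments are happy or angry" involves, here is a small sketch in Python. It does not use Create ML or any Apple API. It is a hand-rolled naive Bayes word-count classifier, and the four training comments and the `train`/`predict` helper names are made up for illustration.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Model = Tuple[Dict[str, Counter], Counter]


def train(examples: List[Tuple[str, str]]) -> Model:
    """Count how often each word appears under each label."""
    word_counts: Dict[str, Counter] = {}
    label_counts: Counter = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    return word_counts, label_counts


def predict(model: Model, text: str) -> str:
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, label_counts = model
    vocabulary = {word for counts in word_counts.values() for word in counts}
    total_examples = sum(label_counts.values())
    best_label, best_score = "", -math.inf
    for label, counts in word_counts.items():
        score = math.log(label_counts[label] / total_examples)
        # Add-one smoothing so unseen words do not zero out the probability.
        denominator = sum(counts.values()) + len(vocabulary)
        for word in text.lower().split():
            score += math.log((counts[word] + 1) / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical training comments, invented for this sketch.
model = train([
    ("love this great app", "happy"),
    ("what a wonderful update thanks", "happy"),
    ("this is terrible and broken", "angry"),
    ("awful update i hate it", "angry"),
])
print(predict(model, "love this wonderful app"))
print(predict(model, "terrible broken update"))
```

Tools like the one described in the excerpt hide these steps behind a drag-and-drop workflow, and a usable classifier would need far more than four examples.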

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)