Introducing Gen-3 Alpha: Runway’s new base model for video generation.

Gen-3 Alpha can create highly detailed videos with complex scene changes, a wide range of cinematic choices, and detailed art directions. https://t.co/YQNE3eqoWf

(1/10) pic.twitter.com/VjEG2ocLZ8

— Runway (@runwayml) June 17, 2024

A new chapter of creativity begins.

Introducing GEN-3 Alpha – The first of a series of new models built by creatives for creatives. Video generated with @runwayml’s new Text-2-Video model.

Coming soon. pic.twitter.com/oNONabxdNl

— Nicolas Neubert (@iamneubert) June 17, 2024

Google just announced their work on Video-to-audio, absolutely wild.

Here are 11 crazy examples

1. drums pic.twitter.com/sTNfymJIeN

— Linus Ekenstam (@LinusEkenstam) June 17, 2024

Kuaishou Unveils Kling: A Text-to-Video Model To Challenge OpenAI’s Sora — from maginative.com by Chris McKay

Generating audio for video — from deepmind.google

LinkedIn leans on AI to do the work of job hunting — from techcrunch.com by Ingrid Lunden

Learning personalisation. LinkedIn continues to be bullish on its video-based learning platform, and it appears to have found a strong current among users who need to skill up in AI. Cohen said that traffic for AI-related courses — which include modules on technical skills as well as non-technical ones such as basic introductions to generative AI — has increased by 160% over last year.

You can be sure that LinkedIn is pushing its search algorithms to tap into the interest, but it’s also boosting its content with AI in another way.

For Premium subscribers, it is piloting what it describes as “expert advice, powered by AI.” Tapping into expertise from well-known instructors such as Alicia Reece, Anil Gupta, Dr. Gemma Leigh Roberts and Lisa Gates, LinkedIn says its AI-powered coaches will deliver responses personalized to users, as a “starting point.”

These will, in turn, also appear as personalized coaches that a user can tap while watching a LinkedIn Learning course.

Also related to this, see:

Unlocking New Possibilities for the Future of Work with AI — from news.linkedin.com

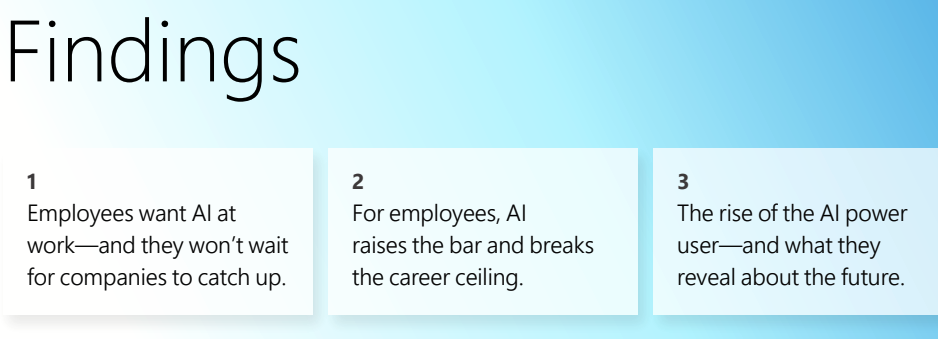

Personalized learning for everyone: Whether you’re looking to change jobs or not, the skills required in the workplace are expected to change by 68% by 2030.

Expert advice, powered by AI: We’re beginning to pilot the ability to get personalized practical advice instantly from industry-leading business leaders and coaches on LinkedIn Learning, all powered by AI. The responses you’ll receive are trained by experts and represent a blend of insights that are personalized to each learner’s unique needs. While human professional coaches remain invaluable, these tools provide a great starting point.

Personalized coaching, powered by AI, when watching a LinkedIn course: As learners (including all Premium subscribers) watch our new courses, they can now simply ask for summaries of content, clarify certain topics, or get examples and other real-time insights, e.g. “Can you simplify this concept?” or “How does this apply to me?”

Roblox’s Road to 4D Generative AI — from corp.roblox.com by Morgan McGuire, Chief Scientist

- Roblox is building toward 4D generative AI, going beyond single 3D objects to dynamic interactions.

- Solving the challenge of 4D will require multimodal understanding across appearance, shape, physics, and scripts.

- Early tools that are foundational for our 4D system are already accelerating creation on the platform.