Trend No. 3: The business model faces a full-scale transformation — from www2.deloitte.com by Cole Clark, Megan Cluver, and Jeffrey J. Selingo

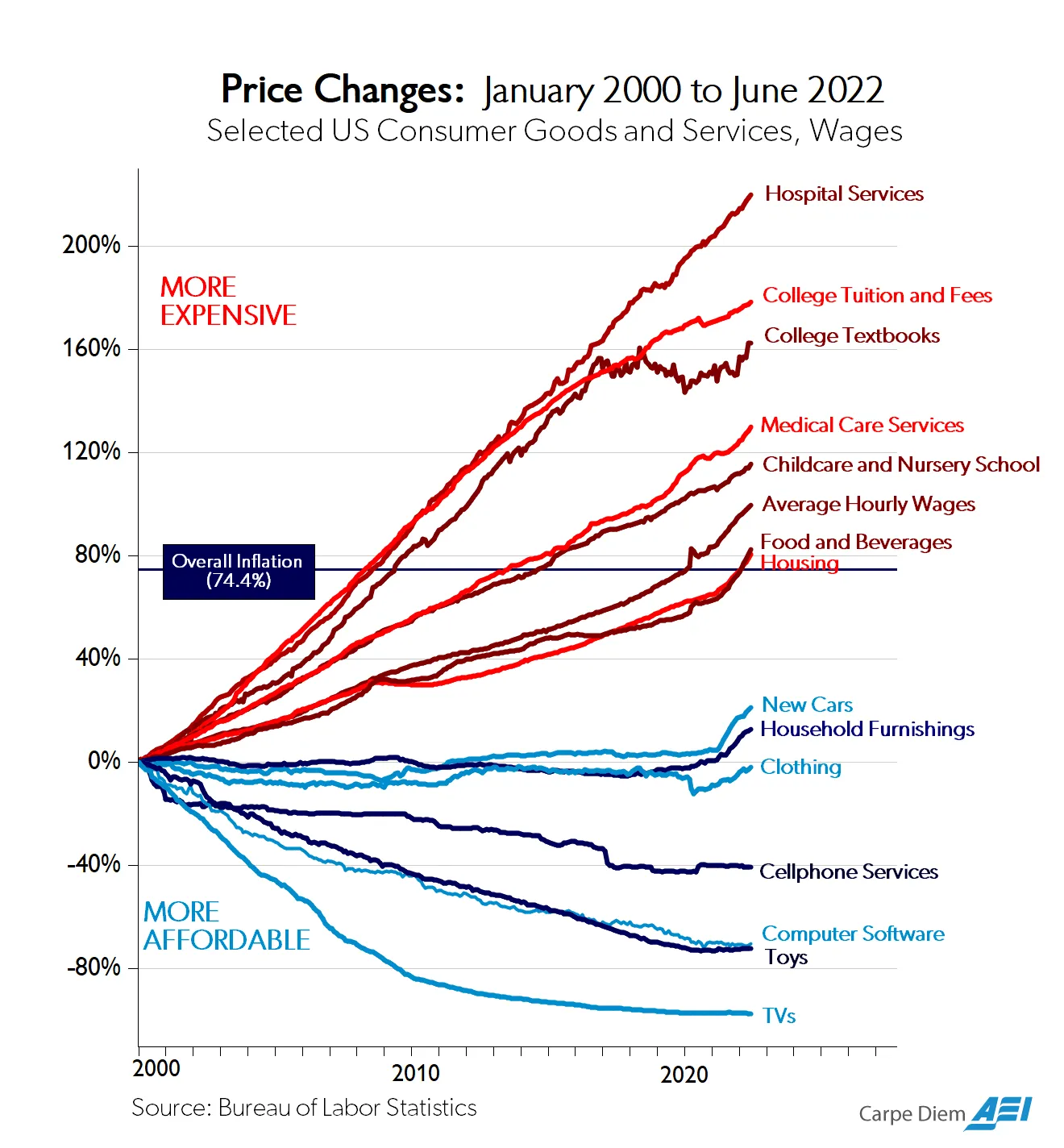

The traditional business model of higher education is broken as institutions can no longer rely on rising tuition among traditional students as the primary driver of revenue.

Excerpt:

Yet the opportunities for colleges and universities that shift their business model to a more student-centric one, serving the needs of a wider diversity of learners at different stages of their lives and careers, are immense. Politicians and policymakers are looking for solutions to the demographic cliff facing the workforce and the need to upskill and reskill generations of workers in an economy where the half-life of skills is shrinking. This intersection of needs—higher education needs students; the economy needs skilled workers—means that colleges and universities, if they execute on the right set of strategies, could play a critical role in developing the workforce of the future. For many colleges, this shift will require a significant rethinking of mission and structure as many institutions weren’t designed for workforce development and many faculty don’t believe it’s their job to get students a job. But if a set of institutions prove successful on this front, they could in the process improve the public perception of higher education, potentially leading to more political and financial support for growing this evolving business model in the future.

Also see:

Trend No. 2: The value of the degree undergoes further questioning — from www2.deloitte.com by Cole Clark, Megan Cluver, and Jeffrey J. Selingo

The perceived value of higher education has fallen as the skills needed to keep up in a job constantly change and learners have better consumer information on outcomes.

Excerpt:

Higher education has yet to come to grips with the trade-offs that students and their families are increasingly weighing with regard to obtaining a four-year degree.

…

But the problem facing the vast majority of colleges and universities is that they are no longer perceived to be the best source for the skills employers are seeking. This is especially the case as traditional degrees are increasingly competing with a rising tide of microcredentials, industry-based certificates, and well-paying jobs that don’t require a four-year degree.

Trend No. 1: College enrollment reaches its peak — from www2.deloitte.com by Cole Clark, Megan Cluver, and Jeffrey J. Selingo

Enrollment rates in higher education have been declining in the United States over the years as other countries catch up.

Excerpt:

Higher education in the United States has only known growth for generations. But enrollment of traditional students has been falling for more than a decade, especially among men, putting pressure both on the enrollment pipeline and on the work ecosystem it feeds. Now the sector faces increased headwinds as other countries catch up with the aggregate number of college-educated adults, with China and India expected to surpass the United States as the front runners in educated populations within the next decade or so.

Plus the other trends listed here >>

Also related to higher education, see the following items:

Number of Colleges in Distress Is Up 70% From 2012 — from bloomberg.com by Nic Querolo (behind paywall)

More schools see falling enrollment and tuition revenue | Small private, public colleges most at risk, report shows

About 75% of students want to attend college — but far fewer expect to actually go — from highereddive.com by Jeremy Bauer-Wolf

There Is No Going Back: College Students Want a Live, Remote Option for In-Person Classes — from campustechnology.com by Eric Paljug

Excerpt:

Based on a survey of college students over the last three semesters, students understand that remotely attending a lecture via remote synchronous technology is less effective for them than attending in person, but they highly value the flexibility of this option of attending when they need it.

Future Prospects and Considerations for AR and VR in Higher Education Academic Technology — from er.educause.edu by Owen McGrath, Chris Hoffman and Shawna Dark

Imagining how the future might unfold, especially for emerging technologies like AR and VR, can help prepare for what does end up happening.

Black Community College Enrollment is Plummeting. How to Get Those Students Back — from the74million.org by Karen A. Stout & Francesca I. Carpenter

Stout & Carpenter: Schools need a new strategy to bolster access for learners of color who no longer see higher education as a viable pathway

As the Level Up coalition reports, “the vast majority — 80% — of Black Americans believe that college is unaffordable.” This is not surprising given that Black families have fewer assets to pay for college and, as a result, incur significantly more student loan debt than their white or Latino peers. This is true even at the community college level. Only one-third of Black students are able to earn an associate degree without incurring debt.

Repairing Gen Ed | Colleges struggle to help students answer the question, ‘Why am I taking this class?’ — from chronicle.com by Beth McMurtrie

Students Are Disoriented by Gen Ed. So Colleges Are Trying to Fix It.

Excerpts:

Less than 30 percent of college graduates are working in a career closely related to their major, and the average worker has 12 jobs in their lifetime. That means, he says, that undergraduates must learn to be nimble and must build transferable skills. Why can’t those skills and ways of thinking be built into general education?

…

“Anyone paying attention to the nonacademic job market,” he writes, “will know that skills, rather than specific majors, are the predominant currency.”

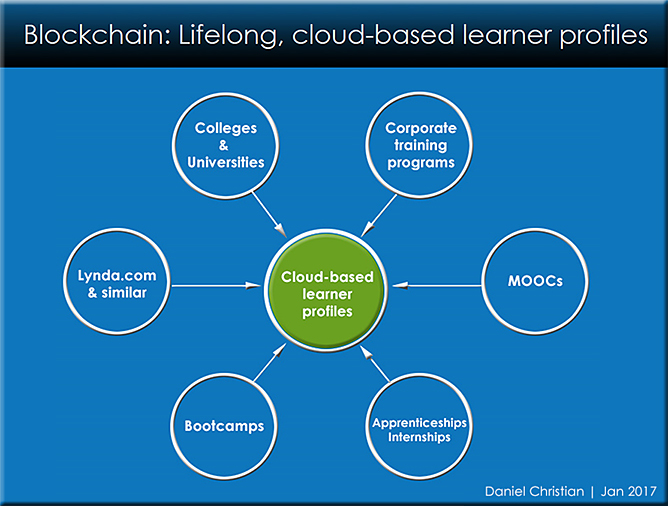

Micro-credentials Survey. 2023 Trends and Insights. — from holoniq.com

HolonIQ’s 2023 global survey on micro-credentials

3 Keys to Making Microcredentials Valid for Learners, Schools, and Employers — from campustechnology.com by Dave McCool

To give credentials value in the workplace, the learning behind them must be sticky, visible, and scalable.

Positive Partnership: Creating Equity in Gateway Course Success — from insidehighered.com by Ashley Mowreader

The Gardner Institute’s Courses and Curricula in Urban Ecosystems initiative works alongside institutions to improve success in general education courses.

American faith in higher education is declining: one poll — from bryanalexander.org by Bryan Alexander

Excerpt:

The main takeaway is that our view of higher education’s value is souring. Fewer of us see post-secondary learning as worth the cost, and now a majority think college and university degrees are no longer worth it: “56% of Americans think earning a four-year degree is a bad bet compared with 42% who retain faith in the credential.”

…

Again, this is all about one question in one poll with a small n. But it points to directions higher ed and its national setting are headed in, and we should think hard about how to respond.