From DSC:

We are hopefully creating the future that we want, i.e., the future of our dreams, not our nightmares. The 14 items below show that technology is often waaay out ahead of us…and it takes time for other areas of society to catch up (such as those that involve making policies and laws, and/or deciding whether we should even be doing these things in the first place).

Such reflections always make me ask:

- Who should be involved in some of these decisions?

- Who is currently being invited to the decision-making table for such discussions?

- How does the average citizen participate in such discussions?

Readers of this blog know that I’m generally pro-technology. But with the exponential pace of technological change, we need to slow things down enough to make wise decisions.

Google AI invents its own cryptographic algorithm; no one knows how it works — from arstechnica.co.uk by Sebastian Anthony

Neural networks seem good at devising crypto methods; less good at codebreaking.

Excerpt:

Google Brain has created two artificial intelligences that evolved their own cryptographic algorithm to protect their messages from a third AI, which was trying to evolve its own method to crack the AI-generated crypto. The study was a success: the first two AIs learnt how to communicate securely from scratch.

IoT growing faster than the ability to defend it — from scientificamerican.com by Larry Greenemeier

Last week’s use of connected gadgets to attack the Web is a wake-up call for the Internet of Things, which will get a whole lot bigger this holiday season

Excerpt:

With this year’s approaching holiday gift season the rapidly growing “Internet of Things” or IoT—which was exploited to help shut down parts of the Web this past Friday—is about to get a lot bigger, and fast. Christmas and Hanukkah wish lists are sure to be filled with smartwatches, fitness trackers, home-monitoring cameras and other wi-fi–connected gadgets that connect to the internet to upload photos, videos and workout details to the cloud. Unfortunately these devices are also vulnerable to viruses and other malicious software (malware) that can be used to turn them into virtual weapons without their owners’ consent or knowledge.

Last week’s distributed denial of service (DDoS) attacks—in which tens of millions of hacked devices were exploited to jam and take down internet computer servers—is an ominous sign for the Internet of Things. A DDoS is a cyber attack in which large numbers of devices are programmed to request access to the same Web site at the same time, creating data traffic bottlenecks that cut off access to the site. In this case the still-unknown attackers used malware known as “Mirai” to hack into devices whose passwords they could guess, because the owners either could not or did not change the devices’ default passwords.
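To make the "bottleneck" idea concrete, here is a toy model in Python. It is only a sketch under simplifying assumptions of my own, not a description of the actual attack: the server can answer a fixed number of requests per time step (`capacity`), it cannot tell bot traffic from legitimate traffic, and when it is overloaded it serves a random subset of what arrives. The function name and all of the numbers are hypothetical.

```python
def served_fraction(capacity: int, legit: int, bots: int) -> float:
    """Expected share of legitimate requests answered in one time step.

    Assumes the server picks uniformly at random among all incoming
    requests once the total exceeds its capacity (a simplification).
    """
    total = legit + bots
    if total <= capacity:
        # Everything fits, so every legitimate request is answered.
        return 1.0
    # Overloaded: each request has the same chance of being served.
    return capacity / total


if __name__ == "__main__":
    # Hypothetical site: 1,000 requests per step, 500 real visitors.
    for bots in (0, 9_500, 99_500):
        share = served_fraction(capacity=1_000, legit=500, bots=bots)
        print(f"{bots:>6} bot requests -> {share:.0%} of legitimate requests served")
```

Even in this crude model, the share of real visitors who get through falls in proportion to the flood of junk requests, which is why a large pool of hijacked gadgets matters so much.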

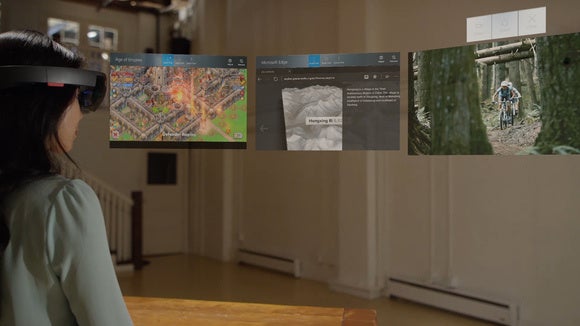

How to Get Lost in Augmented Reality — from inverse.com by Tanya Basu; with thanks to Woontack Woo for this resource

There are no laws against projecting misinformation. That’s good news for pranksters, criminals, and advertisers.

Excerpt:

Augmented reality offers designers and engineers new tools and artists a new palette, but there’s a dark side to reality-plus. Because A.R. technologies will eventually allow individuals to add flourishes to the environments of others, they will also facilitate the creation of a new type of misinformation and unwanted interactions. There will be advertising (there is always advertising) and there will also be lies perpetrated with optical trickery.

Two computer scientists-turned-ethicists are seriously considering the problematic ramifications of a technology that allows for real-world pop-ups: Keith Miller at the University of Missouri-St. Louis and Bo Brinkman at Miami University in Ohio. Both men are dismissive of Pokémon Go because smartphones are actually behind the times when it comes to A.R.

…

“A very important question is who controls these augmentations,” Miller says. “It’s a huge responsibility to take over someone’s world: you could manipulate people. You could nudge them.”

Can we build AI without losing control over it? — from ted.com by Sam Harris

Description:

Scared of superintelligent AI? You should be, says neuroscientist and philosopher Sam Harris — and not just in some theoretical way. We’re going to build superhuman machines, says Harris, but we haven’t yet grappled with the problems associated with creating something that may treat us the way we treat ants.

Do no harm, don’t discriminate: official guidance issued on robot ethics — from theguardian.com

Robot deception, addiction and possibility of AIs exceeding their remits noted as hazards that manufacturers should consider

Excerpt:

Isaac Asimov gave us the basic rules of good robot behaviour: don’t harm humans, obey orders and protect yourself. Now the British Standards Institute has issued a more official version aimed at helping designers create ethically sound robots.

The document, BS8611 Robots and robotic devices, is written in the dry language of a health and safety manual, but the undesirable scenarios it highlights could be taken directly from fiction. Robot deception, robot addiction and the possibility of self-learning systems exceeding their remits are all noted as hazards that manufacturers should consider.

World’s first baby born with new “3 parent” technique — from newscientist.com by Jessica Hamzelou

Excerpt:

It’s a boy! A five-month-old boy is the first baby to be born using a new technique that incorporates DNA from three people, New Scientist can reveal. “This is great news and a huge deal,” says Dusko Ilic at King’s College London, who wasn’t involved in the work. “It’s revolutionary.”

The controversial technique, which allows parents with rare genetic mutations to have healthy babies, has only been legally approved in the UK. But the birth of the child, whose Jordanian parents were treated by a US-based team in Mexico, should fast-forward progress around the world, say embryologists.

Scientists Grow Full-Sized, Beating Human Hearts From Stem Cells — from popsci.com by Alexandra Ossola

It’s the closest we’ve come to growing transplantable hearts in the lab

Excerpt:

Of the 4,000 Americans waiting for heart transplants, only 2,500 will receive new hearts in the next year. Even for those lucky enough to get a transplant, the biggest risk is that their bodies will reject the new heart and launch a massive immune reaction against the foreign cells. To combat the problems of organ shortage and decrease the chance that a patient’s body will reject it, researchers have been working to create synthetic organs from patients’ own cells. Now a team of scientists from Massachusetts General Hospital and Harvard Medical School has gotten one step closer, using adult skin cells to regenerate functional human heart tissue, according to a study published recently in the journal Circulation Research.

Achieving trust through data ethics — from sloanreview.mit.edu

Success in the digital age requires a new kind of diligence in how companies gather and use data.

Excerpt:

A few months ago, Danish researchers used data-scraping software to collect the personal information of nearly 70,000 users of a major online dating site as part of a study they were conducting. The researchers then published their results on an open scientific forum. Their report included the usernames, political leanings, drug usage, and other intimate details of each account.

A firestorm ensued. Although the data gathered and subsequently released was already publicly available, many questioned whether collecting, bundling, and broadcasting the data crossed serious ethical and legal boundaries.

In today’s digital age, data is the primary form of currency. Simply put: Data equals information equals insights equals power.

Technology is advancing at an unprecedented rate — along with data creation and collection. But where should the line be drawn? Where do basic principles come into play to consider the potential harm from data’s use?

“Data Science Ethics” course — from the University of Michigan on edX.org

Learn how to think through the ethics surrounding privacy, data sharing, and algorithmic decision-making.

About this course

As patients, we care about the privacy of our medical record; but as patients, we also wish to benefit from the analysis of data in medical records. As citizens, we want a fair trial before being punished for a crime; but as citizens, we want to stop terrorists before they attack us. As decision-makers, we value the advice we get from data-driven algorithms; but as decision-makers, we also worry about unintended bias. Many data scientists learn the tools of the trade and get down to work right away, without appreciating the possible consequences of their work.

This course, focused on ethics specifically related to data science, will provide you with the framework to analyze these concerns. This framework is based on ethics, which are shared values that help differentiate right from wrong. Ethics are not law, but they are usually the basis for laws.

Everyone, including data scientists, will benefit from this course. No previous knowledge is needed.

Science, Technology, and the Future of Warfare — from mwi.usma.edu by Margaret Kosal

Excerpt:

We know that emerging innovations within cutting-edge science and technology (S&T) areas carry the potential to revolutionize governmental structures, economies, and life as we know it. Yet, others have argued that such technologies could yield doomsday scenarios and that military applications of such technologies have even greater potential than nuclear weapons to radically change the balance of power. These S&T areas include robotics and autonomous unmanned systems; artificial intelligence; biotechnology, including synthetic and systems biology; the cognitive neurosciences; nanotechnology, including stealth meta-materials; additive manufacturing (aka 3D printing); and the intersection of each with information and computing technologies, i.e., cyber-everything. These concepts and the underlying strategic importance were articulated at the multi-national level in NATO’s May 2010 New Strategic Concept paper: “Less predictable is the possibility that research breakthroughs will transform the technological battlefield…. The most destructive periods of history tend to be those when the means of aggression have gained the upper hand in the art of waging war.”

Low-Cost Gene Editing Could Breed a New Form of Bioterrorism — from bigthink.com by Philip Perry

Excerpt:

2012 saw the advent of the gene-editing technique CRISPR-Cas9. Now, just a few short years later, gene editing is becoming accessible to more of the world than its scientific institutions. This new technique is now being used in public health projects to undermine the ability of certain mosquitoes to transmit diseases such as the Zika virus. But that initiative has had many in the field wondering whether it could be used for the opposite purpose, with malicious intent.

Back in February, U.S. National Intelligence Director James Clapper put out a Worldwide Threat Assessment, to alert the intelligence community of the potential risks posed by gene editing. The technology, which holds incredible promise for agriculture and medicine, was added to the list of weapons of mass destruction.

It is thought that amateur terrorists, non-state actors such as ISIS, or rogue states such as North Korea, could get their hands on it, and use this technology to create a bioweapon such as the earth has never seen, causing wanton destruction and chaos without any way to mitigate it.

What would happen if gene editing fell into the wrong hands?

Robot nurses will make shortages obsolete — from thedailybeast.com by Joelle Renstrom

By 2022, one million nurse jobs will be unfilled—leaving patients with lower quality care and longer waits. But what if robots could do the job?

Excerpt:

Japan is ahead of the curve when it comes to this trend, given that its elderly population is the highest of any country. Toyohashi University of Technology has developed Terapio, a robotic medical cart that can make hospital rounds, deliver medications and other items, and retrieve records. It follows a specific individual, such as a doctor or nurse, who can use it to record and access patient data. Terapio isn’t humanoid, but it does have expressive eyes that change shape and make it seem responsive. This type of robot will likely be one of the first to be implemented in hospitals because it has fairly minimal patient contact, works with staff, and has a benign appearance.

Partnership on AI to benefit people and society: Established to study and formulate best practices on AI technologies, to advance the public’s understanding of AI, and to serve as an open platform for discussion and engagement about AI and its influences on people and society.

GOALS

Support Best Practices

To support research and recommend best practices in areas including ethics, fairness, and inclusivity; transparency and interoperability; privacy; collaboration between people and AI systems; and the trustworthiness, reliability, and robustness of the technology.

Create an Open Platform for Discussion and Engagement

To provide a regular, structured platform for AI researchers and key stakeholders to communicate directly and openly with each other about relevant issues.

Advance Understanding

To advance public understanding and awareness of AI and its potential benefits and potential costs, to act as a trusted and expert point of contact as questions and concerns arise from the public and others in the area of AI, and to regularly update key constituents on the current state of AI progress.

IBM Watson’s latest gig: Improving cancer treatment with genomic sequencing — from techrepublic.com by Alison DeNisco

A new partnership between IBM Watson Health and Quest Diagnostics will combine Watson’s cognitive computing with genetic tumor sequencing for more precise, individualized cancer care.

Addendum on 11/1/16:

An open letter to Microsoft and Google’s Partnership on AI — from wired.com by Gerd Leonhard

In a world where machines may have an IQ of 50,000, what will happen to the values and ethics that underpin privacy and free will?

Excerpt:

Dear Francesca, Eric, Mustafa, Yann, Ralf, Demis and others at IBM, Microsoft, Google, Facebook and Amazon.

The Partnership on AI to benefit people and society is a welcome change from the usual celebration of disruption and magic technological progress. I hope it will also usher in a more holistic discussion about the global ethics of the digital age. Your announcement also coincides with the launch of my book Technology vs. Humanity which dramatises this very same question: How will technology stay beneficial to society?

This open letter is my modest contribution to the unfolding of this new partnership. Data is the new oil – which now makes your companies the most powerful entities on the globe, way beyond oil companies and banks. The rise of ‘AI everywhere’ is certain to only accelerate this trend. Yet unlike the giants of the fossil-fuel era, there is little oversight on what exactly you can and will do with this new data-oil, and what rules you’ll need to follow once you have built that AI-in-the-sky. There appears to be very little public stewardship, while accepting responsibility for the consequences of your inventions is rather slow in surfacing.
