From DSC:

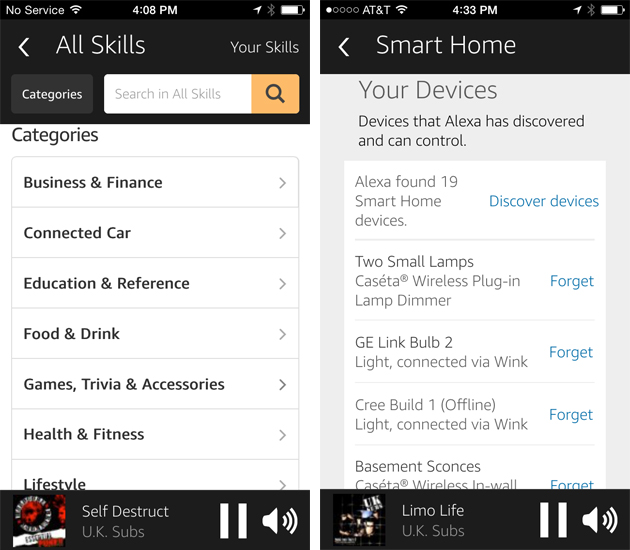

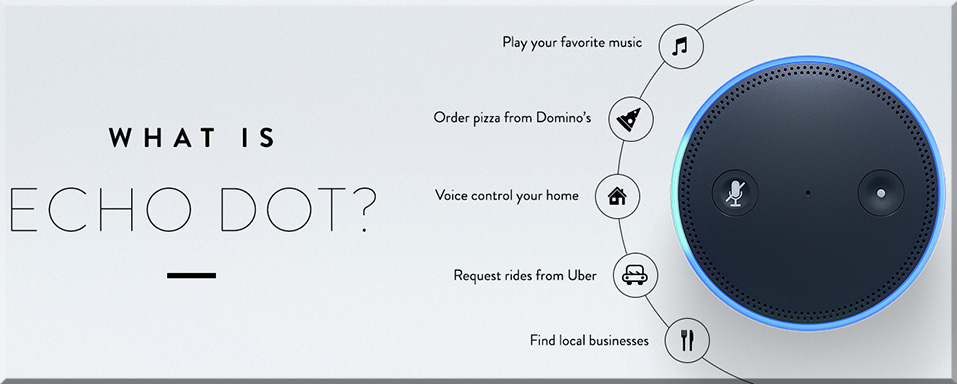

When I saw the article below, I couldn’t help but wonder…what are the teaching & learning-related ramifications when new “skills” are constantly being added to devices like Amazon’s Alexa?

What does it mean for:

- Students / learners

- Faculty members

- Teachers

- Trainers

- Instructional Designers

- Interaction Designers

- User Experience Designers

- Curriculum Developers

- …and others?

Will the capabilities found in Alexa simply come bundled as a part of the “connected/smart TVs” of the future? Hmm….

NASA unveils a skill for Amazon’s Alexa that lets you ask questions about Mars — from geekwire.com by Kevin Lisota

Excerpt:

Amazon’s Alexa has gained many skills over the past year, such as being able to read tweets or deliver election results and fantasy football scores. Starting on Wednesday, you’ll be able to ask Alexa about Mars.

The new skill for the voice-controlled speaker comes courtesy of NASA’s Jet Propulsion Laboratory. It’s the first Alexa app from the space agency.

Tom Soderstrom, the chief technology officer at NASA’s Jet Propulsion Laboratory, was on hand at the AWS re:Invent conference in Las Vegas tonight to make the announcement.

Also see:

What Is Alexa? What Is the Amazon Echo, and Should You Get One? — from thewirecutter.com by Grant Clauser

Amazon launches new artificial intelligence services for developers: Image recognition, text-to-speech, Alexa NLP — from geekwire.com by Taylor Soper

Excerpt (emphasis DSC):

Amazon today announced three new artificial intelligence-related toolkits for developers building apps on Amazon Web Services.

At the company’s AWS re:Invent conference in Las Vegas, Amazon showed how developers can use three new services — Amazon Lex, Amazon Polly, Amazon Rekognition — to build artificial intelligence features into apps for platforms like Slack, Facebook Messenger, Zendesk, and others.

The idea is to let developers utilize the machine learning algorithms and technology that Amazon has already created for its own processes and services like Alexa. Instead of developing their own AI software, AWS customers can simply use an API call or the AWS Management Console to incorporate AI features into their own apps.
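To make the "simply use an API call" idea concrete, here is a minimal sketch of what such a call might look like for Amazon Polly, using the boto3 SDK for Python. It is an illustration under stated assumptions, not code from the article: the helper names `build_polly_request` and `synthesize_to_file`, the default voice, and the region are all hypothetical choices, and the network call needs the third-party boto3 package plus configured AWS credentials.

```python
# Illustrative sketch only. The helper names, default voice, and region are
# assumptions made for this example; they do not come from the article.


def build_polly_request(text, voice_id="Joanna", output_format="mp3"):
    """Assemble the keyword arguments for Polly's SynthesizeSpeech operation."""
    if not text.strip():
        raise ValueError("text must not be empty")
    return {"Text": text, "VoiceId": voice_id, "OutputFormat": output_format}


def synthesize_to_file(text, path, region_name="us-east-1"):
    """Send the text to Amazon Polly and save the returned audio to `path`.

    Requires the boto3 package and valid AWS credentials on this machine.
    """
    import boto3  # imported lazily so build_polly_request works without boto3

    polly = boto3.client("polly", region_name=region_name)
    response = polly.synthesize_speech(**build_polly_request(text))
    # The response carries the audio as a stream of bytes.
    with open(path, "wb") as audio_file:
        audio_file.write(response["AudioStream"].read())
    return path
```

With credentials in place, something like `synthesize_to_file("Welcome to today's lesson.", "welcome.mp3")` would be expected to write a spoken version of that sentence to disk, which hints at how a course tool could read content aloud without any speech technology being built in-house.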

Amazon announces three new AI services, including a text-to-voice service, Amazon Polly — by D.B. Hebbard

AWS Announces Three New Amazon AI Services

Amazon Lex, the technology that powers Amazon Alexa, enables any developer to build rich, conversational user experiences for web, mobile, and connected device apps; preview starts today

Amazon Polly transforms text into lifelike speech, enabling apps to talk with 47 lifelike voices in 24 languages

Amazon Rekognition makes it easy to add image analysis to applications, using powerful deep learning-based image and face recognition

Capital One, Motorola Solutions, SmugMug, American Heart Association, NASA, HubSpot, Redfin, Ohio Health, DuoLingo, Royal National Institute of Blind People, LingApps, GoAnimate, and Coursera are among the many customers using these Amazon AI Services

Excerpt:

SEATTLE–(BUSINESS WIRE)–Nov. 30, 2016– Today at AWS re:Invent, Amazon Web Services, Inc. (AWS), an Amazon.com company (NASDAQ: AMZN), announced three Artificial Intelligence (AI) services that make it easy for any developer to build apps that can understand natural language, turn text into lifelike speech, have conversations using voice or text, analyze images, and recognize faces, objects, and scenes. Amazon Lex, Amazon Polly, and Amazon Rekognition are based on the same proven, highly scalable Amazon technology built by the thousands of deep learning and machine learning experts across the company. Amazon AI services all provide high-quality, high-accuracy AI capabilities that are scalable and cost-effective. Amazon AI services are fully managed services so there are no deep learning algorithms to build, no machine learning models to train, and no up-front commitments or infrastructure investments required. This frees developers to focus on defining and building an entirely new generation of apps that can see, hear, speak, understand, and interact with the world around them.

To learn more about Amazon Lex, Amazon Polly, or Amazon Rekognition, visit:

https://aws.amazon.com/amazon-ai