From DSC: By the way, another title for this blog posting could have been:

WIN-WIN situations all around! Theatre Departments could collaborate with other departments/disciplines to develop highly engaging, digitally based learning experiences!

The future of drama and the theatre (as well as opera, symphonies, and more) will likely include a significant virtual/digital component. While it's too early to say that theatre needs to reinvent itself entirely and move "the stage" completely online, below is an idea that could create a variety of WIN-WIN situations for actors, actresses, stage designers, digital audio/video editors, fine artists, graphic designers, programmers, writers, journalists, web designers, and many others, including the relevant faculty members!

A new world of creative, engaging, active learning could open up if those involved with the Theatre Department worked collaboratively with students and faculty members from other disciplines. In the end, the resulting learning experiences and content would be highly engaging, and perhaps even profitable for the institutions themselves!

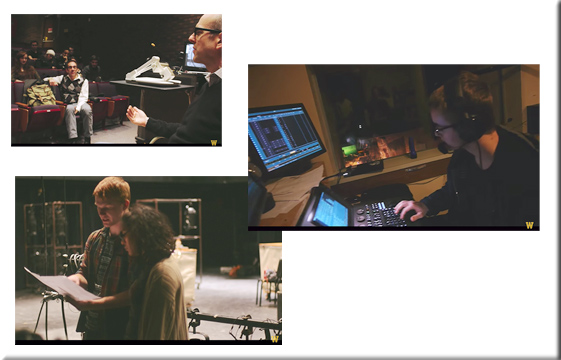

[DC: I only slightly edited the above image from the Theatre Department at WMU]

Though integrating acting with online learning materials is not a new idea, this post encourages a far more significant interdisciplinary collaboration between the Theatre Department and other departments/disciplines.

Consider a topic such as "Dealing with Bias in Journalism" for a class in the Digital Media and Journalism major.

- Students from the Theatre Department work with students from the most appropriate class(es) in the Communications Department to write the script, following the faculty members' 30,000-foot (high-level) instructions rather than 1,000-foot (detailed) ones.

- Writing the script would draw on skills in research, collaboration, persuasion, creativity, communication, writing, and more.

- The Theatre students would ultimately act out the script, backed up by students learning sound design, stage design, lighting design, costume design, etc.

- Example scene: A woman is sitting at the kitchen table, eating breakfast and reading aloud an article from a website. The article contains some serious bias that offends her. She threatens to cancel her subscription, contact the editor, and more. She calls out to her partner to explain why she's so upset about the article.

- Perhaps there could be two or more before/after scenes, each reflecting changes in the way the article was written.

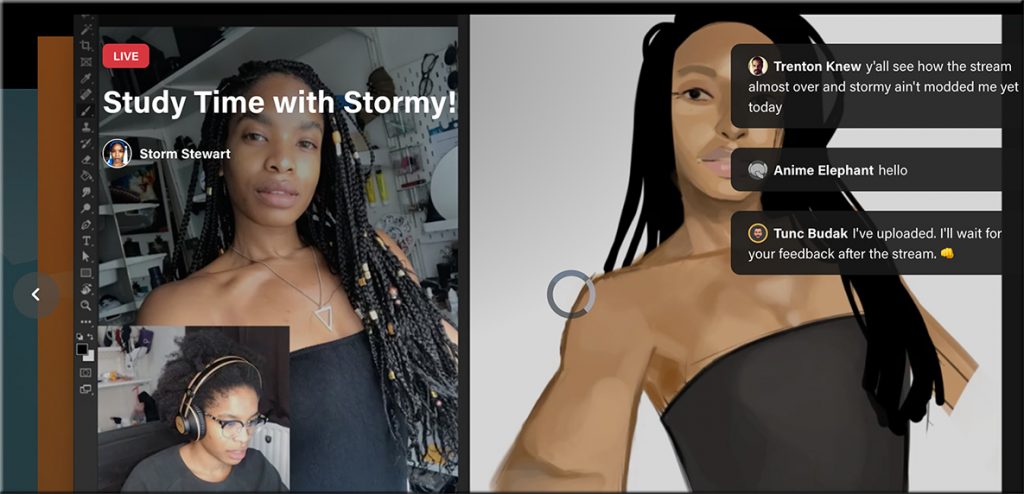

- Once the scenes are shot, the digital video editors, programmers, web designers, and others could work with the faculty members to integrate that material into an engaging, interactive, branching learning experience.

- From there, the relevant faculty members would deploy the finished product.

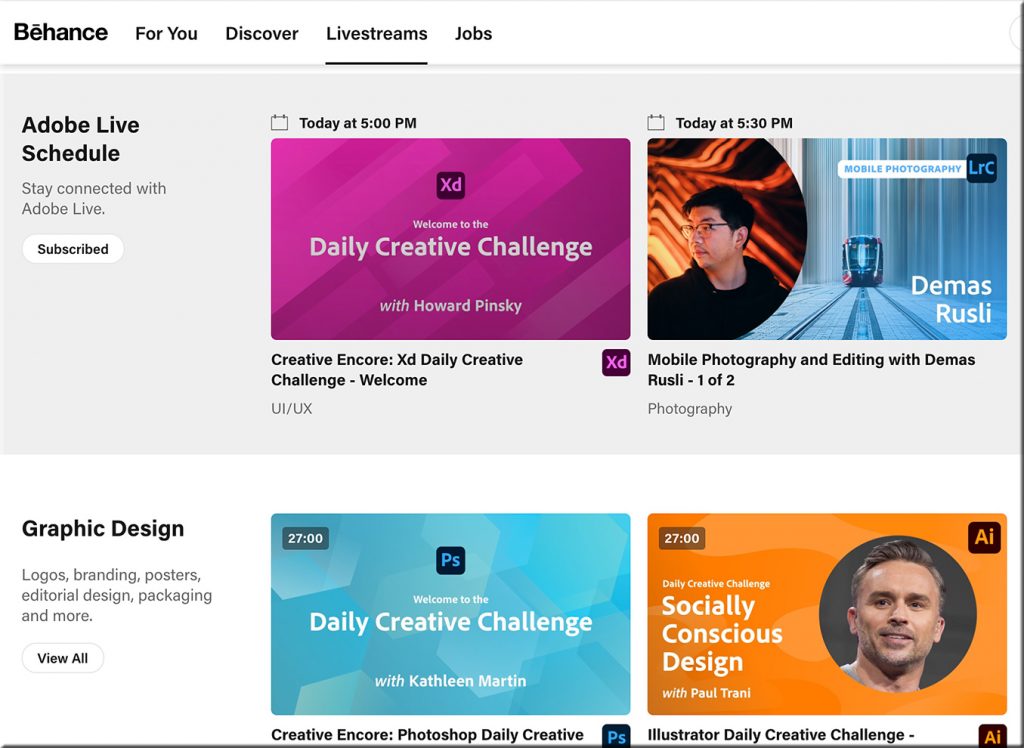

Colleges and universities could share content with each other and/or charge others for their products, content, and learning experiences. In the future, I could easily see a marketplace for buying and selling such engaging content. This could create a much-needed new source of revenue, especially given that those large auditoriums and theaters are likely not bringing in as much revenue as they typically would.

Colleges and universities could also reach out to local acting groups to get them involved, continuing to create feeders into the world of work.

Other tags/categories could include:

- MOOCs

- Learning from the Living[Class]Room

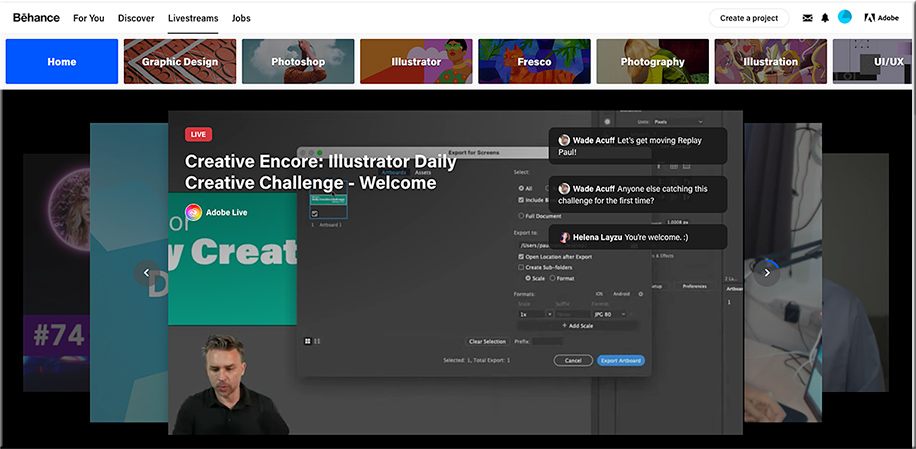

- Multimedia/digital literacy: tools from Adobe, Apple, and others

- Passions, participation, engagement, attention

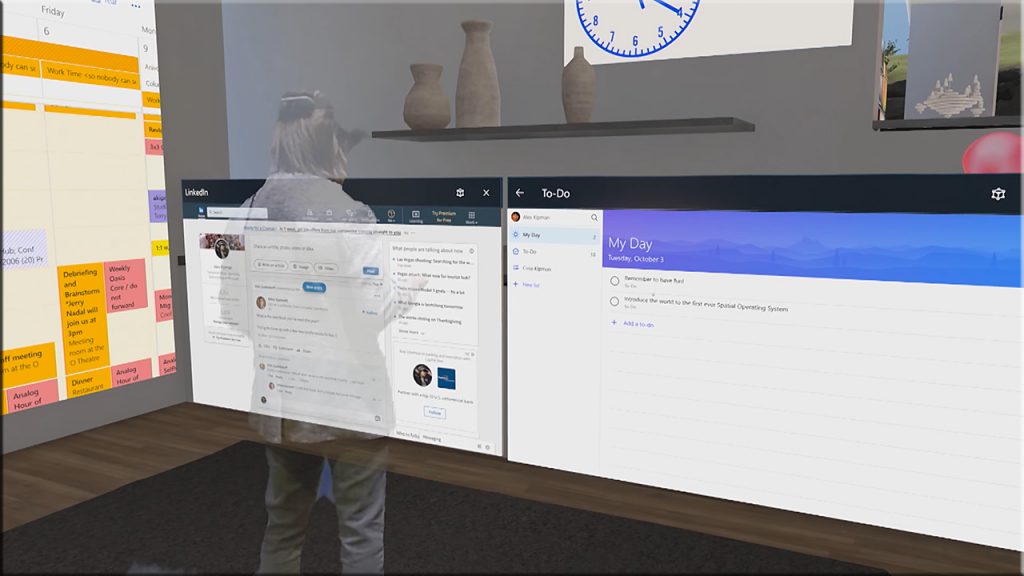

- XR: Creating immersive, Virtual Reality (VR)-based experiences

- Learning Experience Design

- Interaction Design

- Interface Design

- …and more

Also see:

What improv taught me about failure: As a teacher and academic — from scholarlyteacher.com by Katharine Hubbard

In improv, the only way to “fail” is to overthink and not have fun, which reframed what failure was on a grand scale and made me start looking at academia through the same lens. What I learned about failure through improv comes back to those same two core concepts: have fun and stop overthinking.

…

Students are more engaged when the professor is having fun with the materials (Keller, Hoy, Goetz, & Frenzel, 2016), and teaching is more enjoyable when we are having fun ourselves.