The World Will Be Painted With Data — from forbes.com by Charlie Fink

Excerpt:

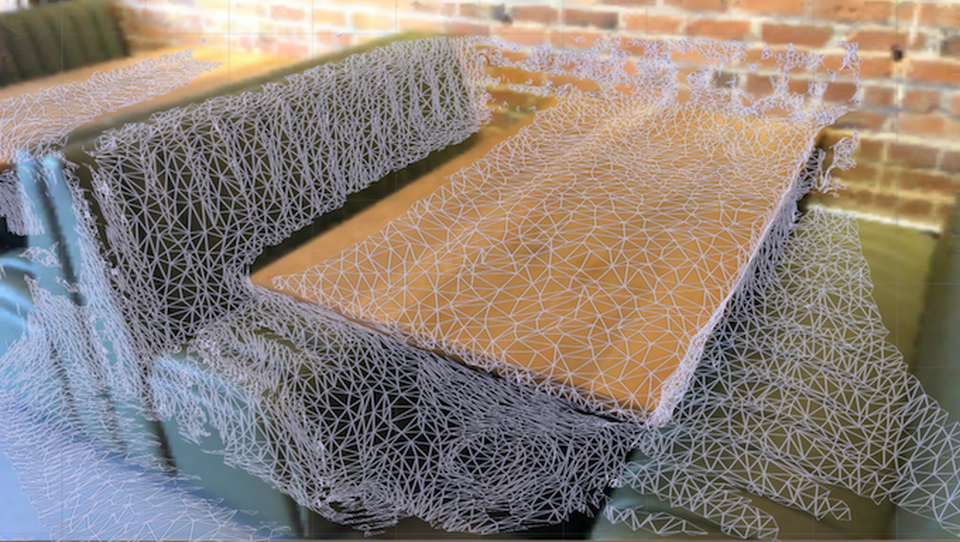

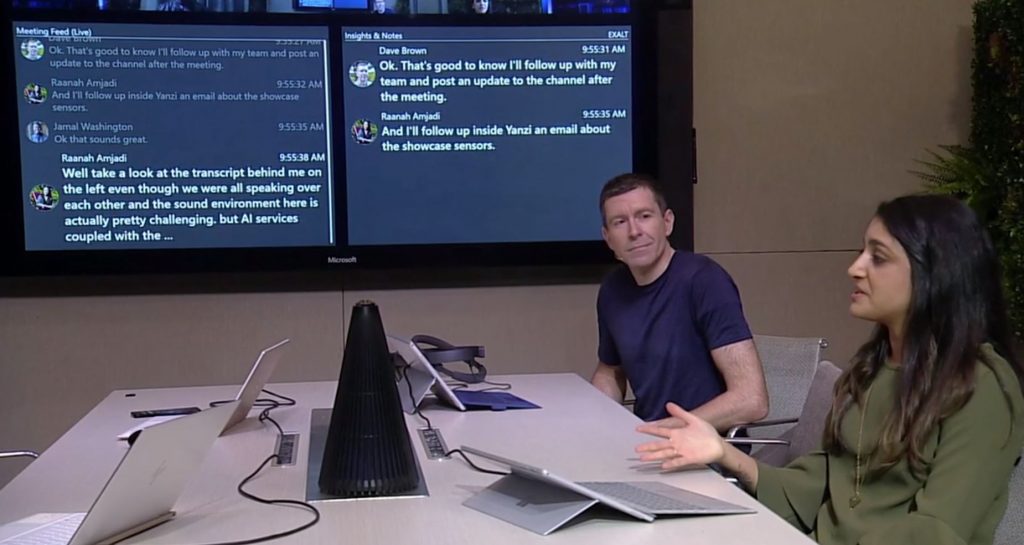

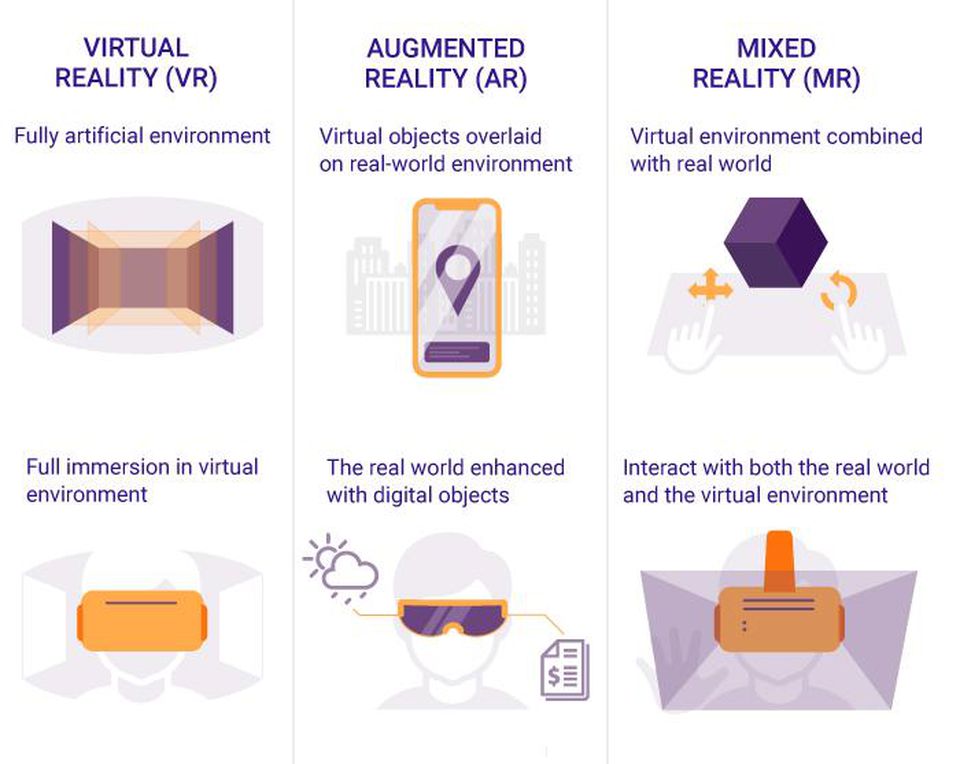

The world is about to be painted with data. Every place. Every person. Every thing. In the near term this invisible digital layer will be revealed by the camera in your phone, but in the long term it will be incorporated into a wearable device, likely a head-mounted display (HMD) integrating phone, audio, and AI assistants. Users will control the system with a combination of voice, gesture and ring controller. Workers in factories use monocular displays to do this now, but it’s going to be quite some time before this benefits consumers. While this coming augmentation of man represents an evolutionary turning point, its adoption will resemble that of the personal computer, which took at least fifteen years. Mobile AR, on the other hand, is here now, and in a billion Android and Apple smartphones, which are about to get a lot better. Thanks to AR, we can start building the world’s digital layer for the smartphone, right now, without waiting for HMDs to unlock the benefits of an AR-enabled world.

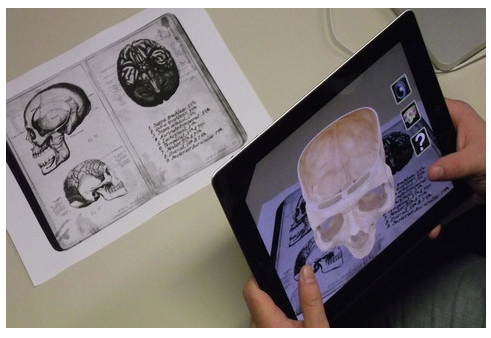

12 hot augmented reality ideas for your business — from information-age.com

Augmented reality is one of the most exciting technologies to have made its way into the mass market in recent years.

Excerpt:

In this article, we will tell you about ways to use this technology in a mobile app other than gaming and give you some augmented reality business ideas.

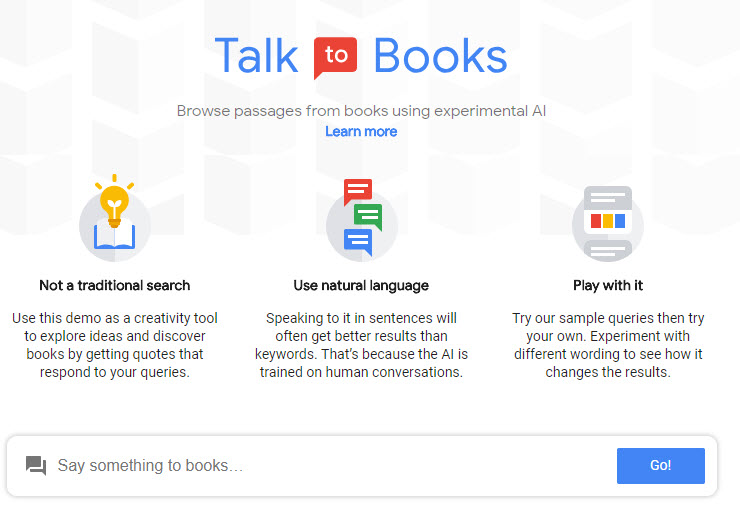

Google Maps is getting augmented reality directions and recommendation features — from theverge.com by Chaim Gartenberg

Plus, the ability to vote on restaurants with friends

Excerpt:

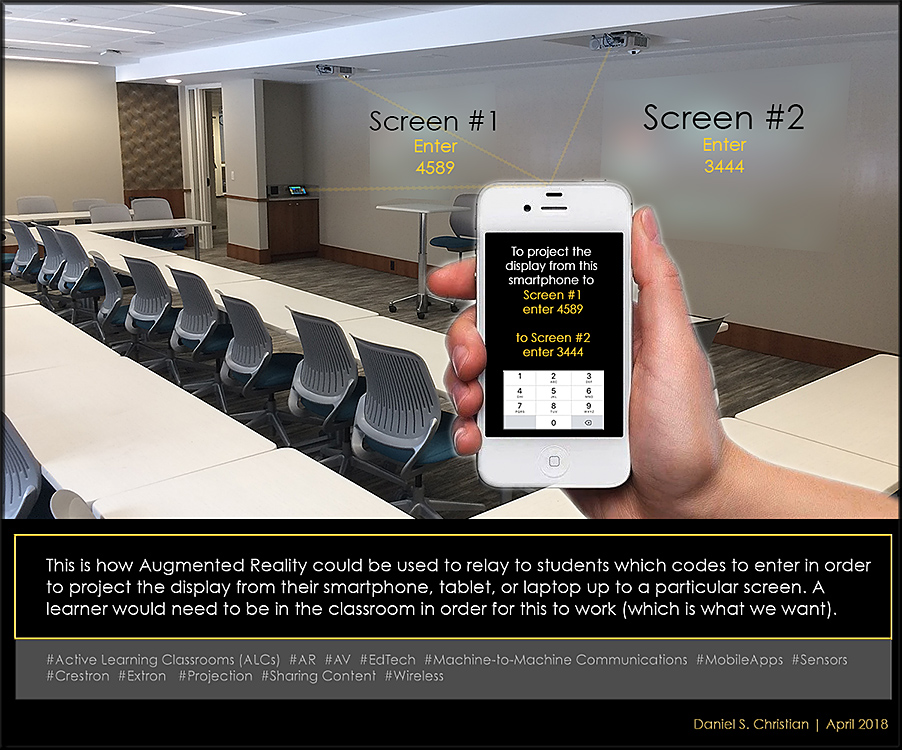

The new AR features combine Google’s existing Street View and Maps data with a live feed from your phone’s camera to overlay walking directions on top of the real world and help you figure out which way you need to go.

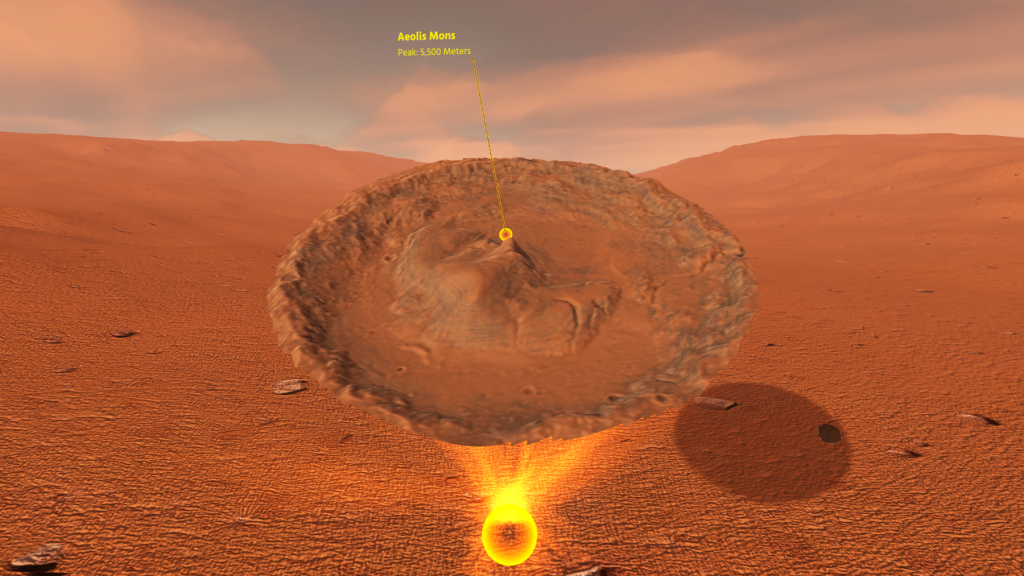

VR Travel: Virtual Reality Can Show You The World — from appreal-vr.com by Yariv Levski

Excerpt:

The VR travel industry may be in its infancy, but if you expect to see baby steps leading to market adoption, think again. Digital travel sales are expected to reach $198 billion this year, with virtual reality travel apps and VR tours capturing a good share of market revenue.

Of course, this should come as no surprise. Consumers increasingly turn to digital media when planning aspects of their lives, from recreational activities to retirement. Because VR has the power to engage travelers like no other technology can do, it is a natural step in the evolution of the travel industry. It is also likely to disrupt travel planning as we know it.

In this article, we will explore VR travel technology, and what it means for business in 2018.

From Inside VR & AR

HP Inc. is teaming up with DiSTI to create VR training programs for enterprise customers. DiSTI is a platform for user interface software and custom 3D training solutions. The companies are partnering to create maintenance and operations training in VR for vehicle, aircraft and industrial equipment systems. DiSTI’s new VE Studio software lets customers develop their own virtual training applications or have DiSTI and HP professional services teams assist in designing and building the program. — TECHRADAR

HP and DiSTI to enhance enterprise training through VR solutions — from techradar.com

Global alliance will combine HP’s VR solutions with DiSTI’s advanced development platform

Excerpt:

HP Inc. today announced an alliance with the DiSTI Corporation, a leading global provider of VR and advanced human machine interface development solutions, to address the growing demand for high-impact, cost-effective VR training.

The two companies will work together to develop unique VR training solutions for enterprise customers, with a specific focus on maintenance and operations training for complex systems such as vehicle, aircraft and industrial equipment.

Addendum:

- Why 360 Video and Virtual Reality Matters and 5 Great Ways To Use It — from mediamerse.com

Excerpt:

It’s a different approach to storytelling: Just as standard video is a step up from photography in terms of immersiveness, 360 video and VR amp this up considerably further. Controlling what’s in the frame and editing to hone in on the elements of the picture that we’d like the viewer to focus on is somewhat ‘easy’ with photography. With moving pictures (video), this is harder but with the right use of the camera, it’s still easy to direct the viewer’s attention to the elements of the narrative we’d like to highlight. Since 360 and VR allow the user to essentially take control of the camera, content creators have a lot less control in terms of capturing attention. This has its upsides too though… 360 video and particularly VR provide for a very rich sensory environment that standard video just can’t match.