Clay Shirky on Mega-Universities and Scale — from philonedtech.com by Clay Shirky

[This was a guest post by Clay Shirky that grew out of a conversation that Clay and Phil had about IPEDS enrollment data. Most of the graphs are provided by Phil.]

Excerpts:

Were half a dozen institutions to dominate the online learning landscape with no end to their expansion, or shift what Americans seek in a college degree, that would indeed be one of the greatest transformations in the history of American higher education. The available data, however, casts doubt on that idea.

Though much of the conversation around mega-universities is speculative, we already know what a mega-university actually looks like, one much larger than any university today. It looks like the University of Phoenix, or rather it looked like Phoenix at the beginning of this decade, when it had 470,000 students, the majority of whom took some or all of their classes online. Phoenix back then was six times the size of the next-largest school, Kaplan, with 78,000 students, and nearly five times the size of any university operating today.

From that high-water mark, Phoenix has lost an average of 40,000 students every year of this decade.

From DSC:

First of all, I greatly appreciate both Clay’s and Phil’s thought leadership and their respective contributions to education and learning through the years. I value their perspectives and their work. Clay and Phil offer up a great article here — one worth your time to read.

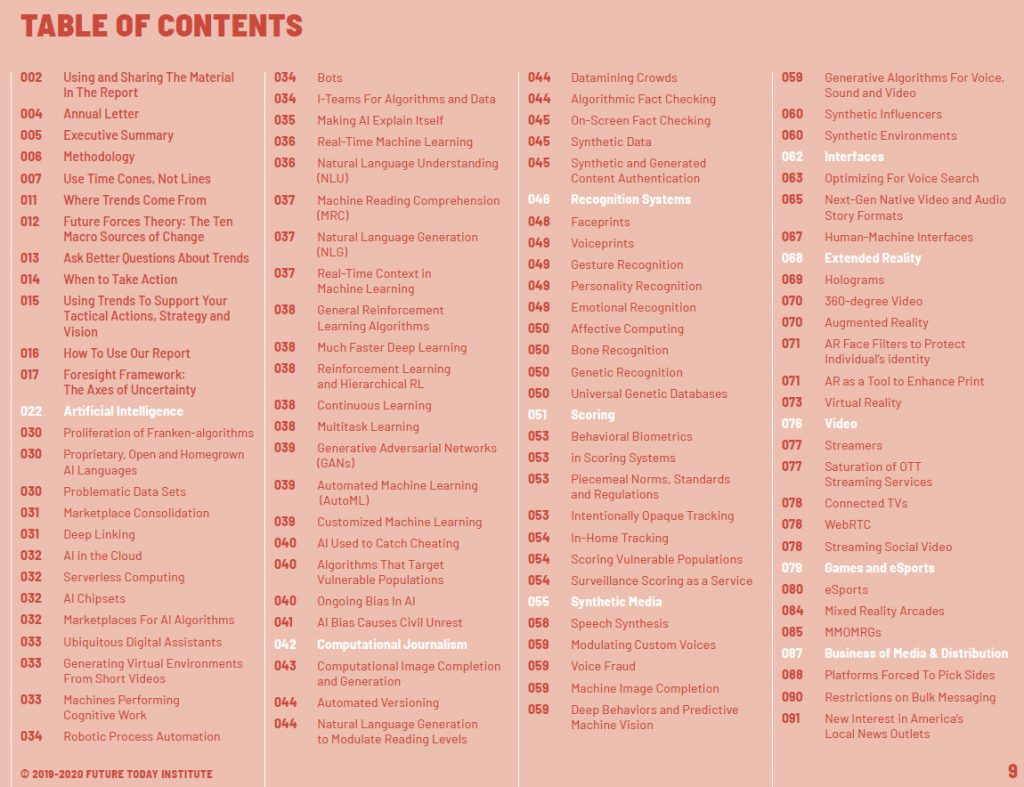

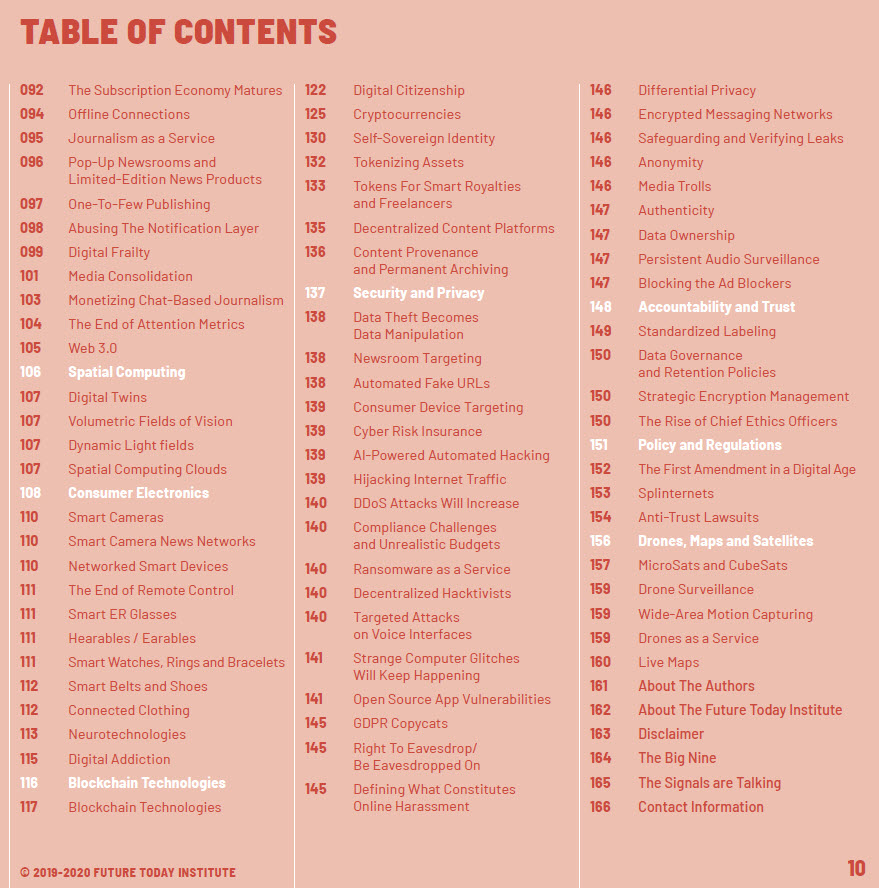

The article made me reflect on what I’ve been building upon and tracking for the last decade: a next-generation ***PLATFORM*** that I believe will represent a powerful piece of a global learning ecosystem. I call this vision “Learning from the Living [Class] Room.” Though the artificial intelligence-backed platform that I’m envisioning doesn’t yet fully exist, this new era and type of learning-based platform ARE coming. The emerging signs, technologies, trends (and “fingerprints” of it, if you will) are beginning to develop all over the place.

Such a platform will:

- Be aimed at the lifelong learner.

- Offer up major opportunities to stay relevant and up-to-date with one’s skills.

- Offer access to the program offerings from many organizations — including the mega-universities, but also, from many other organizations that are not nearly as large as the mega-universities.

- Rely upon human teachers, professors, trainers, and subject matter experts, but be backed up by powerful AI-based technologies/tools. For example, AI-based tools will pulse-check the open job descriptions and the needs of business and present the top ___ areas to go into (how long those areas/jobs last is anyone’s guess, given the exponential pace of technological change).

…

Below are some quotes that I want to comment on:

Not nothing, but not the kind of environment that will produce an educational Amazon either, especially since the top 30 actually shrank by 0.2% a year.

Instead of an “Amazon vs. the rest” dynamic, online education is turning into something much more widely adopted, where the biggest schools are simply the upper end of a continuum, not so different from their competitors, and not worth treating as members of a separate category.

Since the founding of William and Mary, the country’s second college, higher education in the U.S. hasn’t been a winner-take-all market, and it isn’t one today. We are not entering a world where the largest university operates at outsized scale, we’re leaving that world;

From DSC:

I don’t see us leaving that world at all…but that’s not my main reflection here. My focus is not on how large the mega-universities will become. When I speak of a forthcoming Walmart of Education or Amazon of Education, what I have in mind is a platform…not one particular organization.

Consider that the vast majority of Amazon’s revenues come from products that other organizations produce. Amazon is a platform, if you will. And in the world of platforms (i.e., software), it IS a winner-take-all market.

Bill Gates reflects on this as well in this recent article from The Verge:

“In the software world, particularly for platforms, these are winner-take-all markets.”

So it’s all about a forthcoming platform — or platforms. (It could be more than one platform. Consider Apple. Consider Microsoft. Consider Google. Consider Facebook.)

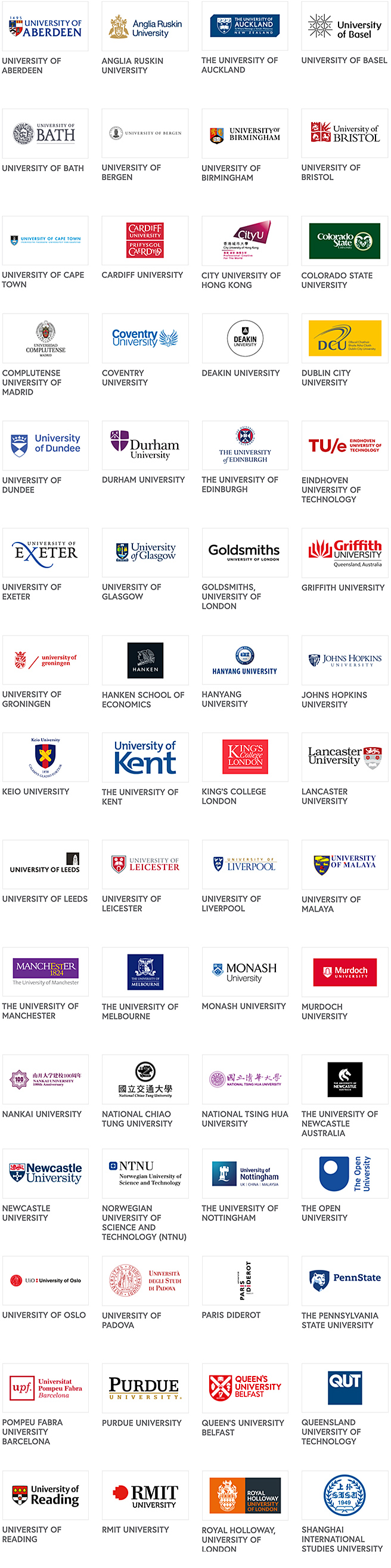

But then the question becomes…would a large number of universities (and other types of organizations) be willing to offer up their courses on a platform? Well, consider what’s ALREADY happening with FutureLearn:

Finally…one more excerpt from Clay’s article:

Eventually the new ideas lose their power to shock, and end up being widely copied. Institutional transformation starts as heresy and ends as a section in the faculty handbook.

From DSC:

This is a great point. Reminds me of this tweet from Fred Steube (and I added a piece about Western Telegraph):

Some things to reflect upon…for sure.