EdTech Is Going Crazy For AI — from joshbersin.com by Josh Bersin

Excerpts:

This week I spent a few days at the ASU/GSV conference and ran into 7,000 educators, entrepreneurs, and corporate training people who had gone CRAZY for AI.

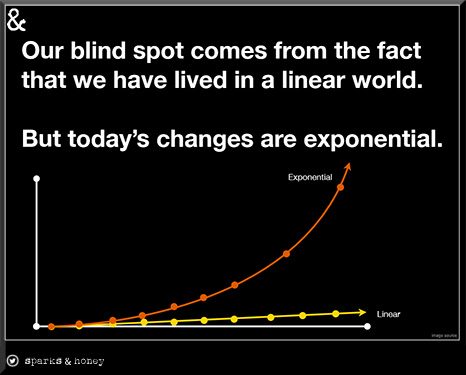

No, I’m not kidding. This community, which is made up of people like training managers, community college leaders, educators, and policymakers, is absolutely freaked out about ChatGPT, Large Language Models, and all sorts of issues with AI. Now don’t get me wrong: I’m a huge fan of this. But the frenzy is unprecedented: this is bigger than the excitement at the launch of the iPhone.

Second, the L&D market is about to get disrupted like never before. I had two interactive sessions with about 200 L&D leaders and I essentially heard the same thing over and over. What is going to happen to our jobs when these Generative AI tools start automatically building content, assessments, teaching guides, rubrics, videos, and simulations in seconds?

The answer is pretty clear: you’re going to get disrupted. I’m not saying that L&D teams need to worry about their careers, but it’s very clear to me they’re going to have to swim upstream in a big hurry. As with all new technologies, it’s time for learning leaders to get to know these tools, understand how they work, and start to experiment with them as fast as they can.

Speaking of the ASU+GSV Summit, see this posting from Michael Moe:

EIEIO…Brave New World

By: Michael Moe, CFA, Brent Peus, Owen Ritz

Excerpt:

Last week, the 14th annual ASU+GSV Summit hosted over 7,000 leaders from 70+ companies as well as over 900 of the world’s most innovative EdTech companies. Below are some of our favorite speeches from this year’s Summit…

***

Also see:

Imagining what’s possible in lifelong learning: Six insights from Stanford scholars at ASU+GSV — from acceleratelearning.stanford.edu by Isabel Sacks

Excerpt:

High-quality tutoring is one of the most effective educational interventions we have – but we need both humans and technology for it to work. In a standing-room-only session, GSE Professor Susanna Loeb, a faculty lead at the Stanford Accelerator for Learning, spoke alongside school district superintendents on the value of high-impact tutoring. The most important factors in effective tutoring, she said, are (1) the tutor has data on specific areas where the student needs support, (2) the tutor has high-quality materials and training, and (3) there is a positive, trusting relationship between the tutor and student. New technologies, including AI, can make the first and second elements much easier – but they will never be able to replace human adults in the relational piece, which is crucial to student engagement and motivation.

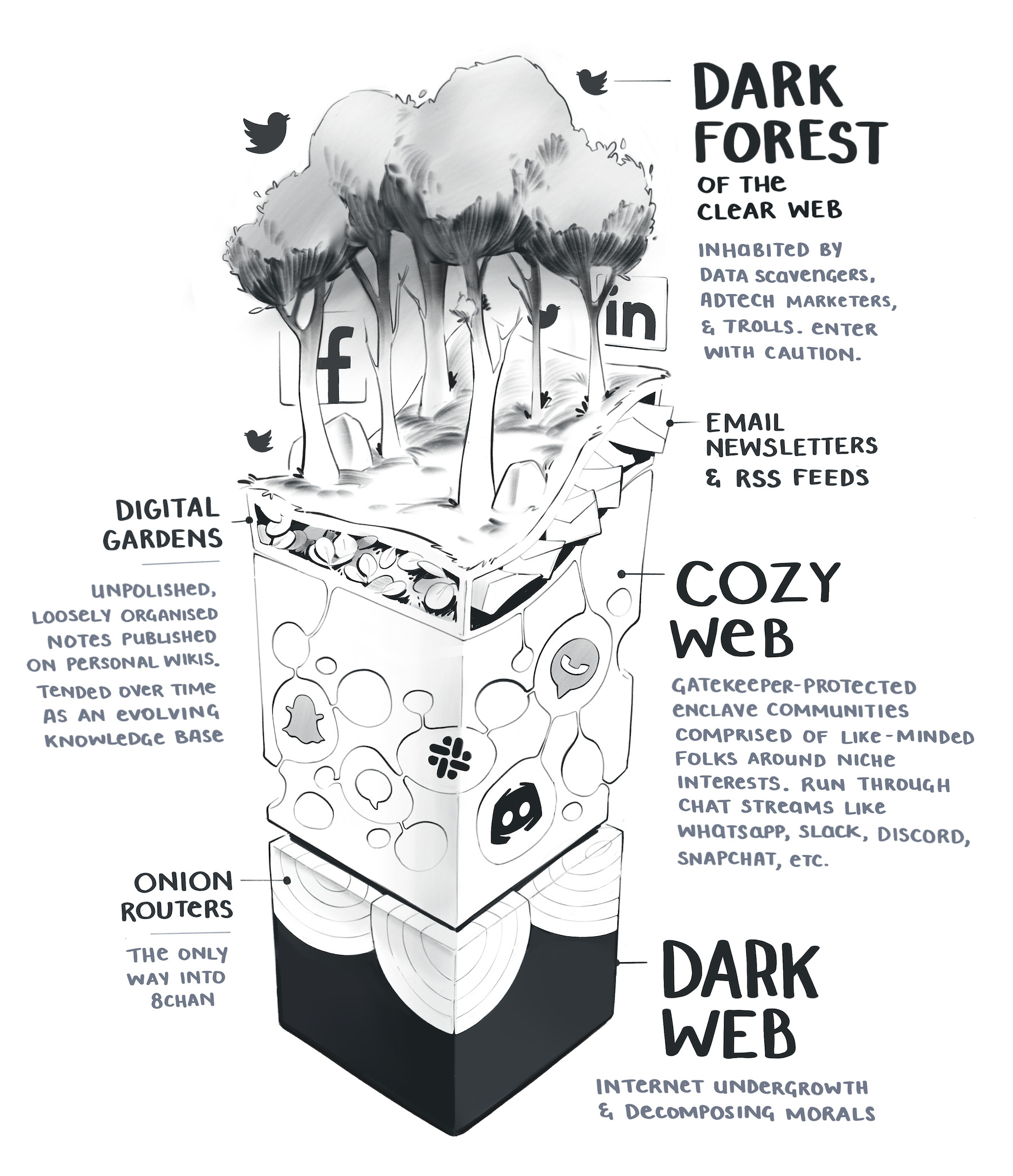

ChatGPT, Bing Chat, Google’s Bard—AI is infiltrating the lives of billions.

The 1% who understand it will run the world.

Here’s a list of key terms to jumpstart your learning:

— Misha (@mishadavinci) April 23, 2023

A guide to prompting AI (for what it is worth) — from oneusefulthing.org by Ethan Mollick

A little bit of magic, but mostly just practice

Excerpt (emphasis DSC):

Being “good at prompting” is a temporary state of affairs. The current AI systems are already very good at figuring out your intent, and they are getting better. Prompting is not going to be that important for that much longer. In fact, it already isn’t in GPT-4 and Bing. If you want to do something with AI, just ask it to help you do the thing. “I want to write a novel, what do you need to know to help me?” will get you surprisingly far.

…

The best way to use AI systems is not to craft the perfect prompt, but rather to use them interactively. Try asking for something. Then ask the AI to modify or adjust its output. Work with the AI, rather than trying to issue a single command that does everything you want. The more you experiment, the better off you are. Just use the AI a lot, and it will make a big difference – a lesson my class learned as they worked with the AI to create essays.

From DSC:

Agreed –> “Being ‘good at prompting’ is a temporary state of affairs.” The user interfaces that are appearing now, and those still to come, will help greatly in this regard.

From DSC:

Bizarre…at least for me in late April of 2023:

FaceTiming live with AI… This app came across the @ElunaAI Discord and I was very impressed with its responsiveness, natural expression and language, etc…

Feels like the beginning of another massive wave in consumer AI products.

…who’s seen the movie HER? pic.twitter.com/By3dsew91Z

— Roberto Nickson (@rpnickson) April 26, 2023

Excerpt from Lore Issue #28: Drake, Grimes, and The Future of AI Music — from lore.com

Here’s a summary of what you need to know:

- The rise of AI-generated music has ignited legal and ethical debates, with record labels invoking copyright law to remove AI-generated songs from platforms like YouTube.

- Tech companies like Google face a conundrum: should they take down AI-generated content, and if so, on what grounds?

- Some artists, like Grimes, are embracing the change, proposing new revenue-sharing models and utilizing blockchain-based smart contracts for royalties.

- The future of AI-generated music presents both challenges and opportunities, with the potential to create new platforms and genres, democratize the industry, and redefine artist compensation.

The Need for AI PD — from techlearning.com by Erik Ofgang

Educators need training on how to effectively incorporate artificial intelligence into their teaching practice, says Lance Key, an award-winning educator.

“School never was fun for me,” he says, hoping that as an educator he could change that with his students. “I wanted to make learning fun.” This ‘learning should be fun’ philosophy is at the heart of the approach he advises educators take when it comes to AI.

Coursera Adds ChatGPT-Powered Learning Tools — from campustechnology.com by Kate Lucariello

Excerpt:

At its 11th annual conference in 2023, educational company Coursera announced it is adding ChatGPT-powered interactive ed tech tools to its learning platform, including a generative AI coach for students and an AI course-building tool for teachers. It will also add machine learning-powered translation, expanded VR immersive learning experiences, and more.

Coursera Coach will give learners a ChatGPT virtual coach to answer questions, give feedback, summarize video lectures and other materials, give career advice, and prepare them for job interviews. This feature will be available in the coming months.

From DSC:

Yes…it will be very interesting to see how tools and platforms interact from this time forth. The term “integration” will take a massive step forward, at least in my mind.
