What a beautiful visualization pic.twitter.com/A10vpOoBdr

— Love Music (@khnh80044) July 7, 2025

Thoughts on thinking — from dcurt.is by Dustin Curtis

Intellectual rigor comes from the journey: the dead ends, the uncertainty, and the internal debate. Skip that, and you might still get the insight–but you’ll have lost the infrastructure for meaningful understanding. Learning by reading LLM output is cheap. Real exercise for your mind comes from building the output yourself.

The irony is that I now know more than I ever would have before AI. But I feel slightly dumber. A bit more dull. LLMs give me finished thoughts, polished and convincing, but none of the intellectual growth that comes from developing them myself.

Using AI Right Now: A Quick Guide — from oneusefulthing.org by Ethan Mollick

Which AIs to use, and how to use them

Every few months I put together a guide on which AI system to use. Since I last wrote my guide, however, there has been a subtle but important shift in how the major AI products work. Increasingly, it isn’t about the best model, it is about the best overall system for most people. The good news is that picking an AI is easier than ever and you have three excellent choices. The challenge is that these systems are getting really complex to understand. I am going to try and help a bit with both.

First, the easy stuff.

Which AI to Use

For most people who want to use AI seriously, you should pick one of three systems: Claude from Anthropic, Google’s Gemini, and OpenAI’s ChatGPT.

Also see:

Student Voice, Socratic AI, and the Art of Weaving a Quote — from elmartinsen.substack.com by Eric Lars Martinsen

How a custom bot helps students turn source quotes into personal insight—and share it with others

This summer, I tried something new in my fully online, asynchronous college writing course. These classes have no Zoom sessions. No in-person check-ins. Just students, Canvas, and a lot of thoughtful design behind the scenes.

One activity I created was called QuoteWeaver—a PlayLab bot that helps students do more than just insert a quote into their writing.

It’s a structured, reflective activity that mimics something closer to an in-person 1:1 conference or a small group quote workshop—but in an asynchronous format, available anytime. In other words, it’s using AI not to speed students up, but to slow them down.

…

The bot begins with a single quote that the student has found through their own research. From there, it acts like a patient writing coach, asking open-ended, Socratic questions such as:

What made this quote stand out to you?

How would you explain it in your own words?

What assumptions or values does the author seem to hold?

How does this quote deepen your understanding of your topic?

It doesn’t move on too quickly. In fact, it often rephrases and repeats, nudging the student to go a layer deeper.

The Disappearance of the Unclear Question — from jeppestricker.substack.com by Jeppe Klitgaard Stricker

New Piece for UNESCO Education Futures

On [6/13/25], UNESCO published a piece I co-authored with Victoria Livingstone at Johns Hopkins University Press. It’s called The Disappearance of the Unclear Question, and it’s part of the ongoing UNESCO Education Futures series – an initiative I appreciate for its thoughtfulness and depth on questions of generative AI and the future of learning.

Our piece raises a small but important red flag. Generative AI is changing how students approach academic questions, and one unexpected side effect is that unclear questions – for centuries a trademark of deep thinking – may be beginning to disappear. Not because they lack value, but because they don’t always work well with generative AI. Quietly and unintentionally, students (and teachers) may find themselves gradually avoiding them altogether.

Of course, that would be a mistake.

We’re not arguing against using generative AI in education. Quite the opposite. But we do propose that higher education needs a two-phase mindset when working with this technology: one that recognizes what AI is good at, and one that insists on preserving the ambiguity and friction that learning actually requires to be successful.

Leveraging GenAI to Transform a Traditional Instructional Video into Engaging Short Video Lectures — from er.educause.edu by Hua Zheng

By leveraging generative artificial intelligence to convert lengthy instructional videos into micro-lectures, educators can enhance efficiency while delivering more engaging and personalized learning experiences.

This AI Model Never Stops Learning — from link.wired.com by Will Knight

Researchers at Massachusetts Institute of Technology (MIT) have now devised a way for LLMs to keep improving by tweaking their own parameters in response to useful new information.

The work is a step toward building artificial intelligence models that learn continually—a long-standing goal of the field and something that will be crucial if machines are to ever more faithfully mimic human intelligence. In the meantime, it could give us chatbots and other AI tools that are better able to incorporate new information including a user’s interests and preferences.

The MIT scheme, called Self Adapting Language Models (SEAL), involves having an LLM learn to generate its own synthetic training data and update procedure based on the input it receives.

Edu-Snippets — from scienceoflearning.substack.com by Nidhi Sachdeva and Jim Hewitt

Why knowledge matters in the age of AI; What happens to learners’ neural activity with prolonged use of LLMs for writing

Highlights:

Google I/O 2025: From research to reality — from blog.google

Here’s how we’re making AI more helpful with Gemini.

Google I/O 2025 LIVE — all the details about Android XR smart glasses, AI Mode, Veo 3, Gemini, Google Beam and more — from tomsguide.com by Philip Michaels

Google’s annual conference goes all in on AI

With a running time of 2 hours, Google I/O 2025 leaned heavily into Gemini and new models that make the assistant work in more places than ever before. Despite focusing the majority of the keynote around Gemini, Google saved its most ambitious and anticipated announcement towards the end with its big Android XR smart glasses reveal.

Shockingly, very little was spent around Android 16. Most of its Android 16 related news, like the redesigned Material 3 Expressive interface, was announced during the Android Show live stream last week — which explains why Google I/O 2025 was such an AI heavy showcase.

That’s because Google carved out most of the keynote to dive deeper into Gemini, its new models, and integrations with other Google services. There’s clearly a lot to unpack, so here’s all the biggest Google I/O 2025 announcements.

Our vision for building a universal AI assistant — from blog.google

We’re extending Gemini to become a world model that can make plans and imagine new experiences by simulating aspects of the world.

Making Gemini a world model is a critical step in developing a new, more general and more useful kind of AI — a universal AI assistant. This is an AI that’s intelligent, understands the context you are in, and that can plan and take action on your behalf, across any device.

By applying LearnLM capabilities, and directly incorporating feedback from experts across the industry, Gemini adheres to the principles of learning science to go beyond just giving you the answer. Instead, Gemini can explain how you get there, helping you untangle even the most complex questions and topics so you can learn more effectively. Our new prompting guide provides sample instructions to see this in action.

Learn in newer, deeper ways with Gemini — from blog.google.com by Ben Gomes

We’re infusing LearnLM directly into Gemini 2.5 — plus more learning news from I/O.

At I/O 2025, we announced that we’re infusing LearnLM directly into Gemini 2.5, which is now the world’s leading model for learning. As detailed in our latest report, Gemini 2.5 Pro outperformed competitors on every category of learning science principles. Educators and pedagogy experts preferred Gemini 2.5 Pro over other offerings across a range of learning scenarios, both for supporting a user’s learning goals and on key principles of good pedagogy.

Gemini gets more personal, proactive and powerful — from blog.google.com by Josh Woodward

It’s your turn to create, learn and explore with an AI assistant that’s starting to understand your world and anticipate your needs.

Here’s what we announced at Google IO:

Fuel your creativity with new generative media models and tools — from by Eli Collins

Introducing Veo 3 and Imagen 4, and a new tool for filmmaking called Flow.

AI in Search: Going beyond information to intelligence

We’re introducing new AI features to make it easier to ask any question in Search.

AI in Search is making it easier to ask Google anything and get a helpful response, with links to the web. That’s why AI Overviews is one of the most successful launches in Search in the past decade. As people use AI Overviews, we see they’re happier with their results, and they search more often. In our biggest markets like the U.S. and India, AI Overviews is driving over 10% increase in usage of Google for the types of queries that show AI Overviews.

This means that once people use AI Overviews, they’re coming to do more of these types of queries, and what’s particularly exciting is how this growth increases over time. And we’re delivering this at the speed people expect of Google Search — AI Overviews delivers the fastest AI responses in the industry.

In this story:

Make sure to animate your creations

video prompt: an ocean wave moving dramatically and a boat moving on the wave

*animated with Firefly Video Model https://t.co/BQmrN7kDiq pic.twitter.com/G5cMqcVuTW

— Kris Kashtanova (@icreatelife) May 16, 2025

New tools for ultimate character consistency — from heatherbcooper.substack.com by Heather B. Cooper

Plus simple & effective video prompt tips

We have some new tools for character, objects, and scene consistency with Runway and Midjourney.

Multimodal AI = multi-danger — from theneurondaily.com by Grant Harvey

According to a new report from Enkrypt AI, multimodal models have opened the door to sneakier attacks (like Ocean’s Eleven, but with fewer suits and more prompt injections).

Naturally, Enkrypt decided to run a few experiments… and things escalated quickly.

They tested two of Mistral’s newest models—Pixtral-Large and Pixtral-12B, built to handle words and visuals.

What they found? Yikes:

Rise of AI-generated deepfake videos spreads misinformation — from iblnews.org

Get the 2025 Student Guide to Artificial Intelligence — from studentguidetoai.org

This guide is made available under a Creative Commons license by Elon University and the American Association of Colleges and Universities (AAC&U).


AI Isn’t Just Changing How We Work — It’s Changing How We Learn — from entrepreneur.com by Aytekin Tank; edited by Kara McIntyre

AI agents are opening doors to education that just a few years ago would have been unthinkable. Here’s how.

Agentic AI is taking these already huge strides even further. Rather than simply asking a question and receiving an answer, an AI agent can assess your current level of understanding and tailor a reply to help you learn. They can also help you come up with a timetable and personalized lesson plan to make you feel as though you have a one-on-one instructor walking you through the process. If your goal is to learn to speak a new language, for example, an agent might map out a plan starting with basic vocabulary and pronunciation exercises, then progress to simple conversations, grammar rules and finally, real-world listening and speaking practice.

…

For instance, if you’re an entrepreneur looking to sharpen your leadership skills, an AI agent might suggest a mix of foundational books, insightful TED Talks and case studies on high-performing executives. If you’re aiming to master data analysis, it might point you toward hands-on coding exercises, interactive tutorials and real-world datasets to practice with.

The beauty of AI-driven learning is that it’s adaptive. As you gain proficiency, your AI coach can shift its recommendations, challenge you with new concepts and even simulate real-world scenarios to deepen your understanding.

Ironically, the very technology feared by workers can also be leveraged to help them. Rather than requiring expensive external training programs or lengthy in-person workshops, AI agents can deliver personalized, on-demand learning paths tailored to each employee’s role, skill level, and career aspirations. Given that 68% of employees find today’s workplace training to be overly “one-size-fits-all,” an AI-driven approach will not only cut costs and save time but will be more effective.

What’s the Future for AI-Free Spaces? — from higherai.substack.com by Jason Gulya

Please let me dream…

This is one reason why I don’t see AI-embedded classrooms and AI-free classrooms as opposite poles. The bone of contention, here, is not whether we can cultivate AI-free moments in the classroom, but for how long those moments are actually sustainable.

Can we sustain those AI-free moments for an hour? A class session? Longer?

…

Here’s what I think will happen. As AI becomes embedded in society at large, the sustainability of imposed AI-free learning spaces will get tested. Hard. I think it’ll become more and more difficult (though maybe not impossible) to impose AI-free learning spaces on students.

However, consensual and hybrid AI-free learning spaces will continue to have a lot of value. I can imagine classes where students opt into an AI-free space. Or they’ll even create and maintain those spaces.

Duolingo’s AI Revolution — from drphilippahardman.substack.com by Dr. Philippa Hardman

What 148 AI-Generated Courses Tell Us About the Future of Instructional Design & Human Learning

Last week, Duolingo announced an unprecedented expansion: 148 new language courses created using generative AI, effectively doubling their content library in just one year. This represents a seismic shift in how learning content is created — a process that previously took the company 12 years for their first 100 courses.

As CEO Luis von Ahn stated in the announcement, “This is a great example of how generative AI can directly benefit our learners… allowing us to scale at unprecedented speed and quality.”

In this week’s blog, I’ll dissect exactly how Duolingo has reimagined instructional design through AI, what this means for the learner experience, and most importantly, what it tells us about the future of our profession.

Are Mixed Reality AI Agents the Future of Medical Education? — from ehealth.eletsonline.com

Medical education is experiencing a quiet revolution—one that’s not taking place in lecture theatres or textbooks, but with headsets and holograms. At the heart of this revolution are Mixed Reality (MR) AI Agents, a new generation of devices that combine the immersive depth of mixed reality with the flexibility of artificial intelligence. These technologies are not mere flashy gadgets; they’re revolutionising the way medical students interact with complicated content, rehearse clinical skills, and prepare for real-world situations. By combining digital simulations with the physical world, MR AI Agents are redefining what it means to learn medicine in the 21st century.

4 Reasons To Use Claude AI to Teach — from techlearning.com by Erik Ofgang

Features that make Claude AI appealing to educators include a focus on privacy and conversational style.

After experimenting using Claude AI on various teaching exercises, from generating quizzes to tutoring and offering writing suggestions, I found that it’s not perfect, but I think it behaves favorably compared to other AI tools in general, with an easy-to-use interface and some unique features that make it particularly suited for use in education.

Students and folks looking for work may want to check out:

Also relevant/see:

From DSC:

Look out Google, Amazon, and others! Nvidia is putting the pedal to the metal in terms of being innovative and visionary! They are leaving the likes of Apple in the dust.

The top talent out there is likely to go to Nvidia for a while. Engineers, programmers/software architects, network architects, product designers, data specialists, AI researchers, developers of robotics and autonomous vehicles, R&D specialists, computer vision specialists, natural language processing experts, and many more types of positions will be flocking to Nvidia to work for a company that has already changed the world and will likely continue to do so for years to come.

NVIDIA just shook the AI and Robotic world at NVIDIA GTC 2025.

CEO Jensen Huang announced jaw-dropping breakthroughs.

Here are the top 11 key highlights you can’t afford to miss: (wait till you see no 3) pic.twitter.com/domejuVdw5

— The AI Colony (@TheAIColony) March 19, 2025

NVIDIA’s AI Superbowl — from theneurondaily.com by Noah and Grant

PLUS: Prompt tips to make AI writing more natural

That’s despite a flood of new announcements (here’s a 16 min video recap), which included:

For enterprises, NVIDIA unveiled DGX Spark and DGX Station—Jensen’s vision of AI-era computing, bringing NVIDIA’s powerful Blackwell chip directly to your desk.

Nvidia Bets Big on Synthetic Data — from wired.com by Lauren Goode

Nvidia has acquired synthetic data startup Gretel to bolster the AI training data used by the chip maker’s customers and developers.

Nvidia, xAI to Join BlackRock and Microsoft’s $30 Billion AI Infrastructure Fund — from investopedia.com by Aaron McDade

Nvidia and xAI are joining BlackRock and Microsoft in an AI infrastructure group seeking $30 billion in funding. The group was first announced in September as BlackRock and Microsoft sought to fund new data centers to power AI products.

Nvidia CEO Jensen Huang says we’ll soon see 1 million GPU data centers visible from space — from finance.yahoo.com by Daniel Howley

Nvidia CEO Jensen Huang says the company is preparing for 1 million GPU data centers.

Nvidia stock stems losses as GTC leaves Wall Street analysts ‘comfortable with long term AI demand’ — from finance.yahoo.com by Laura Bratton

Nvidia stock reversed direction after a two-day slide that saw shares lose 5% as the AI chipmaker’s annual GTC event failed to excite investors amid a broader market downturn.

Microsoft, Google, and Oracle Deepen Nvidia Partnerships. This Stock Got the Biggest GTC Boost. — from barrons.com by Adam Clark and Elsa Ohlen

The 4 Big Surprises from Nvidia’s ‘Super Bowl of AI’ GTC Keynote — from barrons.com by Tae Kim; behind a paywall

AI Super Bowl. Hi everyone. This week, 20,000 engineers, scientists, industry executives, and yours truly descended upon San Jose, Calif. for Nvidia’s annual GTC developers’ conference, which has been dubbed the “Super Bowl of AI.”

20 AI Agent Examples in 2025 — from autogpt.net

AI Agents are now deeply embedded in everyday life and quickly transforming industry after industry. The global AI market is expected to explode up to $1.59 trillion by 2030! That is a ton of intelligent agents operating behind the curtains.

That’s why in this article, we explore 20 real-life AI Agents that are causing a stir today.

Top 100 Gen AI apps, new AI video & 3D — from heatherbcooper.substack.com by Heather Cooper

Plus Runway Restyle, Luma Ray2 img2vid keyframes & extend

In the latest edition of Andreessen Horowitz’s “Top 100 Gen AI Consumer Apps,” the generative AI landscape has undergone significant shifts.

Notably, DeepSeek has emerged as a leading competitor to ChatGPT, while AI video models have advanced from experimental stages to more reliable tools for short clips. Additionally, the rise of “vibecoding” is broadening the scope of AI creators.

The report also introduces the “Brink List,” highlighting ten companies poised to enter the top 100 rankings.

AI is Evolving Fast – The Latest LLMs, Video Models & Breakthrough Tools — from heatherbcooper.substack.com by Heather Cooper

Breakthroughs in multimodal search, next-gen coding assistants, and stunning text-to-video tech. Here’s what’s new:

I do these comparisons frequently to measure the improvements in different models for text or image to video prompts. I hope it is helpful for you, as well!

I included 6 models for an image to video comparison:

Video Model Comparison: Image to video

6 Models included:

• Pika 2.1

• Adobe Firefly

• Runway Gen-3

• Kling 1.6

• Luma Ray2

• Hailuo T2V-01

This time I used an image generated with Magnific’s new Fluid model ( Google DeepMind’s Imagen + Mystic 2.5 ), and the same… pic.twitter.com/rH1gRbhynB

— Heather Cooper (@HBCoop_) February 19, 2025

Why Smart Companies Are Granting AI Immunity to Their Employees — from builtin.com by Matt Almassian

Employees are using AI tools whether they’re authorized or not. Instead of cracking down on AI usage, consider developing an AI amnesty program.

But the smartest companies aren’t cracking down. They’re flipping the script. Instead of playing AI police, they’re launching AI amnesty programs, offering employees a safe way to disclose their AI usage without fear of punishment. In doing so, they’re turning a security risk into an innovation powerhouse.

…

Before I dive into solutions, let’s talk about what keeps your CISO or CTO up at night. Shadow AI isn’t just about unauthorized tool usage — it’s a potential dirty bomb of security, compliance and operational risks that could explode at any moment.

…

6 Steps to an AI Amnesty Program

A first-ever study on prompts… — from theneurondaily.com

PLUS: OpenAI wants to charge $20K a month to replace you?!

What they discovered might change how you interact with AI:

That’s also why we think you, an actual human, should always place yourself as a final check between whatever your AI creates and whatever goes out into the world.

Leave it to Manus

“Manus is a general AI agent that bridges minds and actions: it doesn’t just think, it delivers results. Manus excels at various tasks in work and life, getting everything done while you rest.”

From DSC:

What could possibly go wrong?!

AI Search Has A Citation Problem — from cjr.org (Columbia Journalism Review) by Klaudia Jaźwińska and Aisvarya Chandrasekar

We Compared Eight AI Search Engines. They’re All Bad at Citing News.

We found that…

Chatbots were generally bad at declining to answer questions they couldn’t answer accurately, offering incorrect or speculative answers instead.

Our findings were consistent with our previous study, proving that our observations are not just a ChatGPT problem, but rather recur across all the prominent generative search tools that we tested.

5 new AI tools you’ll actually want to try — from wondertools.substack.com by Jeremy Kaplan

Chat with lifelike AI, clean up audio instantly, and reimagine your career

Hundreds of AI tools emerge every week. I’ve picked five new ones worth exploring. They’re free to try, easy to use, and signal new directions for useful AI.

Example:

Career Dreamer

A playful way to explore career possibilities with AI

10 Extreme Challenges Facing Schools — from stefanbauschard.substack.com by Stefan Bauschard

TL;DR

Schools face 10 extreme challenges

– assessment

– funding

– deportations

– opposition to trans students

– mental health

– AI/AGI world

– a struggle to engage students

– too many hats

– war risks

– resource redistribution and civil conflict

Hundreds of thousands of students are entitled to training and help finding jobs. They don’t get it — from hechingerreport.org by Meredith Kolodner

The best program to help students with disabilities get jobs is so hidden, ‘It’s like a secret society’

There’s a half-billion-dollar federal program that is supposed to help students with disabilities get into the workforce when they leave high school, but most parents — and even some school officials — don’t know it exists. As a result, hundreds of thousands of students who could be getting help go without it. New Jersey had the nation’s lowest proportion — roughly 2 percent — of eligible students receiving these services in 2023.

More than a decade ago, Congress recognized the need to help young people with disabilities get jobs, and earmarked funding for pre-employment transition services to help students explore and train for careers and send them on a pathway to independence after high school. Yet, today, fewer than 40 percent of people with disabilities ages 16 to 64 are employed, even though experts say most are capable of working.

The article links to:

Pre-Employment Transition Services

Both vocational rehabilitation agencies and schools are required by law to provide certain transition services and supports to improve post-school outcomes of students with disabilities.

The Workforce Innovation and Opportunity Act (WIOA) amends the Rehabilitation Act of 1973 and requires vocational rehabilitation (VR) agencies to set aside at least 15% of their federal funds to provide pre-employment transition services (Pre-ETS) to students with disabilities who are eligible or potentially eligible for VR services. The intent of pre-employment transition services is to:

– improve the transition of students with disabilities from school to postsecondary education or to an employment outcome,

– increase opportunities for students with disabilities to practice and improve workplace readiness skills, through work-based learning experiences in a competitive, integrated work setting, and

– increase opportunities for students with disabilities to explore post-secondary training options, leading to more industry recognized credentials, and meaningful post-secondary employment.

Repurposing Furniture to Support Learning in the Early Grades — from edutopia.org by Kendall Stallings

A kindergarten teacher gives five practical examples of repurposing classroom furniture to serve multiple uses.

I’m a supporter of evolving classroom spaces—of redesigning the layout of the room as the needs and interests of students develop. The easiest way to accommodate students, I’ve found, is to use the furniture itself to set up a functional, spacious, and logical classroom. There are several benefits to this approach. It provides children with safe, low-stakes environmental changes; fosters flexibility; and creates opportunities for spatial adaptation and problem-solving. Additionally, rearranging the room throughout the school year enables teachers to address potential catalysts for challenging behaviors and social conflicts that may arise, while sparking curiosity in an otherwise-familiar space.

How Teacher-Generated Videos Support Students in Science — from edutopia.org by Shawn Sutton

A five-minute video can help students get a refresher on important science concepts at their own pace.

While instructional videos were prepared out of necessity in the past, I’ve rediscovered their utility as a quick, flexible, and personal way to enhance classroom teaching. Instructional videos can be created through free screencasting software such as Screencastify, ScreenRec, Loom, or OBS Studio. Screencasting would be ideal for the direct presentation of information, like a slide deck of notes. Phone cameras make practical recording devices for live content beyond the computer screen, like a science demo. A teacher could invest in a phone stand and ring light at a nominal cost to assist in this type of recording.

However, not all teacher videos are created equal, and I’ve discovered four benefits and strategies that help make these teaching tools more effective.

NVIDIA’s Apple moment?! — from theneurondaily.com by Noah Edelman and Grant Harvey

PLUS: How to level up your AI workflows for 2025…

NVIDIA wants to put an AI supercomputer on your desk (and it only costs $3,000).

…

And last night at CES 2025, Jensen Huang announced phase two of this plan: Project DIGITS, a $3K personal AI supercomputer that runs 200B parameter models from your desk. Guess we now know why Apple recently developed an NVIDIA allergy…
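A rough back-of-the-envelope check on the “200B parameter models from your desk” claim: the memory needed just to hold a model’s weights scales with the number of bytes stored per parameter. The sketch below is illustrative only. The bit widths are assumptions (the item above does not say what precision Project DIGITS uses), and the estimate ignores activations, KV cache, and runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in decimal GB (1 GB = 1e9 bytes)."""
    bytes_total = num_params * bits_per_param / 8  # 8 bits per byte
    return bytes_total / 1e9


PARAMS = 200e9  # the "200B parameter" figure quoted above

# Assumed precisions for illustration: 16-bit, 8-bit, and 4-bit weights.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

Under these assumptions, the weights alone come to roughly 400 GB at 16 bits, 200 GB at 8 bits, and 100 GB at 4 bits, which suggests why aggressive quantization would matter for running a model of that size on a single desktop machine.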

…

But NVIDIA doesn’t just want its “Apple PC moment”… it also wants its OpenAI moment. NVIDIA also announced Cosmos, a platform for building physical AI (think: robots and self-driving cars)—which Jensen Huang calls “the ChatGPT moment for robotics.”

Jensen Huang’s latest CES speech: AI Agents are expected to become the next robotics industry, with a scale reaching trillions of dollars — from chaincatcher.com

NVIDIA is bringing AI from the cloud to personal devices and enterprises, covering all computing needs from developers to ordinary users.

At CES 2025, which opened this morning, NVIDIA founder and CEO Jensen Huang delivered a milestone keynote speech, revealing the future of AI and computing. From the core token concept of generative AI to the launch of the new Blackwell architecture GPU, and the AI-driven digital future, this speech will profoundly impact the entire industry from a cross-disciplinary perspective.

Also see:

NVIDIA Project DIGITS: The World’s Smallest AI Supercomputer. — from nvidia.com

A Grace Blackwell AI Supercomputer on your desk.

From DSC:

I’m posting this next item (involving Samsung) as it relates to how TVs continue to change within our living rooms. AI is finding its way into our TVs…the ramifications of this remain to be seen.

OpenAI ‘now knows how to build AGI’ — from therundown.ai by Rowan Cheung

PLUS: AI phishing achieves alarming success rates

The Rundown: Samsung revealed its new “AI for All” tagline at CES 2025, introducing a comprehensive suite of new AI features and products across its entire ecosystem — including new AI-powered TVs, appliances, PCs, and more.

The details:

Why it matters: Samsung’s web of products are getting the AI treatment — and we’re about to be surrounded by AI-infused appliances in every aspect of our lives. The edge will be the ability to sync it all together under one central hub, which could position Samsung as the go-to for the inevitable transition from smart to AI-powered homes.

***

“Samsung sees TVs not as one-directional devices for passive consumption but as interactive, intelligent partners that adapt to your needs,” said SW Yong, President and Head of Visual Display Business at Samsung Electronics. “With Samsung Vision AI, we’re reimagining what screens can do, connecting entertainment, personalization, and lifestyle solutions into one seamless experience to simplify your life.” — from Samsung

Understanding And Preparing For The 7 Levels Of AI Agents — from forbes.com by Douglas B. Laney

The following framework I offer for defining, understanding, and preparing for agentic AI blends foundational work in computer science with insights from cognitive psychology and speculative philosophy. Each of the seven levels represents a step-change in technology, capability, and autonomy. The framework expresses increasing opportunities to innovate, thrive, and transform in a data-fueled and AI-driven digital economy.

The Rise of AI Agents and Data-Driven Decisions — from devprojournal.com by Mike Monocello

Fueled by generative AI and machine learning advancements, we’re witnessing a paradigm shift in how businesses operate and make decisions.

AI Agents Enhance Generative AI’s Impact

Burley Kawasaki, Global VP of Product Marketing and Strategy at Creatio, predicts a significant leap forward in generative AI. “In 2025, AI agents will take generative AI to the next level by moving beyond content creation to active participation in daily business operations,” he says. “These agents, capable of partial or full autonomy, will handle tasks like scheduling, lead qualification, and customer follow-ups, seamlessly integrating into workflows. Rather than replacing generative AI, they will enhance its utility by transforming insights into immediate, actionable outcomes.”

Here’s what nobody is telling you about AI agents in 2025 — from aidisruptor.ai by Alex McFarland

What’s really coming (and how to prepare).

Everyone’s talking about the potential of AI agents in 2025 (and don’t get me wrong, it’s really significant), but there’s a crucial detail that keeps getting overlooked: the gap between current capabilities and practical reliability.

Here’s the reality check that most predictions miss: AI agents currently operate at about 80% accuracy (according to Microsoft’s AI CEO). Sounds impressive, right? But here’s the thing – for businesses and users to actually trust these systems with meaningful tasks, we need 99% reliability. That’s not just a 19-percentage-point gap – it’s the difference between an interesting tech demo and a business-critical tool.

This matters because it completely changes how we should think about AI agents in 2025. While major players like Microsoft, Google, and Amazon are pouring billions into development, they’re all facing the same fundamental challenge – making them work reliably enough that you can actually trust them with your business processes.

Think about it this way: Would you trust an assistant who gets things wrong 20% of the time? Probably not. But would you trust one who makes a mistake only 1% of the time, especially if they could handle repetitive tasks across your entire workflow? That’s a completely different conversation.
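The arithmetic behind this argument is easy to miss: moving from 80% to 99% accuracy is only 19 percentage points, but it cuts the error rate from 20% to 1%. Here is a minimal Python sketch of that comparison. It assumes each task succeeds or fails independently (a simplification, not something the article claims), and the `expected_errors` helper is hypothetical, written just for this illustration.

```python
def expected_errors(accuracy_pct: int, tasks: int) -> float:
    """Expected number of failed tasks, assuming (hypothetically) that each
    task independently succeeds with probability accuracy_pct / 100."""
    return tasks * (100 - accuracy_pct) / 100


tasks = 1000
today, target = 80, 99  # accuracy figures quoted in the article, in percent

gap_points = target - today                   # difference in percentage points
error_ratio = (100 - today) / (100 - target)  # how many times fewer errors

print(f"{today}% accurate: ~{expected_errors(today, tasks):.0f} errors per {tasks} tasks")
print(f"{target}% accurate: ~{expected_errors(target, tasks):.0f} errors per {tasks} tasks")
print(f"Gap: {gap_points} percentage points, but {error_ratio:.0f}x fewer errors")
```

Running it shows roughly 200 versus 10 errors per 1,000 tasks: a 19-point gain in accuracy translates into about twenty times fewer mistakes, which is why the jump matters so much for business-critical work.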

Why 2025 will be the year of AI orchestration — from venturebeat.com by Emilia David

In the tech world, we like to label periods as the year of (insert milestone here). This past year (2024) was a year of broader experimentation in AI and, of course, agentic use cases.

As 2025 opens, VentureBeat spoke to industry analysts and IT decision-makers to see what the year might bring. For many, 2025 will be the year of agents, when all the pilot programs, experiments and new AI use cases converge into something resembling a return on investment.

In addition, the experts VentureBeat spoke to see 2025 as the year AI orchestration will play a bigger role in the enterprise. Organizations plan to make management of AI applications and agents much more straightforward.

Here are some themes we expect to see more in 2025.

Predictions For AI In 2025: Entrepreneurs Look Ahead — from forbes.com by Jodie Cook

AI agents take charge

Jérémy Grandillon, CEO of TC9 – AI Allbound Agency, said, “Today, AI can do a lot, but we don’t trust it to take actions on our behalf. This will change in 2025. Be ready to ask your AI assistant to book an Uber ride for you.” Start small with one agent handling one task. Build up to an army.

“If 2024 was agents everywhere, then 2025 will be about bringing those agents together in networks and systems,” said Nicholas Holland, vice president of AI at HubSpot. “Micro agents working together to accomplish larger bodies of work, and marketplaces where humans can ‘hire’ agents to work alongside them in hybrid teams. Before long, we’ll be saying, ‘there’s an agent for that.’”

…

Voice becomes default

Stop typing and start talking. Adam Biddlecombe, head of brand at Mindstream, predicts a shift in how we interact with AI. “2025 will be the year that people start talking with AI,” he said. “The majority of people interact with ChatGPT and other tools in the text format, and a lot of emphasis is put on prompting skills.”

Biddlecombe believes, “With Apple’s ChatGPT integration for Siri, millions of people will start talking to ChatGPT. This will make AI so much more accessible and people will start to use it for very simple queries.”

Get ready for the next wave of advancements in AI. AGI arrives early, AI agents take charge, and voice becomes the norm. Video creation gets easy, AI embeds everywhere, and one-person billion-dollar companies emerge.

These 4 graphs show where AI is already impacting jobs — from fastcompany.com by Brandon Tucker

With a 200% increase in two years, the data paints a vivid picture of how AI technology is reshaping the workforce.

To better understand the types of roles that AI is impacting, ZoomInfo’s research team looked to its proprietary database of professional contacts for answers. The platform, which detects more than 1.5 million personnel changes per day, revealed a dramatic increase in AI-related job titles since 2022.

Why does this shift in AI titles matter for every industry?

How AI Is Changing Education: The Year’s Top 5 Stories — from edweek.org by Alyson Klein

Ever since a revolutionary new version of ChatGPT became available in late 2022, educators have faced several complex challenges as they learn how to navigate artificial intelligence systems.

…

Education Week produced a significant amount of coverage in 2024 exploring these and other critical questions involving the understanding and use of AI.

Here are the five most popular stories that Education Week published in 2024 about AI in schools.

What’s next with AI in higher education? — from msn.com by Science X Staff

Dr. Lodge said there are five key areas the higher education sector needs to address to adapt to the use of AI:

1. Teach ‘people’ skills as well as tech skills

2. Help all students use new tech

3. Prepare students for the jobs of the future

4. Learn to make sense of complex information

5. Universities to lead the tech change

5 Ways Teachers Can Use NotebookLM Today — from classtechtips.com by Dr. Monica Burns

This is wild.

Hume AI just announced a new AI voice model.

It’s like ChatGPT Advanced Voice Mode, Elevenlabs Voice Design, and Google NotebookLM in one.

It can create a voice with a whole personality from a description or a 5-second clip. And more.

8 wild examples: pic.twitter.com/XOA779jSiE

— Min Choi (@minchoi) December 23, 2024

AI in 2024: Insights From our 5 Million Readers — from linkedin.com by Generative AI

Checking the Pulse: The Impact of AI on Everyday Lives

So, what exactly did our users have to say about how AI transformed their lives this year?


Top 2024 Developments in AI

Getting ready for 2025: your AI team members (Gift lesson 3/3) — from flexos.com by Daan van Rossum

And that’s why today, I’ll tell you exactly which AI tools I’ve recommended for the top 5 use cases to almost 200 business leaders who took the Lead with AI course.

1. Email Management: Simplifying Communication with AI

2. Meeting Management: Maximize Your Time

3. Research: Streamlining Information Gathering

…plus several more use cases and tools that Daan covers.

Introducing the 2025 Wonder Media Calendar for tweens, teens, and their families/households. Designed by Sue Ellen Christian and her students in her Global Media Literacy class (in the fall 2024 semester at Western Michigan University), the calendar aims to help people create a new year filled with skills and smart decisions about their media use. The calendar is part of the ongoing WonderMediaLibrary.com project, which includes videos, lesson plans, games, songs, and more. The website is funded by a generous grant from the Institute of Museum and Library Services, in partnership with Western Michigan University and the Library of Michigan.

1-800-CHAT-GPT—12 Days of OpenAI: Day 10

Per The Rundown: OpenAI just launched a surprising new way to access ChatGPT, an old-school 1-800 number, and also rolled out a new WhatsApp integration for global users during Day 10 of the company’s livestream event.

How Agentic AI is Revolutionizing Customer Service — from customerthink.com by Devashish Mamgain

Agentic AI represents a significant evolution in artificial intelligence, offering enhanced autonomy and decision-making capabilities beyond traditional AI systems. Unlike conventional AI, which requires human instructions, agentic AI can independently perform complex tasks, adapt to changing environments, and pursue goals with minimal human intervention.

This makes it a powerful tool across various industries, especially in customer service. To understand it better, let’s compare AI agents with non-AI agents.

…

Characteristics of Agentic AI

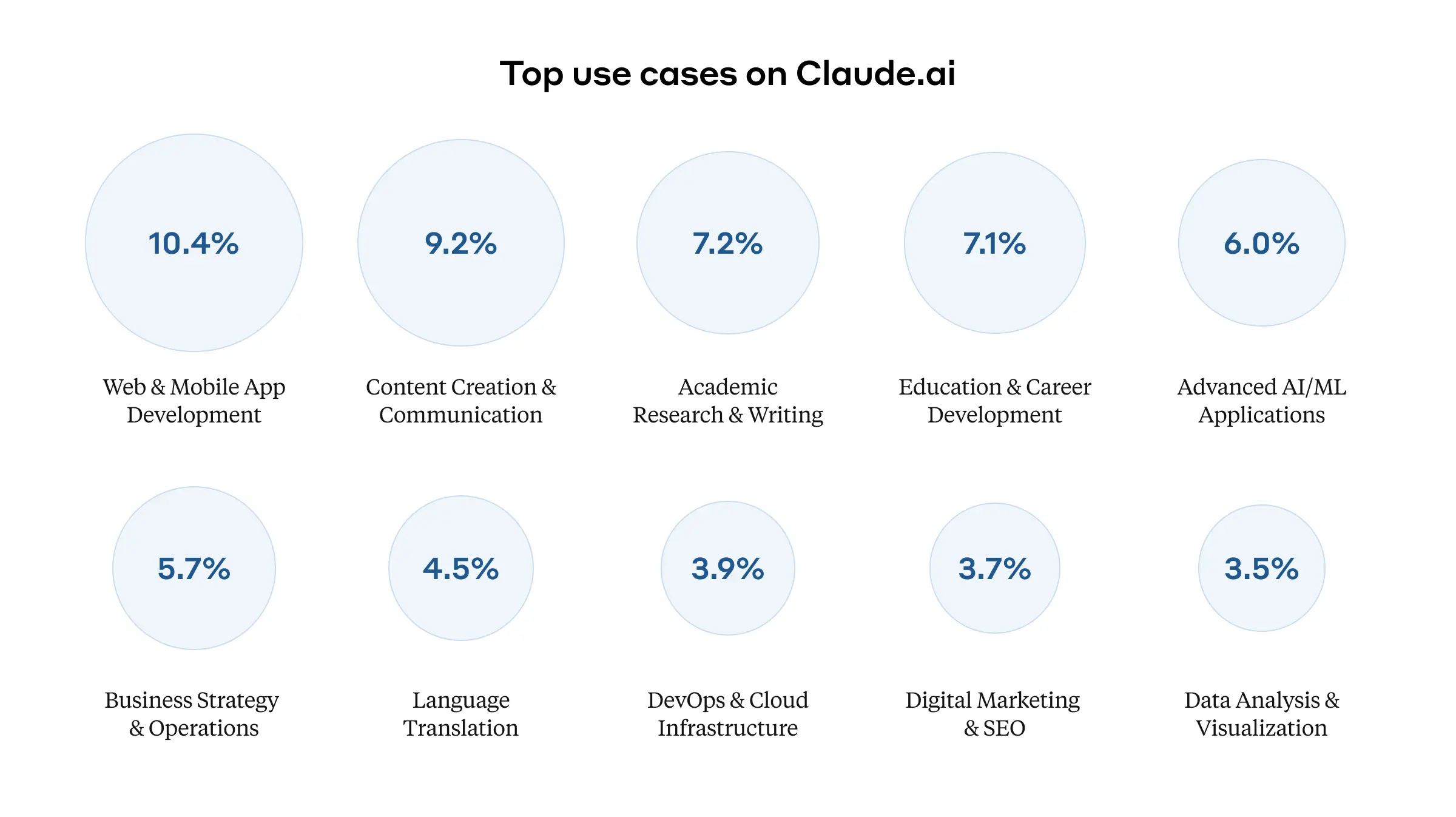

Clio: A system for privacy-preserving insights into real-world AI use — from anthropic.com

How, then, can we research and observe how our systems are used while rigorously maintaining user privacy?

Claude insights and observations, or “Clio,” is our attempt to answer this question. Clio is an automated analysis tool that enables privacy-preserving analysis of real-world language model use. It gives us insights into the day-to-day uses of claude.ai in a way that’s analogous to tools like Google Trends. It’s also already helping us improve our safety measures. In this post—which accompanies a full research paper—we describe Clio and some of its initial results.

Evolving tools redefine AI video — from heatherbcooper.substack.com by Heather Cooper

Google’s Veo 2, Kling 1.6, Pika 2.0 & more

AI video continues to surpass expectations

The AI video generation space has evolved dramatically in recent weeks, with several major players introducing groundbreaking tools.

Here’s a comprehensive look at the current landscape:

There are several other video models and platforms, including …