Video, Images and Sounds – Good Tools #14 — from goodtools.substack.com by Robin Good

Specifically in this issue:

- Free Image Libraries

- Image Search Engines

- Free Illustrations

- Free Icons

- Free Stock Video Footage

- Free Music for Video and Podcasts

AI University for UK? — from donaldclarkplanb.blogspot.com by Donald Clark

Tertiary Education in the UK needs a fresh idea. What we need is an initiative on the same scale as The Open University, kicked off over 50 years ago.

…

It is clear that an educational vision is needed and I think the best starting point is that outlined and executed by Paul LeBlanc at SNHU. It is substantial, well articulated and has worked in what has become the largest University in the US.

It would be based on the competence model, with a focus on skills shortages. Here’s a starter with 25 ideas, a manifesto of sorts, based on lessons learnt from other successful models:

Learners’ Edition: AI-powered Coaching, Professional Certifications + Inspiring conversations about mastering your learning & speaking skills — from linkedin.com by Tomer Cohen

Excerpts:

1. Your own AI-powered coaching

Learners can go into LinkedIn Learning and ask a question or explain a challenge they are currently facing at work (we’re focusing on areas within Leadership and Management to start). AI-powered coaching will pull from the collective knowledge of our expansive LinkedIn Learning library and, instantaneously, offer advice, examples, or feedback that is personalized to the learner’s skills, job, and career goals.

What makes us so excited about this launch is we can now take everything we as LinkedIn know about people’s careers and how they navigate them and help accelerate them with AI.

…

3. Learn exactly what you need to know for your next job

When looking for a new job, it’s often the time we think about refreshing our LinkedIn profiles. It’s also a time we can refresh our skills. And with skill sets for jobs having changed by 25% since 2015 – with the number expected to increase by 65% by 2030 – keeping our skills a step ahead is one of the most important things we can do to stand out.

There are a couple of ways we’re making it easier to learn exactly what you need to know for your next job:

When you set a job alert, in addition to being notified about open jobs, we’ll recommend learning courses and Professional Certificate offerings to help you build the skills needed for that role.

When you view a job, we recommend specific courses to help you build the required skills. If you have LinkedIn Learning access through your company or as part of a Premium subscription, you can follow the skills for the job, that way we can let you know when we launch new courses for those skills and recommend you content on LinkedIn that better aligns to your career goals.
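LinkedIn doesn't say how its course matching works. The following is only a minimal sketch of the general skill-gap idea, assuming a job's required skills, a learner's current skills, and a course catalog are all available as plain sets of skill names. The function `recommend_courses` and the sample catalog are hypothetical.

```python
from typing import Dict, List, Set


def recommend_courses(
    job_skills: Set[str],
    learner_skills: Set[str],
    catalog: Dict[str, Set[str]],
) -> List[str]:
    """Recommend courses covering skills the job requires but the learner lacks.

    `catalog` maps a (hypothetical) course title to the set of skills it teaches.
    Courses are ranked by how many missing skills they cover, most first;
    ties are broken alphabetically so the output is deterministic.
    """
    missing = job_skills - learner_skills
    scored = []
    for title, skills in catalog.items():
        covered = len(skills & missing)
        if covered > 0:
            # Negate the count so an ascending sort puts the best matches first.
            scored.append((-covered, title))
    return [title for _, title in sorted(scored)]


catalog = {
    "Coaching Fundamentals": {"coaching", "feedback"},
    "Budgeting Basics": {"budgeting"},
    "Intro to SQL": {"sql"},
}
print(recommend_courses(
    job_skills={"coaching", "feedback", "budgeting"},
    learner_skills={"sql"},
    catalog=catalog,
))
```

Here the learner is missing three of the job's skills, so the course covering two of them ranks ahead of the one covering a single skill, and the SQL course, which closes no gap, is left out.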

2024 Edtech Predictions from Edtech Insiders — from edtechinsiders.substack.com by Alex Sarlin, Ben Kornell, and Sarah Morin

Omni-modal AI, edtech funding prospects, higher ed wake up calls, focus on career training, and more!

Alex: I talked to the 360 Learning folks at one point and they had this really interesting epiphany, which is basically that it’s been almost impossible for every individual company in the past to create a hierarchy of skills and a hierarchy of positions and actually organize what it looks like for people to move around and upskill within the company and get to new paths.

Until now. AI actually can do this very well. It can take not only job description data, but it can take actual performance data. It can actually look at what people do on a daily basis and back fit that to training, create automatic training based on it.

From DSC:

I appreciated how they addressed K-12, higher ed, and the workforce all in one posting. Nice work. We don’t need siloes. We need more overall design thinking re: our learning ecosystems — as well as more collaborations. We need more on-ramps and pathways in a person’s learning/career journey.

The biggest things that happened in AI this year — from superhuman.ai by Zain Kahn

January:

February:

March:

…and more

AI 2023: A Year in Review — from stefanbauschard.substack.com by Stefan Bauschard

2023 developments in AI and a hint of what they are building toward

Some of the items Stefan covers in his posting include:

The Dictionary.com Word of the Year is “hallucinate.” — from content.dictionary.com by Nick Norlen and Grant Barrett; via The Rundown AI

hallucinate

[ huh-loo-suh-neyt ]

verb

(of artificial intelligence) to produce false information contrary to the intent of the user and present it as if true and factual. Example: When chatbots hallucinate, the result is often not just inaccurate but completely fabricated.

Soon, every employee will be both AI builder and AI consumer — from zdnet.com by Joe McKendrick, via Robert Gibson on LinkedIn

“Standardized tools and platforms as well as advanced low- or no-code tech may enable all employees to become low-level engineers,” suggests a recent report.

The time could be ripe for a blurring of the lines between developers and end-users, a recent report out of Deloitte suggests. It makes more business sense to focus on bringing in citizen developers for ground-level programming, versus seeking superstar software engineers, the report’s authors argue, or — as they put it — “instead of transforming from a 1x to a 10x engineer, employees outside the tech division could be going from zero to one.”

Along these lines, see:

UK Supreme Court rules AI is not an inventor — from theverge.com by Emilia David

The ruling follows a similar decision denying patent registrations naming AI as creators.

The UK Supreme Court ruled that AI cannot get patents, declaring it cannot be named as an inventor of new products because the law considers only humans or companies to be creators.

The Times Sues OpenAI and Microsoft Over A.I. Use of Copyrighted Work — from nytimes.com by Michael M. Grynbaum and Ryan Mac

The New York Times sued OpenAI and Microsoft for copyright infringement on Wednesday, opening a new front in the increasingly intense legal battle over the unauthorized use of published work to train artificial intelligence technologies.

…

The suit does not include an exact monetary demand. But it says the defendants should be held responsible for “billions of dollars in statutory and actual damages” related to the “unlawful copying and use of The Times’s uniquely valuable works.” It also calls for the companies to destroy any chatbot models and training data that use copyrighted material from The Times.

On this same topic, also see:

The historic NYT v. @OpenAI lawsuit filed this morning, as broken down by me, an IP and AI lawyer, general counsel, and longtime tech person and enthusiast.

Tl;dr – It’s the best case yet alleging that generative AI is copyright infringement. Thread. pic.twitter.com/Zqbv3ekLWt

— Cecilia Ziniti (@CeciliaZin) December 27, 2023

Apple’s iPhone Design Chief Enlisted by Jony Ive, Sam Altman to Work on AI Devices — from bloomberg.com by Mark Gurman (behind paywall)

AI 2023: Chatbots Spark New Tools — from heatherbcooper.substack.com by Heather Cooper

ChatGPT and Other Chatbots

The arrival of ChatGPT sparked tons of new AI tools and changed the way we thought about using a chatbot in our daily lives.

Chatbots like ChatGPT, Perplexity, Claude, and Bing Chat can help content creators by quickly generating ideas, outlines, drafts, and full pieces of content, allowing creators to produce more high-quality content in less time.

These AI tools boost efficiency and creativity in content production across formats like blog posts, social captions, newsletters, and more.

Microsoft’s next Surface laptops will reportedly be its first true ‘AI PCs’ — from theverge.com by Emma Roth

Next year’s Surface Laptop 6 and Surface Pro 10 will feature Arm and Intel options, according to Windows Central.

Microsoft is getting ready to upgrade its Surface lineup with new AI-enabled features, according to a report from Windows Central. Unnamed sources told the outlet the upcoming Surface Pro 10 and Surface Laptop 6 will come with a next-gen neural processing unit (NPU), along with Intel and Arm-based options.

How one of the world’s oldest newspapers is using AI to reinvent journalism — from theguardian.com by Alexandra Topping

Berrow’s Worcester Journal is one of several papers owned by the UK’s second biggest regional news publisher to hire ‘AI-assisted’ reporters

With the AI-assisted reporter churning out bread and butter content, other reporters in the newsroom are freed up to go to court, meet a councillor for a coffee or attend a village fete, says the Worcester News editor, Stephanie Preece.

“AI can’t be at the scene of a crash, in court, in a council meeting, it can’t visit a grieving family or look somebody in the eye and tell that they’re lying. All it does is free up the reporters to do more of that,” she says. “Instead of shying away from it, or being scared of it, we are saying AI is here to stay – so how can we harness it?”

This year, I watched AI change the world in real time.

From what happened, I have no doubts that the coming years will be the most transformative period in the history of humankind.

Here’s the full timeline of AI in 2023 (January-December):

January 15: ChatGPT becomes the… pic.twitter.com/przosHYiLQ

— Rowan Cheung (@rowancheung) December 29, 2023

What to Expect in AI in 2024 — from hai.stanford.edu

Seven Stanford HAI faculty and fellows predict the biggest stories for next year in artificial intelligence.

Topics include:

Addendum on 1/2/24:

Tips on making professional-looking, engaging videos for online courses — from timeshighereducation.com by Geoff Fortescue

Making videos for online classes doesn’t have to be costly. Here are ways to make them look professional on a budget

During lockdown, we were forced to start producing videos for Moocs remotely. This was quite successful, and we continue to use these techniques whenever a contributor can’t come to the studio. The same principles can be used by anyone who doesn’t have access to a media production team. Here are our tips on producing educational videos on a budget.

Google NotebookLM (experiment)

From DSC:

Google hopes that this personalized AI/app will help people with their note-taking, thinking, brainstorming, learning, and creating.

It reminds me of what Derek Bruff was just saying about Top Hat’s Ace product: it can work with a much narrower set of information (i.e., a single course) and act almost like a personal learning assistant for the course you are taking. (As Derek mentions, this depends upon how extensively one uses the CMS/LMS in the first place.)

More Chief Online Learning Officers Step Up to Senior Leadership Roles

In 2024, I think we will see more Chief Online Learning Officers (COLOs) take on more significant roles and projects at institutions. In recent years, we have seen many COLOs accept provost positions. The typical provost career path that runs up through the faculty ranks does not adequately prepare leaders for the digital transformation occurring in postsecondary education.

As we’ve seen with the professionalization of the COLO role, in general, these same leaders proved to be incredibly valuable during the pandemic due to their unique skills: part academic, part entrepreneur, part technologist, COLOs are unique in higher education. They sit at the epicenter of teaching, learning, technology, and sustainability. As institutions are evolving, look for more online and professional continuing education leaders to take on more senior roles on campuses.

Julie Uranis, Senior Vice President, Online and Strategic Initiatives, UPCEA

Expanding Bard’s understanding of YouTube videos — via AI Valley

Reshaping the tree: rebuilding organizations for AI — from oneusefulthing.org by Ethan Mollick

Technological change brings organizational change.

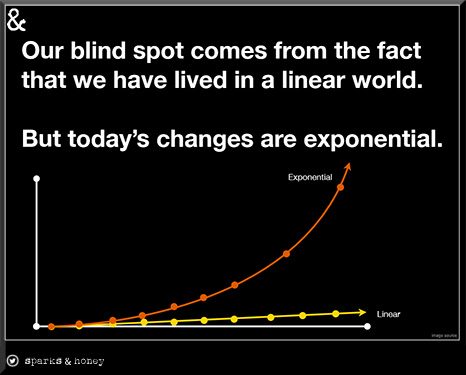

I am not sure who said it first, but there are only two ways to react to exponential change: too early or too late. Today’s AIs are flawed and limited in many ways. While that restricts what AI can do, the capabilities of AI are increasing exponentially, both in terms of the models themselves and the tools these models can use. It might seem too early to consider changing an organization to accommodate AI, but I think that there is a strong possibility that it will quickly become too late.

From DSC:

Readers of this blog have seen the following graphic for several years now, but there is no question that we are in a time of exponential change. One would have had an increasingly hard time arguing the opposite of this perspective during that time.

Nvidia’s revenue triples as AI chip boom continues — from cnbc.com by Jordan Novet; via GSV

KEY POINTS

Here’s how the company did, compared to the consensus among analysts surveyed by LSEG, formerly known as Refinitiv:

Nvidia’s revenue grew 206% year over year during the quarter ending Oct. 29, according to a statement. Net income, at $9.24 billion, or $3.71 per share, was up from $680 million, or 27 cents per share, in the same quarter a year ago.
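A quick check of the arithmetic behind the "triples" headline, using only the figures quoted above: growth of 206% means the new quarter's revenue is the year-ago revenue plus 2.06 times itself, and both net income and earnings per share grew by a factor of roughly 13 to 14.

```latex
\begin{align*}
R_{\text{now}} &= R_{\text{year ago}}\,(1 + 2.06) = 3.06\,R_{\text{year ago}} \\
\frac{\text{Net income}_{\text{now}}}{\text{Net income}_{\text{year ago}}}
  &= \frac{\$9.24\ \text{billion}}{\$0.68\ \text{billion}} \approx 13.6 \\
\frac{\text{EPS}_{\text{now}}}{\text{EPS}_{\text{year ago}}}
  &= \frac{\$3.71}{\$0.27} \approx 13.7
\end{align*}
```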

DC: Anyone surprised? This is why the U.S. doesn’t want high-powered chips going to China. History repeats itself…again. The ways of the world/power continue on.

Pentagon’s AI initiatives accelerate hard decisions on lethal autonomous weapons https://t.co/PTDmJugiE2

— Daniel Christian (he/him/his) (@dchristian5) November 27, 2023

From DSC:

As I’ve long stated on the Learning from the Living [Class]Room vision, we are heading toward a new AI-empowered learning platform — where humans play a critically important role in making this new learning ecosystem work.

Along these lines, I ran into this site out on X/Twitter. We’ll see how this unfolds, but it will be an interesting space to watch.

From DSC:

This future learning platform will also focus on developing skills and competencies. Along those lines, see:

Scale for Skills-First — from the-job.beehiiv.com by Paul Fain

An ed-tech giant’s ambitious moves into digital credentialing and learner records.

A Digital Canvas for Skills

Instructure was a player in the skills and credentials space before its recent acquisition of Parchment, a digital transcript company. But that $800M move made many observers wonder if Instructure can develop digital records of skills that learners, colleges, and employers might actually use broadly.

…

Ultimately, he says, the CLR approach will allow students to bring these various learning types into a coherent format for employers.

Instructure seeks a leadership role in working with other organizations to establish common standards for credentials and learner records, to help create consistency. The company collaborates closely with 1EdTech. And last month it helped launch the 1EdTech TrustEd Microcredential Coalition, which aims to increase quality and trust in digital credentials.

Paul also links to 1EdTech’s page regarding the Comprehensive Learning Record.

What happens to teaching after Covid? — from chronicle.com by Beth McMurtrie

It’s an era many instructors would like to put behind them: black boxes on Zoom screens, muffled discussions behind masks, students struggling to stay engaged. But how much more challenging would teaching during the pandemic have been if colleges did not have experts on staff to help with the transition? On many campuses, teaching-center directors, instructional designers, educational technologists, and others worked alongside professors to explore learning-management systems, master video technology, and rethink what and how they teach.

A new book out this month, Higher Education Beyond Covid: New Teaching Paradigms and Promise, explores this period through the stories of campus teaching and learning centers. Their experiences reflect successes and failures, and what higher education could learn as it plans for the future.

Beth also mentioned/linked to:

How to hold difficult discussions online — from chronicle.com by Beckie Supiano

As usual, our readers were full of suggestions. Kathryn Schild, the lead instructional designer in faculty development and instructional support at the University of Alaska at Anchorage, shared a guide she’s compiled on holding asynchronous discussions, which includes a section on difficult topics.

In an email, Schild also pulled out a few ideas she thought were particularly relevant to Le’s question, including:

What does the future of education LOOK like? — from stefanbauschard.substack.com by Stefan Bauschard

Diverse students receive instruction from robots in an online classroom that mimics the current structure of education.

The Misunderstanding About Education That Cost Mark Zuckerberg $100 Million — from danmeyer.substack.com by Dan Meyer

Personalized learning can feel isolating. Whole class learning can feel personal. This is hard to understand.

Excerpt (emphasis DSC):

Last week, Matt Barnum reported in Chalkbeat that the Chan Zuckerberg Initiative is laying off dozens of staff members and pivoting away from the personalized learning platform they have funded since 2015 with somewhere near $100M.

…

I have tried to illustrate as often as my subscribers will tolerate that students don’t particularly enjoy learning alone with laptops within social spaces like classrooms. That learning fails to answer their questions about their social identity. It contributes to their feelings of alienation and disbelonging. I find this case easy to make but hard to prove. Maybe we just haven’t done personalized learning right? Maybe Summit just needed to include generative AI chatbots in their platform?

What is far easier to prove, or rather to disprove, is the idea that “whole class instruction must feel impersonal to students,” that “whole class instruction must necessarily fail to meet the needs of individual students.”

From DSC:

I appreciate Dan’s comments here (as highlighted above), as they inform my thinking about the Learning from the Living [Class]Room vision. They seem to be echoed here by Jeppe Klitgaard Stricker when he says:

Personalized learning paths can be great, but they also entail a potential abolishment or unintended dissolution of learning communities and belonging.

Perhaps this powerful, global, Artificial Intelligence (AI)-backed, next-generation, lifelong learning platform of the future will be more focused on postsecondary students and experiences, and less on the K-12 learning ecosystem.

But the school systems I’ve seen here in Michigan (USA) address only the majority of the class. These one-size-fits-all systems don’t work for many students who need extra help and/or who are gifted students. The trains move fast. Good luck if you can’t keep up with the pace.

But if K-12’ers are involved in a future learning platform, the platform needs to address what Dan’s saying. It must address students’ questions about their social identity and not contribute to their feelings of alienation and disbelonging. It needs to support communities of practice and learning communities.

As AI Chatbots Rise, More Educators Look to Oral Exams — With High-Tech Twist — from edsurge.com by Jeffrey R. Young

To use Sherpa, an instructor first uploads the reading they’ve assigned, or they can have the student upload a paper they’ve written. Then the tool asks a series of questions about the text (either questions input by the instructor or generated by the AI) to test the student’s grasp of key concepts. The software gives the instructor the choice of whether they want the tool to record audio and video of the conversation, or just audio.

The tool then uses AI to transcribe the audio from each student’s recording and flags areas where the student answer seemed off point. Teachers can review the recording or transcript of the conversation and look at what Sherpa flagged as trouble to evaluate the student’s response.
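The article doesn't explain how Sherpa decides an answer is off point, and Sherpa uses AI for this. The following is only a much cruder stand-in to make the flagging step concrete: it assumes answers are already transcribed and that each question has a hypothetical set of key terms, then flags any answer that mentions too few of them. The names `flag_off_point`, `key_terms`, and `min_overlap` are illustrative, not Sherpa's.

```python
import re
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word tokens; a deliberately crude stand-in for real language analysis."""
    return set(re.findall(r"[a-z']+", text.lower()))


def flag_off_point(
    answers: Dict[str, str],
    key_terms: Dict[str, Set[str]],
    min_overlap: int = 2,
) -> List[str]:
    """Return IDs of questions whose transcribed answer mentions fewer than
    `min_overlap` of that question's (hypothetical, lowercase) key terms."""
    flagged = []
    for question_id, answer in answers.items():
        overlap = tokenize(answer) & key_terms.get(question_id, set())
        if len(overlap) < min_overlap:
            flagged.append(question_id)
    return flagged


key_terms = {
    "q1": {"mitochondria", "energy", "atp"},
    "q2": {"membrane", "barrier", "selective"},
}
answers = {
    "q1": "The mitochondria make energy as ATP.",
    "q2": "I think it was about plants.",
}
print(flag_off_point(answers, key_terms))
```

As in the workflow described above, a flag is only a prompt for the instructor to review the recording or transcript, not a grade.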

ChatGPT breaks down this diagram of a human cell for a 9th grader.

This is the future of education. pic.twitter.com/L0Za0ZB5rs

— Mckay Wrigley (@mckaywrigley) September 28, 2023

The first GPT-4V-powered frontend engineer agent.

Just upload a picture of a design, and the agent autonomously codes it up, looks at a render for mistakes, improves the code accordingly, repeat.

Utterly insane. pic.twitter.com/qN75vwkbDZ

— Matt Shumer (@mattshumer_) September 29, 2023
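The tweet describes a generate, render, critique, repeat loop. The agent's internals aren't public here, so this is only a minimal sketch of that control flow, assuming the model call and the render check are supplied as functions; `refine_loop`, `generate`, and `critique` are hypothetical names, and the fakes below stand in for a real model and browser render.

```python
from typing import Callable, Optional, Tuple

# generate(design, previous_code, feedback) -> new code
Generator = Callable[[str, str, Optional[str]], str]
# critique(design, code) -> None if the render matches the design, else a note on mistakes
Critic = Callable[[str, str], Optional[str]]


def refine_loop(design: str, generate: Generator, critique: Critic,
                max_rounds: int = 5) -> Tuple[str, int]:
    """Generate code, check its render, and regenerate with the feedback until
    the critic is satisfied or `max_rounds` is reached.

    Returns the last code produced and the number of rounds used."""
    code, feedback = "", None
    for round_number in range(1, max_rounds + 1):
        code = generate(design, code, feedback)
        feedback = critique(design, code)
        if feedback is None:
            return code, round_number
    return code, max_rounds


# Fake stand-ins: each round adds one element; the critic wants three.
def fake_generate(design: str, previous_code: str, feedback: Optional[str]) -> str:
    return previous_code + "<div></div>"


def fake_critique(design: str, code: str) -> Optional[str]:
    return None if code.count("<div>") >= 3 else "missing elements"


print(refine_loop("mockup.png", fake_generate, fake_critique))
```

The `max_rounds` cap matters in practice: if the critic never stops finding mistakes, the loop still terminates.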

AI Meets Med School — from insidehighered.com by Lauren Coffey

Adding to academia’s AI embrace, two institutions in the University of Texas system are jointly offering a medical degree paired with a master’s in artificial intelligence.

The University of Texas at San Antonio has launched a dual-degree program combining medical school with a master’s in artificial intelligence.

Several universities across the nation have begun integrating AI into medical practice. Medical schools at the University of Florida, the University of Illinois, the University of Alabama at Birmingham and Stanford and Harvard Universities all offer variations of a certificate in AI in medicine that is largely geared toward existing professionals.

“I think schools are looking at, ‘How do we integrate and teach the uses of AI?’” Dr. Whelan said. “And in general, when there is an innovation, you want to integrate it into the curriculum at the right pace.”

Speaking of emerging technologies and med school, also see:

Though not necessarily edu-related, this was interesting to me and hopefully will be to some profs and/or students out there:

Wait, what?

Chat GPT-4V vision just directed a full-on product photography shoot for Halloween and Christmas!

It even gave me feedback pic.twitter.com/LiajGZrA0N

— Salma – Midjourney & SD AI Product Photographer (@Salmaaboukarr) September 29, 2023

How to stop AI deepfakes from sinking society — and science — from nature.com by Nicola Jones; via The Neuron

Deceptive videos and images created using generative AI could sway elections, crash stock markets and ruin reputations. Researchers are developing methods to limit their harm.

48+ hours since Chat GPT-4V has started rolling out for Plus and enterprise users.

Incredible use cases and potential.

10 of the best examples I’ve seen. So far…

— Alie Jules (@saana_ai) September 29, 2023

GPT-4V gives us a glimpse of the future, but ~GPT-5V~ will take us there.

Think of GPT-4V like GPT-3. Good for demos, and usable for a small set of real-world problems, but brittle.

GPT-3 paved the way for the much more reliable GPT-4. https://t.co/mpK9ZCElW1

— Matt Shumer (@mattshumer_) September 29, 2023

A couple things happened in the world of AI this week. Here’s my TLDR (which is getting so long it’ll need its own TLDR pretty soon):

– ChatGPT going multi-modal

– ChatGPT brings back the “browse” functionality

– OpenAI and Jony Ive working on iPhone for AI

– Anthropic partners…

— Matt Wolfe (@mreflow) October 1, 2023

Exploring the Impact of AI in Education with PowerSchool’s CEO & Chief Product Officer — from michaelbhorn.substack.com by Michael B. Horn

With just under 10 acquisitions in the last 5 years, PowerSchool has been active in transforming itself from a student information systems company to an integrated education company that works across the day and lifecycle of K–12 students and educators. What’s more, the company turned heads in June with its announcement that it was partnering with Microsoft to integrate AI into its PowerSchool Performance Matters and PowerSchool LearningNav products to empower educators in delivering transformative personalized-learning pathways for students.

AI Learning Design Workshop: The Trickiness of AI Bootcamps and the Digital Divide — from eliterate.us by Michael Feldstein

As readers of this series know, I’ve developed a six-session design/build workshop series for learning design teams to create an AI Learning Design Assistant (ALDA). In my last post in this series, I provided an elaborate ChatGPT prompt that can be used as a rapid prototype that everyone can try out and experiment with. In this post, I’d like to focus on how to address the challenges of AI literacy effectively and equitably.

Global AI Legislation Tracker — from iapp.org; via Tom Barrett

Countries worldwide are designing and implementing AI governance legislation commensurate to the velocity and variety of proliferating AI-powered technologies. Legislative efforts include the development of comprehensive legislation, focused legislation for specific use cases, and voluntary guidelines and standards.

This tracker identifies legislative policy and related developments in a subset of jurisdictions. It is not globally comprehensive, nor does it include all AI initiatives within each jurisdiction, given the rapid and widespread policymaking in this space. This tracker offers brief commentary on the wider AI context in specific jurisdictions, and lists index rankings provided by Tortoise Media, the first index to benchmark nations on their levels of investment, innovation and implementation of AI.

“AI is real”

JPMorgan CEO Jamie Dimon says artificial intelligence will be part of “every single process,” adding it’s already “doing all the equity hedging for us” https://t.co/EtsTbiME1a pic.twitter.com/J9YD4slOpv

— Bloomberg (@business) October 2, 2023

Learning Lab | ChatGPT in Higher Education: Exploring Use Cases and Designing Prompts — from events.educause.edu; via Robert Gibson on LinkedIn

Part 1: October 16 | 3:00–4:30 p.m. ET

Part 2: October 19 | 3:00–4:30 p.m. ET

Part 3: October 26 | 3:00–4:30 p.m. ET

Part 4: October 30 | 3:00–4:30 p.m. ET

Mapping AI’s Role in Education: Pioneering the Path to the Future — from marketscale.com by Michael B. Horn, Jacob Klein, and Laurence Holt

Welcome to The Future of Education with Michael B. Horn. In this insightful episode, Michael gains perspective on mapping AI’s role in education from Jacob Klein, a Product Consultant at Oko Labs, and Laurence Holt, an Entrepreneur In Residence at the XQ Institute. Together, they peer into the burgeoning world of AI in education, analyzing its potential, risks, and roadmap for integrating it seamlessly into learning environments.

Ten Wild Ways People Are Using ChatGPT’s New Vision Feature — from newsweek.com by Meghan Roos; via Superhuman

Below are 10 creative ways ChatGPT users are making use of this new vision feature.

Student Use Cases for AI: Start by Sharing These Guidelines with Your Class — from hbsp.harvard.edu by Ethan Mollick and Lilach Mollick

To help you explore some of the ways students can use this disruptive new technology to improve their learning—while making your job easier and more effective—we’ve written a series of articles that examine the following student use cases:

Recap: Teaching in the Age of AI (What’s Working, What’s Not) — from celt.olemiss.edu by Derek Bruff, visiting associate director

Earlier this week, CETL and AIG hosted a discussion among UM faculty and other instructors about teaching and AI this fall semester. We wanted to know what was working when it came to policies and assignments that responded to generative AI technologies like ChatGPT, Google Bard, Midjourney, DALL-E, and more. We were also interested in hearing what wasn’t working, as well as questions and concerns that the university community had about teaching and AI.

Teaching: Want your students to be skeptical of ChatGPT? Try this. — from chronicle.com by Beth McMurtrie

Then, in class he put them into groups where they worked together to generate a 500-word essay on “Why I Write” entirely through ChatGPT. Each group had complete freedom in how they chose to use the tool. The key: They were asked to evaluate their essay on how well it offered a personal perspective and demonstrated a critical reading of the piece. Weiss also graded each ChatGPT-written essay and included an explanation of why he came up with that particular grade.

After that, the students were asked to record their observations on the experiment on the discussion board. Then they came together again as a class to discuss the experiment.

Weiss shared some of his students’ comments with me (with their approval). Here are a few:

2023 EDUCAUSE Horizon Action Plan: Generative AI — from library.educause.edu by Jenay Robert and Nicole Muscanell

Asked to describe the state of generative AI that they would like to see in higher education 10 years from now, panelists collaboratively constructed their preferred future.

Will Teachers Listen to Feedback From AI? Researchers Are Betting on It — from edsurge.com by Olina Banerji

Julie York, a computer science and media teacher at South Portland High School in Maine, was scouring the internet for discussion tools for her class when she found TeachFX. An AI tool that takes recorded audio from a classroom and turns it into data about who talked and for how long, it seemed like a cool way for York to discuss issues of data privacy, consent and bias with her students. But York soon realized that TeachFX was meant for much more.

York found that TeachFX listened to her very carefully, and generated a detailed feedback report on her specific teaching style. York was hooked, in part because she says her school administration simply doesn’t have the time to observe teachers while tending to several other pressing concerns.

“I rarely ever get feedback on my teaching style. This was giving me 100 percent quantifiable data on how many questions I asked and how often I asked them in a 90-minute class,” York says. “It’s not a rubric. It’s a reflection.”

TeachFX is easy to use, York says. It’s as simple as switching on a recording device.

…

But TeachFX, she adds, is focused not on her students’ achievements, but instead on her performance as a teacher.
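TeachFX is described above as turning classroom audio into data about who talked and for how long. Its actual pipeline isn't described, so this is only a minimal sketch of the talk-time step, assuming the recording has already been split into speaker-labeled time segments; the `Segment` type and the "teacher"/"student" labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Segment(NamedTuple):
    speaker: str  # hypothetical label, e.g. "teacher" or "student"
    start: float  # seconds from the start of the recording
    end: float


def talk_time_shares(segments: Iterable[Segment]) -> Dict[str, float]:
    """Return each speaker's share (0.0 to 1.0) of total talk time."""
    totals: Dict[str, float] = defaultdict(float)
    for seg in segments:
        # Ignore malformed segments whose end precedes their start.
        totals[seg.speaker] += max(0.0, seg.end - seg.start)
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {speaker: t / grand_total for speaker, t in totals.items()}


segments = [
    Segment("teacher", 0, 120),
    Segment("student", 120, 150),
    Segment("teacher", 150, 240),
    Segment("student", 240, 300),
]
print(talk_time_shares(segments))
```

In this made-up five-minute clip the teacher speaks for 210 of 300 seconds, a 70% share; a report built on numbers like these is a reflection on teaching style rather than a rubric score.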

ChatGPT Is Landing Kids in the Principal’s Office, Survey Finds — from the74million.org by Mark Keierleber

While educators worry that students are using generative AI to cheat, a new report finds students are turning to the tool more for personal problems.

Indeed, 58% of students, and 72% of those in special education, said they’ve used generative AI during the 2022-23 academic year, just not primarily for the reasons that teachers fear most. Among youth who completed the nationally representative survey, just 23% said they used it for academic purposes and 19% said they’ve used the tools to help them write and submit a paper. Instead, 29% reported having used it to deal with anxiety or mental health issues, 22% for issues with friends and 16% for family conflicts.

Part of the disconnect dividing teachers and students, researchers found, may come down to gray areas. Just 40% of parents said they or their child were given guidance on ways they can use generative AI without running afoul of school rules. Only 24% of teachers say they’ve been trained on how to respond if they suspect a student used generative AI to cheat.

Embracing weirdness: What it means to use AI as a (writing) tool — from oneusefulthing.org by Ethan Mollick

AI is strange. We need to learn to use it.

But LLMs are not Google replacements, or thesauruses or grammar checkers. Instead, they are capable of so much more weird and useful help.

Diving Deep into AI: Navigating the L&D Landscape — from learningguild.com by Markus Bernhardt

The prospect of AI-powered, tailored, on-demand learning and performance support is exhilarating: It starts with traditional digital learning made into fully adaptive learning experiences, which would adjust to strengths and weaknesses for each individual learner. The possibilities extend all the way through to simulations and augmented reality, an environment to put into practice knowledge and skills, whether as individuals or working in a team simulation. The possibilities are immense.

Thanks to generative AI, such visions are transitioning from fiction to reality.

Video: Unleashing the Power of AI in L&D — from drphilippahardman.substack.com by Dr. Philippa Hardman

An exclusive video walkthrough of my keynote at Sweden’s national L&D conference this week

Highlights