AI-driven Legal Apprenticeships — from thebrainyacts.beehiiv.com by Josh Kubicki

Excerpts:

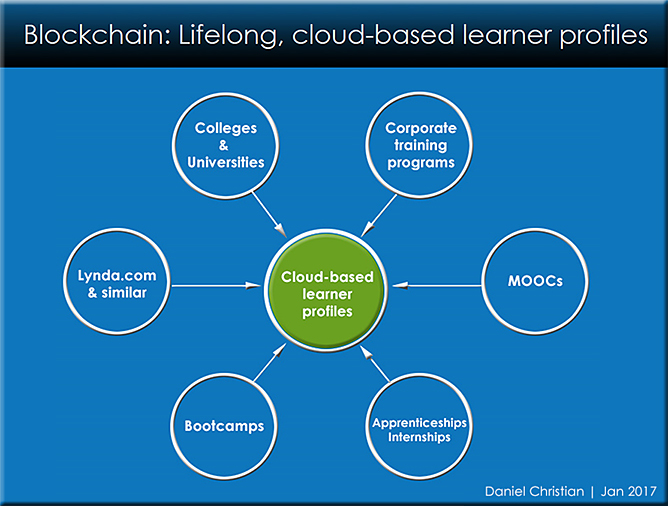

My hypothesis and research suggest that as bar associations and the ABA begin to recognize the ongoing systemic issues of high-cost legal education, growing legal deserts (where no lawyer serves a given population), ongoing and pervasive access-to-justice issues, and a public that is already weary of the legal system, alternative options that are already in play might gain more support.

What might that look like?

The combination of AI-assisted education with traditional legal apprenticeships has the potential to create a rich, flexible, and engaging learning environment. Here are three scenarios that might illustrate what such a combination could look like:


- Scenario One – Personalized Curriculum Development

- Scenario Two – On-Demand Tutoring and Mentoring

- Scenario Three – AI-assisted Peer Networks and Collaborative Learning

Why Companies Are Vastly Underprepared For The Risks Posed By AI — from forbes.com by

Accuracy, bias, security, culture, and trust are some of the risks involved

Excerpt:

We know that there are challenges – a threat to human jobs, the potential implications for cyber security and data theft, or perhaps even an existential threat to humanity as a whole. But we certainly don’t yet have a full understanding of all of the implications. In fact, a World Economic Forum report recently stated that organizations “may currently underappreciate AI-related risks,” with just four percent of leaders considering the risk level to be “significant.”

A survey carried out by the law firm Baker McKenzie concluded that many C-level leaders are overconfident in their assessments of organizational preparedness in relation to AI. In particular, it exposed concerns about the potential implications of biased data when used to make HR decisions.

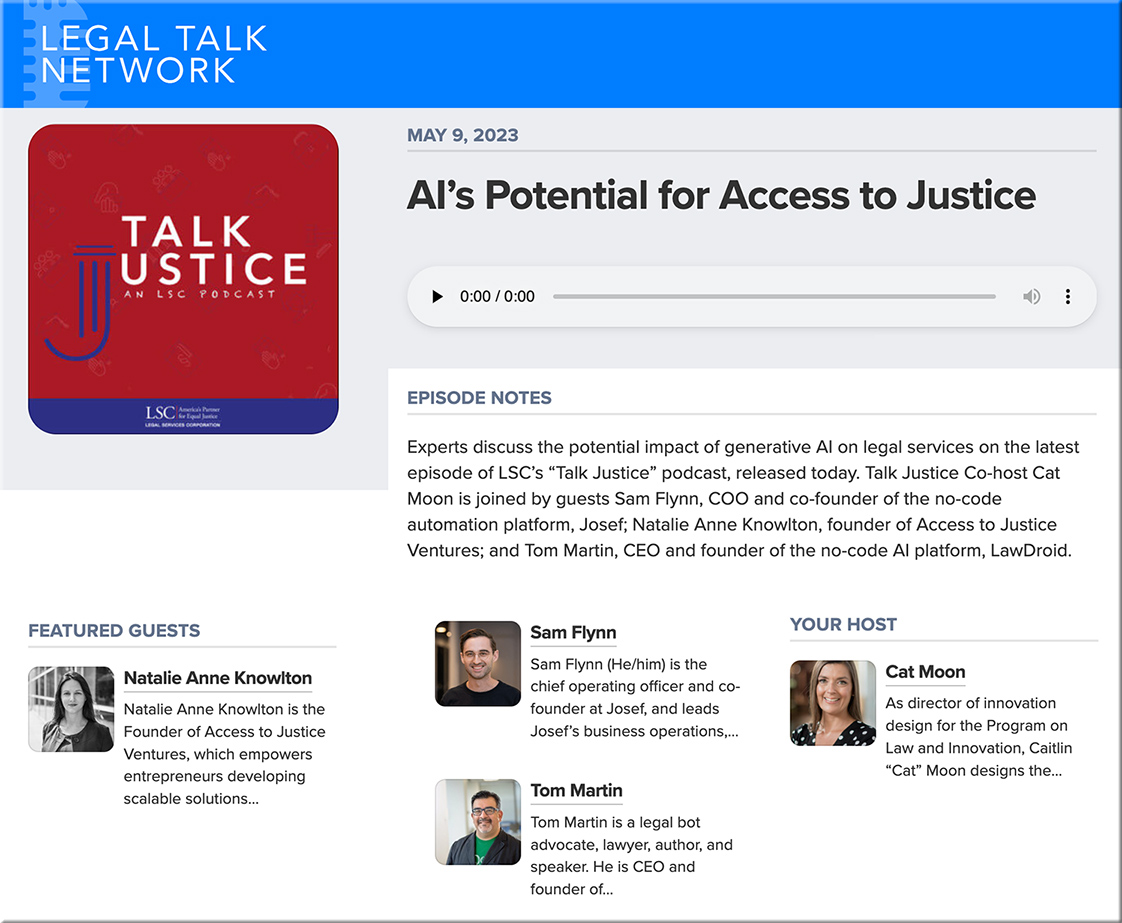

AI & lawyer training: How law firms can embrace hybrid learning & development — from thomsonreuters.com

A big part of law firms’ successful adaptation to the increased use of ChatGPT and other forms of generative AI may depend upon how firmly they embrace online learning & development tools designed for hybrid work environments.

Excerpt:

As law firms move forward in their use of advanced artificial intelligence such as ChatGPT and other forms of generative AI, their success may hinge upon how they approach lawyer training and development and what tools they enlist for the process.

One of the tools that some law firms use to deliver a new, multi-modal learning environment is an online, video-based learning platform, Hotshot, that delivers more than 250 on-demand courses on corporate, litigation, and business skills.

Ian Nelson, co-founder of Hotshot, says he has seen a dramatic change in how law firms are approaching learning & development (L&D) in the decade or so that Hotshot has been active. He believes the biggest change is that 10 years ago, firms hadn’t yet embraced the need to focus on training and development.

From DSC:

Heads up, law schools. Are you seeing/hearing this!?

- Are we moving more towards a lifelong learning model within law schools?

- If not, shouldn’t we be doing that?

- Are LL.M. programs expanding quickly enough? Is more needed?

Legal tech and innovation: 3 ways AI supports the evolution of legal ops — from lexology.com

Excerpts:

- Simplified legal spend analysis

- Faster contract review

- Streamlined document management

AI-assisted cheating isn’t a temptation if students have a reason to care about their own learning.

Yesterday I happened to listen to two different podcasts that ended up resonating with one another and with an idea that’s been rattling around inside my head amid all of this moral uproar about generative AI:

**If we trust students – and earn their trust in return – then they will be far less motivated to cheat with AI or in any other way.**

First, the question of motivation. On the Intentional Teaching podcast, while interviewing James Lang and Michelle Miller on the impact of generative AI, Derek Bruff points out (drawing on Lang’s Cheating Lessons book) that if students have “real motivation to get some meaning out of [an] activity, then there’s far less motivation to just have ChatGPT write it for them.” Real motivation and real meaning FOR THE STUDENT translate into an investment in doing the work themselves.

…

Then I hopped over to one of my favorite podcasts – Teaching in Higher Ed – where Bonni Stachowiak was interviewing Cate Denial about a “pedagogy of kindness,” which is predicated on trusting students and not seeing them as adversaries in the work we’re doing.

So the second key element is being kind and trusting students, which builds a culture of mutual respect and care that again diminishes the likelihood that they will cheat.

…

Again, human-centered learning design seems to address so many of the concerns and challenges of the current moment in higher ed. Maybe it’s time to actually practice it more consistently. #aiineducation #higheredteaching #inclusiveteaching