The Prompt #14: Your Guide to Custom Instructions — from noisemedia.ai by Alex Banks

Whilst we typically cover a single ‘prompt’ to use with ChatGPT, today we’re exploring a new feature now available to everyone: custom instructions.

You provide specific directions for ChatGPT leading to greater control of the output. It’s all about guiding the AI to get the responses you really want.

To get started:

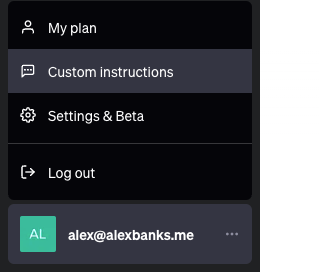

Log into ChatGPT → Click on your name/email in the bottom left corner → Select ‘Custom instructions’

Meet Zoom AI Companion, your new AI assistant! Unlock the benefits with a paid Zoom account — from blog.zoom.us by Smita Hashim

We’re excited to introduce you to AI Companion (formerly Zoom IQ), your new generative AI assistant across the Zoom platform. AI Companion empowers individuals by helping them be more productive, connect and collaborate with teammates, and improve their skills.

…

Envision being able to interact with AI Companion through a conversational interface and ask for help on a whole range of tasks, similarly to how you would with a real assistant. You’ll be able to ask it to help prepare for your upcoming meeting, get a consolidated summary of prior Zoom meetings and relevant chat threads, and even find relevant documents and tickets from connected third-party applications with your permission.

From DSC:

“You can ask AI Companion to catch you up on what you missed during a meeting in progress.”

And what if some key details were missed? Should you rely on this? I’d treat this with care/caution myself.

Via the Rundown

— Daniel Christian (he/him/his) (@dchristian5) September 6, 2023

A.I.’s un-learning problem: Researchers say it’s virtually impossible to make an A.I. model ‘forget’ the things it learns from private user data — from fortune.com by Stephen Pastis (behind paywall)

That’s because, as it turns out, it’s nearly impossible to remove a user’s data from a trained A.I. model without resetting the model and forfeiting the extensive money and effort put into training it. To use a human analogy, once an A.I. has “seen” something, there is no easy way to tell the model to “forget” what it saw. And deleting the model entirely is also surprisingly difficult.

This represents one of the thorniest, unresolved, challenges of our incipient artificial intelligence era, alongside issues like A.I. “hallucinations” and the difficulties of explaining certain A.I. outputs.

More companies see ChatGPT training as a hot job perk for office workers — from cnbc.com by Mikaela Cohen

Key points:

- Workplaces filled with artificial intelligence are closer to becoming a reality, making it essential that workers know how to use generative AI.

- Offering specific AI chatbot training to current employees could be your next best talent retention tactic.

- 90% of business leaders see ChatGPT as a beneficial skill in job applicants, according to a report from career site Resume Builder.

OpenAI Plugs ChatGPT Into Canva to Sharpen Its Competitive Edge in AI — from decrypt.co by Jose Antonio Lanz

Now ChatGPT Plus users can “talk” to Canva directly from OpenAI’s bot, making their workflow easier.

This strategic move aims to make the process of creating visuals such as logos, banners, and more, even more simple for businesses and entrepreneurs.

This latest integration could improve the way users generate visuals by offering a streamlined and user-friendly approach to digital design.

From DSC:

This Tweet addresses a likely component of our future learning ecosystems:

Excited to introduce YouPro for Education—your AI study buddy.

Access unlimited AI chat + search, unlimited AI writing generations, unlimited AI art generations, supercharged with GPT-4 and Stable Diffusion XL at just $6.99/month for students and teachers. pic.twitter.com/0t8zf0AaLr

— Richard Socher (@RichardSocher) August 30, 2023

Large language models aren’t people. Let’s stop testing them as if they were. — from technologyreview.com by Will Douglas Heaven

With hopes and fears about this technology running wild, it’s time to agree on what it can and can’t do.

That’s why a growing number of researchers—computer scientists, cognitive scientists, neuroscientists, linguists—want to overhaul the way they are assessed, calling for more rigorous and exhaustive evaluation. Some think that the practice of scoring machines on human tests is wrongheaded, period, and should be ditched.

“There’s a lot of anthropomorphizing going on,” she says. “And that’s kind of coloring the way that we think about these systems and how we test them.”

“There is a long history of developing methods to test the human mind,” says Laura Weidinger, a senior research scientist at Google DeepMind. “With large language models producing text that seems so human-like, it is tempting to assume that human psychology tests will be useful for evaluating them. But that’s not true: human psychology tests rely on many assumptions that may not hold for large language models.”

We Analyzed Millions of ChatGPT User Sessions: Visits are Down 29% since May, Programming Assistance is 30% of Use — from sparktoro.com by Rand Fishkin

In concert with the fine folks at Datos, whose opt-in, anonymized panel of 20M devices (desktop and mobile, covering 200+ countries) provides outstanding insight into what real people are doing on the web, we undertook a challenging project to answer at least some of the mystery surrounding ChatGPT.

“In terms of human capital development, for instance, AI can be the teacher that helps the national workforce upskill in both AI-related and other domains.”

Article via Robert Gibson on LinkedIn https://t.co/iYKkPavX1H

— Daniel Christian (he/him/his) (@dchristian5) September 4, 2023

Crypto in ‘arms race’ against AI-powered scams — Quantstamp co-founder — from cointelegraph.com by Tom Mitchelhill

Quantstamp’s Richard Ma explained that the coming surge in sophisticated AI phishing scams could pose an existential threat to crypto organizations.

With the field of artificial intelligence evolving at near breakneck speed, scammers now have access to tools that can help them execute highly sophisticated attacks en masse, warns the co-founder of Web3 security firm Quantstamp.