Course Awareness in HyFlex: Managing unequal participation numbers — from hyflexlearning.org by Candice Freeman

Excerpt:

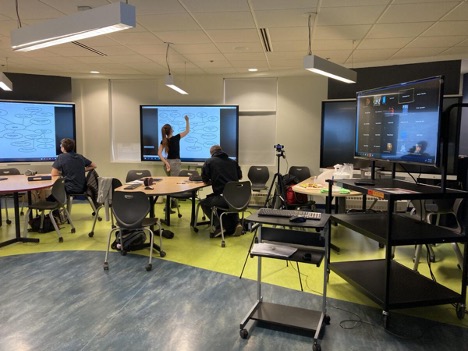

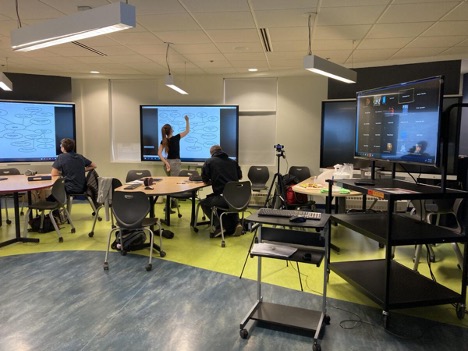

How do you teach a HyFlex course when the number of students in various participation modes is very unequal? How do you teach one student in a mode – often in the classroom? Conversely, how do you teach 50 asynchronous students with very few in the synchronous mode(s)? Answers will vary greatly from teacher to teacher. This article suggests a strategy called Course Awareness, a mindfulness technique designed to help teachers envision each learner as being in the instructor’s presence and engaged in the instruction regardless of participation (or attendance) mode choice.

From DSC:

I had understood the hyflex teaching model as addressing both online-based (i.e., virtual/not on-site) and on-site/physically-present students at the same time — and that each student could choose the manner in which they wanted to attend that day’s class. For example, on one day, a student could take the course in room 123 of Anderson Hall. The next time the class meets, that same student could attend from their dorm room.

But this article introduces — at least to me — the idea that we have a third method of participating in the hyflex model — asynchronously (i.e., not at the same time). So rather than making their way to Anderson Hall or attending from their dorm, that same student does not attend at the same time as other students (either virtually or physically). That student will likely check in with a variety of tools to catch up with — and contribute to — the discussions. As the article mentions:

Strategically, you need to employ web-based resources designed to gather real-time information over a specified period of time, capturing all students and not just students engaging live. For example, Mentimeter, PollEverywhere, and Sli.do allow the instructor to pose engaging, interactive questions without limiting engagement time to the instance the question is initially posed. These tools are designed to support both synchronous and asynchronous participation.

So it will be interesting to see how our learning ecosystems morph in this area. Will there be other new affordances, pedagogies, and tools that take into consideration that the faculty members are addressing synchronous and asynchronous students as well as online and physically present students? Hmmm…more experimentation is needed here, as well as more:

- Research

- Instructional design

- Design thinking

- Feedback from students and faculty members

Will this type of model work best in the higher education learning ecosystem but not the K-12 learning ecosystem? Will it thrive with employees within the corporate realm? Hmmm…again, time will tell.

And to add another layer to the teaching and learning onion, now let’s talk about multimodal learning. This article, “How to support multimodal learning” by Monica Burns, mentions that:

Multimodal learning is a teaching concept where using different senses simultaneously helps students interact with content at a deeper level. In the same way we learn through multiple types of media in our own journey as lifelong learners, students can benefit from this type of learning experience.

The only comment I have here is that if you think that throwing a warm body into a K-12 classroom fixes the problem of teachers leaving the field, you haven’t a clue how complex this teaching and learning onion is. Good luck to all of those people who are being thrown into the deep end and essentially being told to sink or swim.


![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)