It’s The End Of The Legal Industry As We Know It — from artificiallawyer.com by Richard Tromans

It’s the end of the legal industry as we know it and I feel fine. I really do.

The legal industry as we know it is already over. The seismic event that triggered this evolutionary shift happened in November 2022. There’s no going back to a pre-genAI world. Change, incremental or otherwise, will be unstoppable. The only question is: at what pace will this change happen?

It’s clear that substantive change at the heart of the legal economy may take a long time – and we should never underestimate the challenge of overturning decades of deeply embedded cultural practices – but at least it has begun.

AI: The New Legal Powerhouse — Why Lawyers Should Befriend The Machine To Stay Ahead — from today.westlaw.com

(October 24, 2024) – Jeremy Glaser and Sharzaad Borna of Mintz discuss waves of change in the legal profession brought on by AI, in areas such as billing, the work of support staff and junior associates, and ethics.

The dual nature of AI — excitement and fear

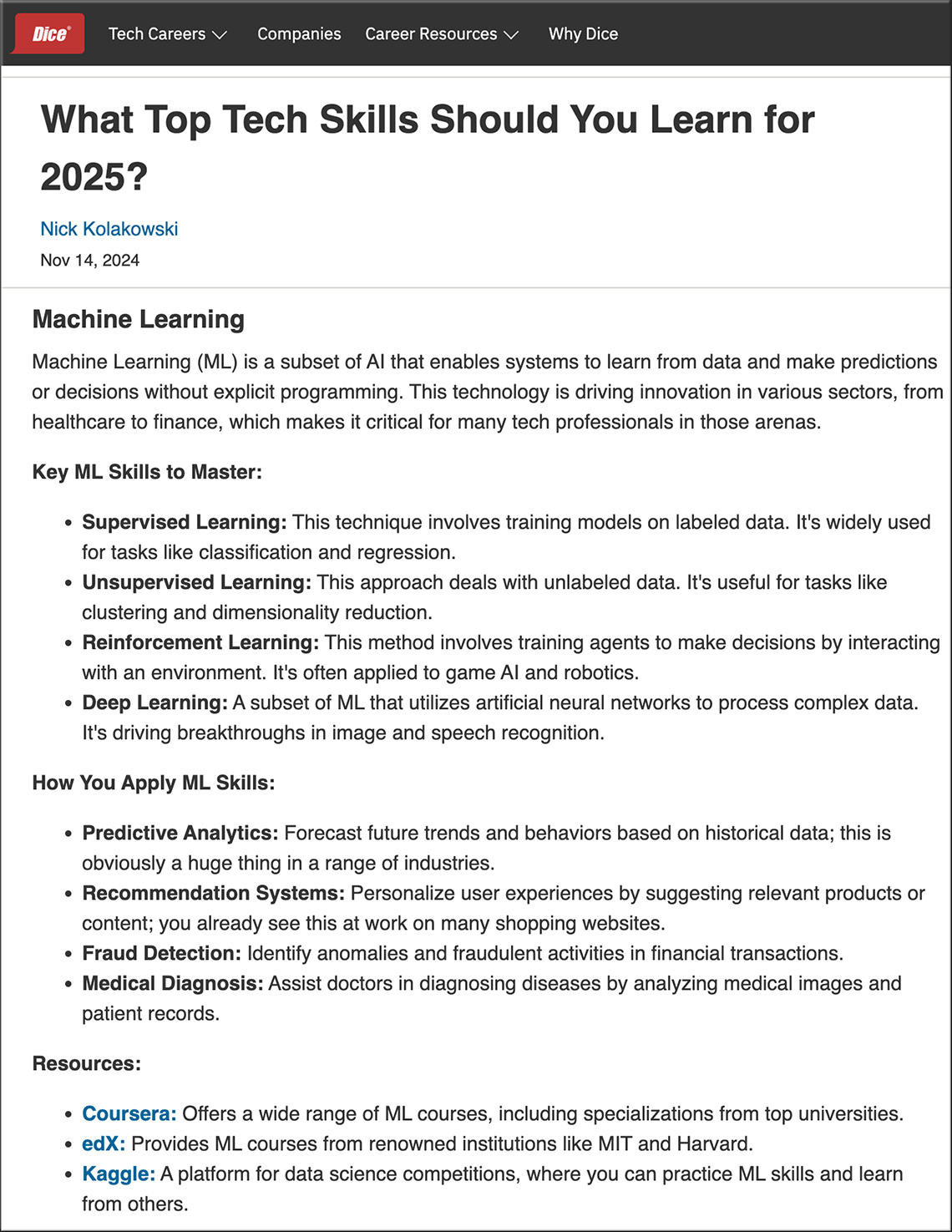

AI is evolving at lightning speed, sparking both wonder and worry. As it transforms industries and our daily lives, we are caught between the thrill of innovation and the jitters of uncertainty. Will AI elevate the human experience or just leave us in the dust? How will it impact our careers, privacy and sense of security?

Just as we witnessed with the rise of the internet — and later, social media — AI is poised to redefine how we work and live, bringing a mix of optimism and apprehension. While we grapple with AI’s implications, our clients expect us to lead the charge in leveraging it for their benefit.

However, this shift also means more competition for fewer entry-level jobs. Law schools will play a key role in helping students become more marketable by offering courses on AI tools and technology. Graduates with AI literacy will have an edge over their peers, as firms increasingly value associates who can collaborate effectively with AI tools.

Will YOU use ChatGPT voice mode to lie to your family? Brainyacts #244 — from thebrainyacts.beehiiv.com by Sam Douthit, Aristotle Jones, and Derek Warzel.

Small Law’s Secret Weapon: AI Courtroom Mock Battles — this excerpt is by Brainyacts author Josh Kubicki

As many of you know, this semester my law students have the opportunity to write the lead memo for this newsletter, each tackling issues that they believe are both timely and intriguing for our readers. This week’s essay presents a fascinating experiment conducted by three students who explored how small law firms might leverage ChatGPT in a safe, effective manner. They set up ChatGPT to simulate a mock courtroom, even assigning it the persona of a Seventh Circuit Court judge to stage a courtroom dialogue. It’s an insightful take on how adaptable technology like ChatGPT can offer unique advantages to smaller practices. They share other ideas as well. Enjoy!

The following excerpt was written by Sam Douthit, Aristotle Jones, and Derek Warzel.

One exciting example is a “Courtroom Persona AI” tool, which could let solo practitioners simulate mock trials and practice arguments with AI that mimics specific judges, local courtroom customs, or procedural quirks. Small firms, with their deep understanding of local courts and judicial styles, could take full advantage of this tool to prepare more accurate and relevant arguments. Unlike big firms that have to spread resources across jurisdictions, solo and small firms could use this AI-driven feedback to tailor their strategies closely to local court dynamics, making their preparations sharper and more strategic. Plus, not all solo or small firms have someone to practice with or bounce their ideas off of. For these practitioners, it’s a chance to level up their trial preparation without needing large teams or costly mock trials, gaining a practical edge where it counts most.

Some lawyers have already started testing this, as in the mock trial tested out here. One quick, if oversimplified, way to try it is to use the ChatGPT app.
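
The mock-courtroom setup described above could be sketched as a small prompt builder. This is only an illustration, not the students' actual setup: the `JudgePersona` class, the `build_mock_hearing_messages` function, and the prompt wording are all hypothetical, and the role/content message layout is an assumption about what a chat interface accepts. The code makes no network calls; the text it produces could simply be pasted into the ChatGPT app.

```python
from dataclasses import dataclass, field


@dataclass
class JudgePersona:
    """Hypothetical description of the judge the model is asked to role-play."""
    court: str
    style_notes: list[str] = field(default_factory=list)


def build_mock_hearing_messages(
    persona: JudgePersona, case_summary: str, opening_argument: str
) -> list[dict[str, str]]:
    """Return a system message (the persona) and a user message (the argument)."""
    # Turn the style notes into a bulleted list, or say that none were given.
    notes = "\n".join(f"- {note}" for note in persona.style_notes)
    if not notes:
        notes = "- (no style notes provided)"

    system_prompt = (
        f"You are role-playing a judge of the {persona.court} in a practice session.\n"
        "Question counsel as that judge would, then give brief feedback.\n"
        f"Style notes:\n{notes}"
    )
    user_prompt = (
        f"Case summary: {case_summary}\n\n"
        f"Counsel's opening argument: {opening_argument}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    # Illustrative values only; none of them come from the original experiment.
    persona = JudgePersona(
        court="Seventh Circuit Court",
        style_notes=["asks pointed questions", "expects concise answers"],
    )
    messages = build_mock_hearing_messages(
        persona,
        case_summary="Appeal in a contract dispute over a missed delivery date.",
        opening_argument="May it please the court, the contract set no firm deadline.",
    )
    for message in messages:
        print(f"[{message['role']}]\n{message['content']}\n")
```

Keeping the persona separate from the argument means a practitioner could reuse the same judge description across several practice runs and change only the case details.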

The Human in AI-Assisted Dispute Resolution — from jdsupra.com by Epiq

Accountability for Legal Outputs

AI is set to replace some of the dispute resolution work formerly done by lawyers. This work includes summarising documents, drafting legal contracts and filings, using generative AI to produce arbitration submissions for an oral hearing, and, in the not-too-distant future, ingesting transcripts from hearings and comparing them to the documentary record to spot inconsistencies.

As Pendell put it, “There’s quite a bit of lawyering going on there.” So, what’s left for humans?

The common feature in all those examples is that humans must make the judgement call. Lawyers won’t just turn over a first draft of an AI-generated contract or filing to another party or court. The driving factor is that law is still a regulated profession, and regulators will hold humans accountable.

The idea that young lawyers must do routine, menial work as a rite of passage needs to be updated. Today’s AI tools put lawyers at the top of an accountability chain, allowing them to practice law using judgement and strategy as they supervise the work of AI.

Small law firms embracing AI as they move away from hourly billing — from legalfutures.co.uk by Neil Rose

Small law firms have embraced artificial intelligence (AI), with document drafting or automation the most popular application, according to new research.

The survey also found expectations of a continued move away from hourly billing to fixed fees.

Legal technology provider Clio commissioned UK-specific research from Censuswide as an adjunct to its annual US-focused Legal Trends report, polling 500 solicitors, 82% of whom worked at firms with 20 lawyers or fewer.

Some 96% of them reported that their firms have adopted AI into their processes in some way – 56% of them said it was widespread or universal – while 62% anticipated an increase in AI usage over the next 12 months.