Stanford’s 2023 AI Index Report — from aiindex.stanford.edu

AI Aids In Connecting Learning and Performance Ecosystems — from learningguild.com by Markus Bernhardt and Teresa Rose

Excerpt:

The ways in which employees access information, surface answers to questions, and find the right subject matter experts are shifting and drastically improving. With this, so is access to and potential efficiency of formal learning and training.

The question is, how do these elements fuse together in the reimagined ecosystem? What will performance support, formal learning and training, or upskilling and reskilling look like when we combine the best of digital and asynchronous tools, as well as synchronous and in-person endeavors?

Power Performance with Microlearning’s Purpose and Potential — from learningguild.com by Robyn Defelice

Excerpt:

Because we are dealing in performance-based microlearning, each campaign and product will have its own purpose and potential (P&P).

The P&P are not derived from the organization’s definition but from the goals of the campaign itself.

…

Performance Pathways (Purpose) and Use Cases (Potential) provide an opportunity to think through the alignment to a campaign goal while preparing for the design of the microlearning products.

Augmented Reality: Your Next Step Into Immersive Learning — from learningguild.com by Bill Brandon

Excerpt:

I asked Debbie Richard for her thoughts on the uses of AR and immersive learning. Debbie is the founder and president of Creative Interactive Ideas, where she helps talent development professionals thrive and flourish in their careers.

Debbie offered this reply:

“Developing an augmented reality experience is a great way for instructional designers to get started with immersive learning. There are a number of AR development applications that require little to no programming skills. All the learner will need to access the experience is a smart device.

Some great examples of using augmented reality in immersive learning are:

GPT-4 Is a Reasoning Engine — from every.to by Dan Shipper

Reason is only as good as the information we give it

Excerpt:

Even though our AI models were trained by reading the whole internet, that training mostly enhances their reasoning abilities, not how much they know. And so, the performance of today’s AI models is constrained by their lack of knowledge.

I saw Sam Altman speak at a small Sequoia event in SF last week, and he emphasized this exact point: GPT models are actually reasoning engines, not knowledge databases.

This is crucial to understand because it predicts that advances in the usefulness of AI will come from advances in its ability to access the right knowledge at the right time—not just from advances in its reasoning powers.

Why? It’s expensive and time-consuming to find information that’s relevant to the things you think about. Even if you give AI access to a search engine, so it can make queries to find the right information—it’ll cost you money and time.

If, instead, you’ve spent a lifetime gathering and curating information that’s important to you, you can customize your AI experience so it’s more useful to you right off the bat.

Why some college professors are adopting ChatGPT AI as quickly as students — from cnbc.com by Carolyn Chun

Also relevant/see:

5 AI-Driven Healthcare Trends and Solutions in 2023 — from hitconsultant.net by Dmitrii Evstiukhin

Excerpt:

Healthcare enterprises are quickly embracing artificial intelligence solutions to mitigate loss, streamline operations, improve efficiency, and enhance customer service.

Learn about the latest trends and solutions in healthcare AI, obstacles to AI adoption, and how artificial intelligence is rapidly transforming standards of patient care in 2023.

Also relevant/see:

Learning Designers will have to adapt or die. 10 ways to UPSKILL to AI…. — from donaldclarkplanb.blogspot.com by Donald Clark

From Ethan Mollick on LinkedIn:

Take a look at this simulated negotiation, with grading and feedback. Prompt: “I want to do deliberate practice about how to conduct negotiations. You will be my negotiation teacher. You will simulate a detailed scenario in which I have to engage in a negotiation. You will fill the role of one party, I will fill the role of the other. You will ask for my response in each step of the scenario and wait until you receive it. After getting my response, you will give me details of what the other party does and says. You will grade my response and give me detailed feedback about what to do better using the science of negotiation. You will give me a harder scenario if I do well, and an easier one if I fail.”

Samples from Bing Creative mode and ChatGPT-4 (3.5, the free version, does not work as well)

I’m having a blast with ChatGPT – it’s testing ME! — by Mark Mrohs

Using ChatGPT as an agent for asynchronous active learning

I have been experimenting with possible ways to incorporate interactions with ChatGPT into instruction. And I’m blown away. I want to show you some of what I’ve come up with.

Canva’s New AI Wonder Tools — from wondertools.substack.com by Jeremy Caplan

A magic eraser, a branding kit, AI presentations, and more new features

Excerpt:

Canva launched a bunch of new features at a live event viewed by 1.5 million people globally. The Australian company is no longer an upstart. 125 million people use it monthly, including 13 million paid subscribers yielding $1.4 billion in revenue. Canva’s increasingly competing with Adobe to help people create eye-catching visuals. Here are its most useful new tricks.

How to use AI to do practical stuff: A new guide — from oneusefulthing.substack.com by Ethan Mollick

People often ask me how to use AI. Here’s an overview with lots of links.

Excerpts:

We live in an era of practical AI, but many people haven’t yet experienced it, or, if they have, they might have wondered what the big deal is. Thus, this guide. It is a modified version of one I put out for my students earlier in the year, but a lot has changed. It is an overview of ways to get AI to do practical things.

…

I want to try to show you some of why AI is powerful, in ways both exciting and anxiety-producing.

Also see Ethan’s posting:

Power and Weirdness: How to Use Bing AI

Bing AI is a huge leap over ChatGPT, but you have to learn its quirks

Teaching: What You Can Learn From Students About ChatGPT — from chronicle.com by Beth McMurtrie

Excerpts (emphasis DSC):

Like a lot of you, I have been wondering how students are reacting to the rapid launch of generative AI tools. And I wanted to point you to creative ways in which professors and teaching experts have helped involve them in research and policymaking.

At Kalamazoo College, Autumn Hostetter, a psychology professor, and six of her students surveyed faculty members and students to determine whether they could detect an AI-written essay, and what they thought of the ethics of using various AI tools in writing. You can read their research paper here.

…

Next, participants were asked about a range of scenarios, such as using Grammarly, using AI to make an outline for a paper, using AI to write a section of a paper, looking up a concept on Google and copying it directly into a paper, and using AI to write an entire paper. As expected, commonly used tools like Grammarly were considered the most ethical, while writing a paper entirely with AI was considered the least. But researchers found variation in how people approached the in-between scenarios. Perhaps most interesting: Students and faculty members shared very similar views on each scenario.

Also relevant/see:

This Was Written By a Human: A Real Educator’s Thoughts on Teaching in the Age of ChatGPT — from er.educause.edu by Jered Borup

The well-founded concerns surrounding ChatGPT shouldn’t distract us from considering how it might be useful.

DC: Fire can be used to warm up people, a room or even a house. It can provide light. It can help cook a meal.

At the same time, fire can completely destroy a house, neighborhood, or acres of wooded lands.

Is #AI similar? Is it how we use it? Do we even know how to use it yet? pic.twitter.com/bKaOdyUUGg

— Daniel Christian (he/him/his) (@dchristian5) March 31, 2023

From DSC:

After seeing this…

“Make me an app”—just talk to your @Replit app to make software pic.twitter.com/U1v5m5Un1U

— Amjad Masad (@amasad) March 24, 2023

…I wondered:

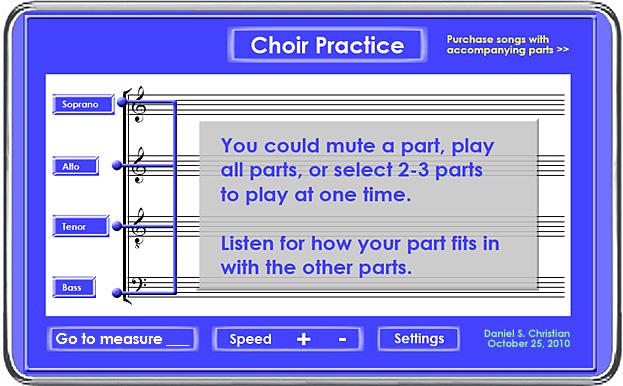

This line of thought reminded me of a posting I wrote back on 10/27/2010 entitled “For those institutions (or individuals) who might want to make a few million.”

And I want to say that when I went back to look at this posting, I was a bit ashamed of myself. I’d like to apologize for the times when I’ve been too excited about something and exaggerated or hyped up an idea on this Learning Ecosystems blog. For example, I talked about making millions of dollars in the title…and that probably wouldn’t be the case these days. (But with inflation being what it is, heh…who knows!? Maybe I shouldn’t be too hard on myself.) I just had choirs in mind when I posted the idea…and there aren’t as many choirs around these days. 🙂

a big deal: @elonmusk, Y. Bengio, S. Russell, @tegmark, V. Kraknova, P. Maes, @Grady_Booch, @AndrewYang, @tristanharris & over 1,000 others, including me, have called for a temporary pause on training systems exceeding GPT-4 https://t.co/PJ5YFu0xm9

— Gary Marcus (@GaryMarcus) March 29, 2023

The above Tweet links to:

Pause Giant AI Experiments: An Open Letter — from futureoflife.org

We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.

Elon Musk, Steve Wozniak and dozens of top scientists concerned about the technology moving too fast have signed an open letter asking companies to pull back on artificial intelligence. @trevorlault reports on the new A.I. plea. pic.twitter.com/Vu9QlKfV8C

— Good Morning America (@GMA) March 30, 2023

However, the letter has since received heavy backlash, in part because signatures do not appear to have been verified. Yann LeCun of Meta denied signing the letter and said he disagreed with its premise. (source)

Nope.

I did not sign this letter.

I disagree with its premise. https://t.co/DoXwIZDcOx

— Yann LeCun (@ylecun) March 29, 2023

In Sudden Alarm, Tech Doyens Call for a Pause on ChatGPT — from wired.com by Will Knight (behind paywall)

Tech luminaries, renowned scientists, and Elon Musk warn of an “out-of-control race” to develop and deploy ever-more-powerful AI systems.

1/The call for a 6 month moratorium on making AI progress beyond GPT-4 is a terrible idea.

I'm seeing many new applications in education, healthcare, food, … that'll help many people. Improving GPT-4 will help. Let's balance the huge value AI is creating vs. realistic risks.

— Andrew Ng (@AndrewYNg) March 29, 2023

Evolving Zoom IQ, our smart companion, with new features and a collaboration with OpenAI — from blog.zoom.us

Excerpt:

Today we’re announcing that we’re evolving the capabilities of Zoom IQ to become a smart companion that empowers collaboration and unlocks people’s potential by summarizing chat threads, organizing ideas, drafting content for chats, emails, and whiteboard sessions, creating meeting agendas, and more.