The mounting human and environmental costs of generative AI — by Sasha Luccioni

Op-ed: Planetary impacts, escalating financial costs, and labor exploitation all factor.

Excerpt:

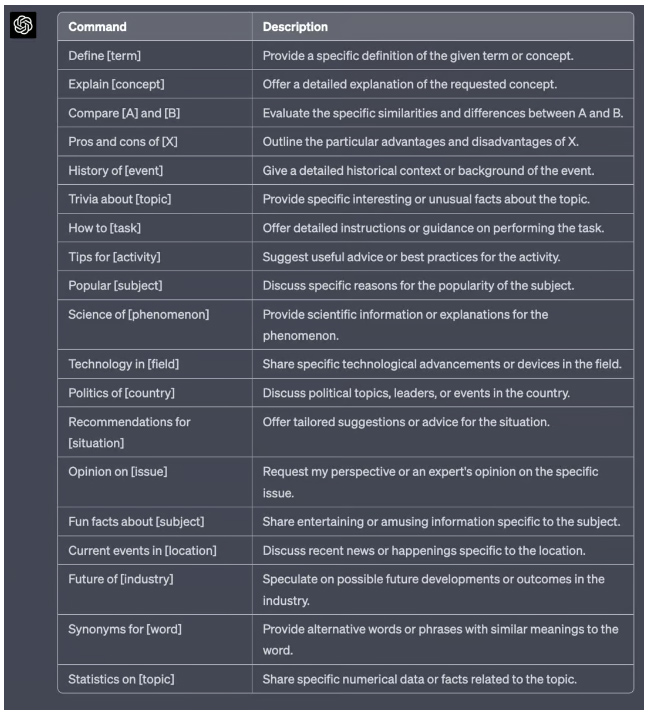

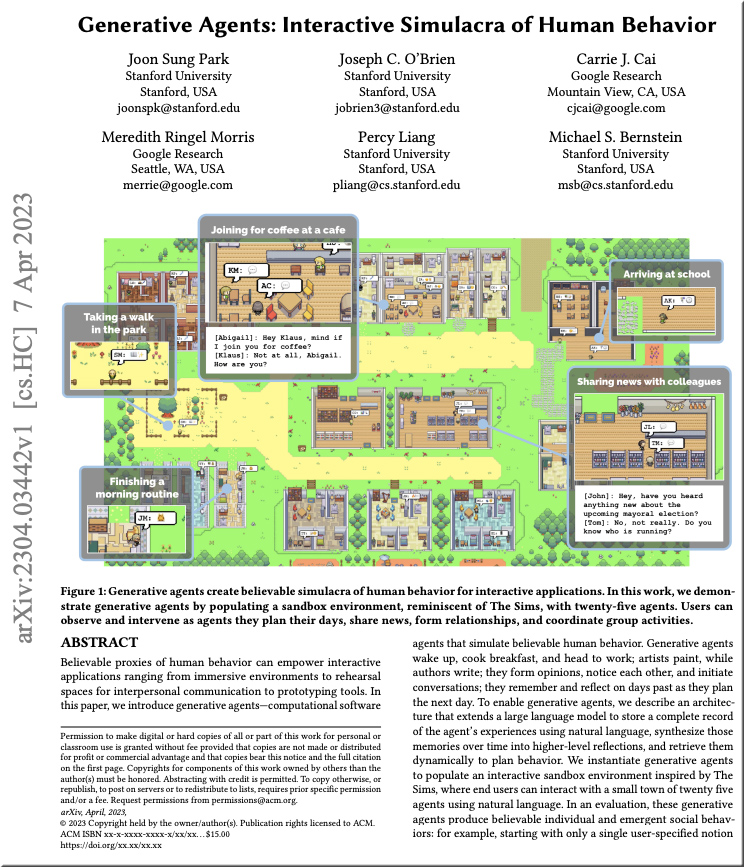

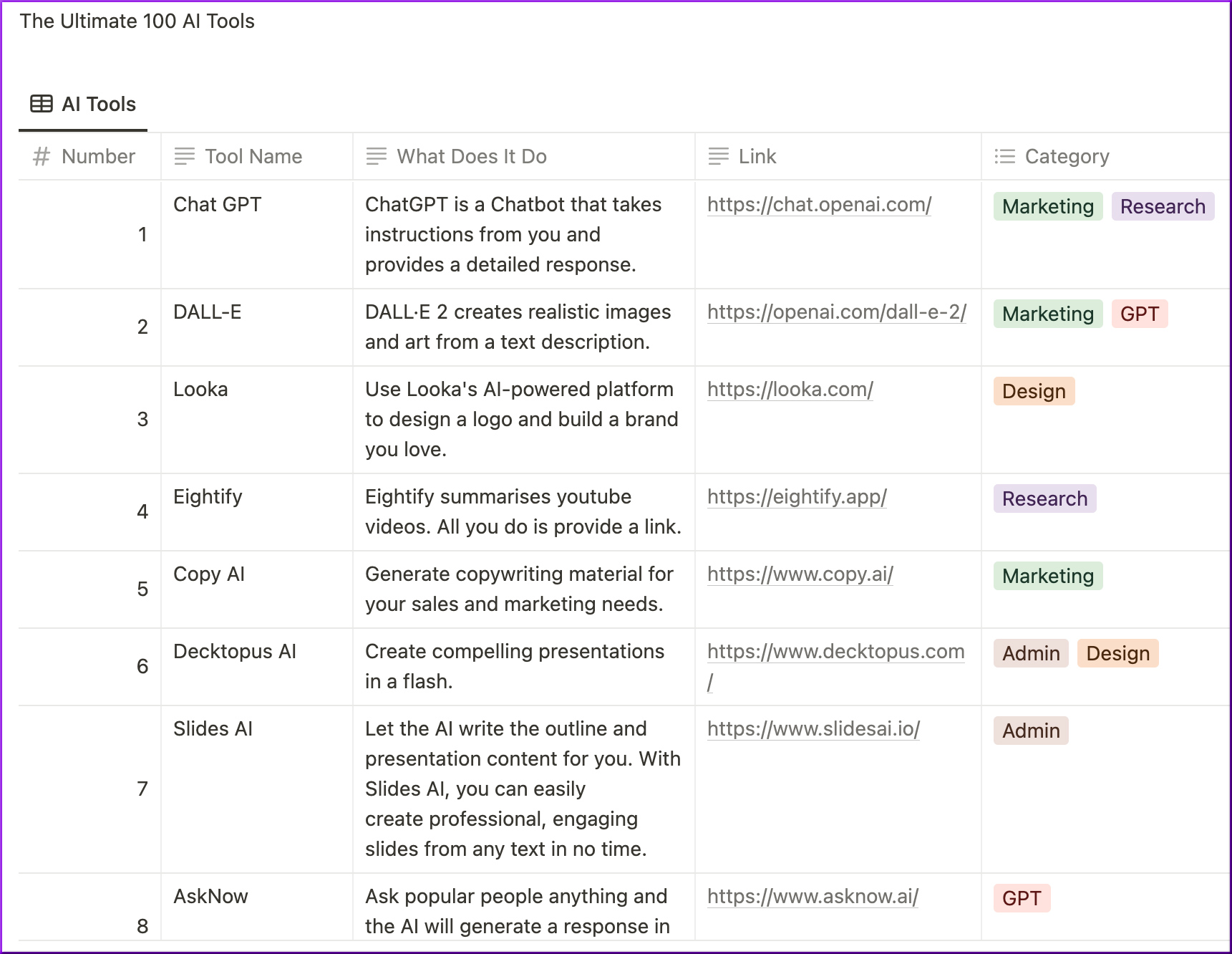

Over the past few months, the field of artificial intelligence has seen rapid growth, with wave after wave of new models like Dall-E and GPT-4 emerging one after another. Every week brings the promise of new and exciting models, products, and tools. It’s easy to get swept up in the waves of hype, but these shiny capabilities come at a real cost to society and the planet.

Downsides include the environmental toll of mining rare minerals, the human costs of the labor-intensive process of data annotation, and the escalating financial investment required to train AI models as they incorporate more parameters.

Let’s look at the innovations that have fueled recent generations of these models—and raised their associated costs.

Also relevant/see:

ChatGPT is Thirsty: a mini exec briefing — from BrainyActs #040 | ChatGPT’s Thirst Problem

Between the growing number of data centers + the exponential growth in consumer-grade Generative AI tools, water is becoming a scarce resource.