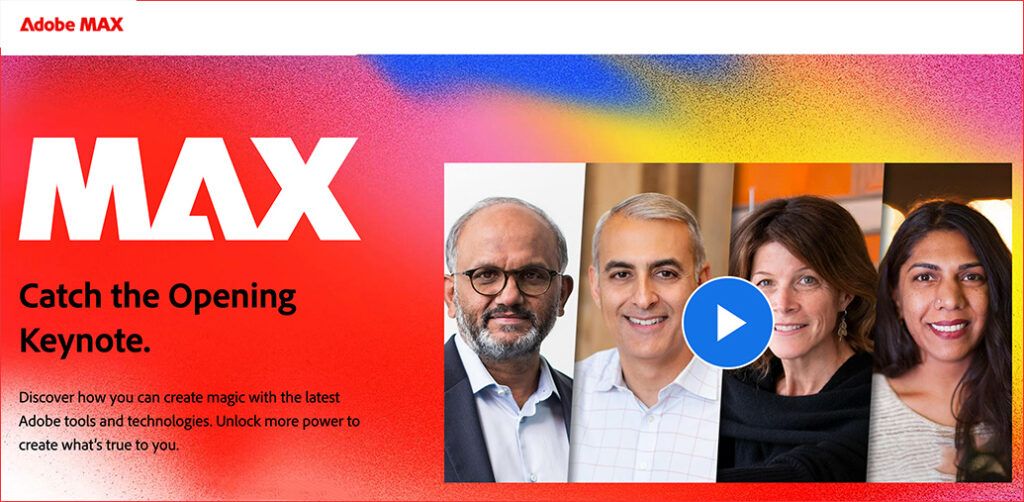

Adobe Reinvents its Entire Creative Suite with AI Co-Pilots, Custom Models, and a New Open Platform — from theneuron.ai by Grant Harvey

Adobe just put an AI co-pilot in every one of its apps, letting you chat with Photoshop, train models on your own style, and generate entire videos with a single subscription that now includes top models from Google, Runway, and Pika.

Adobe came to play, y’all.

At Adobe MAX 2025 in Los Angeles, the company dropped an entire creative AI ecosystem that touches every single part of the creative workflow. In our opinion, these new features aren’t about replacing creators; they’re about empowering them with superpowers they can actually control.

Adobe’s new plan is to put an AI co-pilot in every single app.

- For professionals, the game-changer is Firefly Custom Models. Start training one now to create a consistent, on-brand look for all your assets.

- For everyday creators, the AI Assistants in Photoshop and Express will drastically speed up your workflow.

- The best place to start is the Photoshop AI Assistant (currently in private beta), which offers a powerful glimpse into the future of creative software—a future where you’re less of a button-pusher and more of a creative director.

Adobe MAX Day 2: The Storyteller Is Still King, But AI Is Their New Superpower — from theneuron.ai by Grant Harvey

Adobe’s Day 2 keynote showcased a suite of AI-powered creative tools designed to accelerate workflows, but the real message from creators like Mark Rober and James Gunn was clear: technology serves the story, not the other way around.

On the second day of its annual MAX conference, Adobe drove home a message that has been echoing through the creative industry for the past year: AI is not a replacement, but a partner. The keynote stage featured a powerful trio of modern storytellers—YouTube creator Brandon Baum, science educator and viral video wizard Mark Rober, and Hollywood director James Gunn—who each offered a unique perspective on a shared theme: technology is a powerful tool, but human instinct, hard work, and the timeless art of storytelling remain paramount.

From DSC:

As Grant mentioned, the demos dealt with ideation, image generation, video generation, audio generation, and editing.

Adobe Max 2025: all the latest creative tools and AI announcements — from theverge.com by Jess Weatherbed

The creative software giant is launching new generative AI tools that make digital voiceovers and custom soundtracks for videos, and adding AI assistants to Express and Photoshop for web that edit entire projects using descriptive prompts. And that’s just the start, because Adobe is planning to eventually bring AI assistants to all of its design apps.

Also see “Adobe Delivers New AI Innovations, Assistants and Models Across Creative Cloud to Empower Creative Professionals,” plus other items from Adobe’s News section.