Helping Neurodiverse Students Learn Through New Classroom Design — from insidehighered.com by Michael Tyre

Michael Tyre offers some insights into how architects and administrators can work together to create better learning environments for everyone.

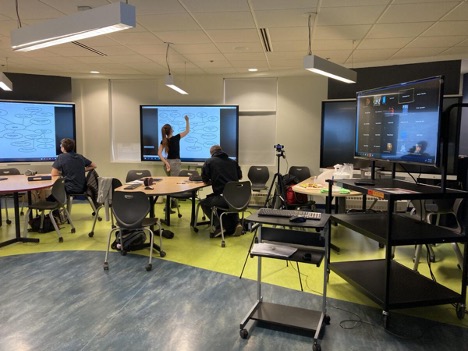

We emerged with two guiding principles. First, we had learned that certain environments, in particular those that cause sensory distraction, can have a greater impact on neurodivergent users. Therefore, our design should minimize distractions by reducing, where possible, noise, visual contrast, reflective surfaces and crowds. Second, we understood that we needed a design that gave neurodivergent users the agency of choice.

The importance of those two factors—a dearth of distraction and an abundance of choice—was bolstered in early workshops with the classroom committee and other stakeholders, which occurred at the same time we were conducting our research. Some things didn’t come up in our research but were made quite clear in our conversations with faculty members, students from the neurodivergent community and other stakeholders. That feedback greatly influenced the design of the Young Classroom.

We ended up blending the two concepts. The main academic space utilizes traditional tables and chairs, albeit in a variety of heights and sizes, while the peripheral classroom spaces use an array of less traditional seating and table configurations, similar to the radical approach.

On a somewhat related note, also see:

Unpacking Fingerprint Culture — from marymyatt.substack.com by Mary Myatt

This post summarises a fascinating webinar I had with Rachel Higginson discussing the elements of building belonging in our settings.

We know that belonging is important and one of the ways to make this explicit in our settings is to consider what it takes to cultivate an inclusive environment where each individual feels valued and understood.

Rachel has spent several years working with young people, particularly those on the periphery of education, helping them return to mainstream education and participate in class alongside their peers.

Rachel’s work helping young people to integrate back into education resulted in schools requesting support and resources to embed inclusion within their settings. As a result, Finding My Voice has evolved into a broader curriculum development framework.

![The Living [Class] Room -- by Daniel Christian -- July 2012 -- a second device used in conjunction with a Smart/Connected TV](http://danielschristian.com/learning-ecosystems/wp-content/uploads/2012/07/The-Living-Class-Room-Daniel-S-Christian-July-2012.jpg)

Podcasting studio at FUSE Workspace in Houston, TX.